Last quarter, a payments architect I know watched their company's autonomous purchasing agent approve $47,000 in cloud infrastructure spend (correctly, according to its parameters) without any human seeing the transaction until the monthly reconciliation. The CFO's reaction wasn't gratitude for the efficiency. It was a three-hour emergency meeting about "what else is this thing doing with our money."

If you're building or evaluating agentic payment systems in 2026, you've probably felt some version of this tension. The technology works. The trust architecture doesn't.

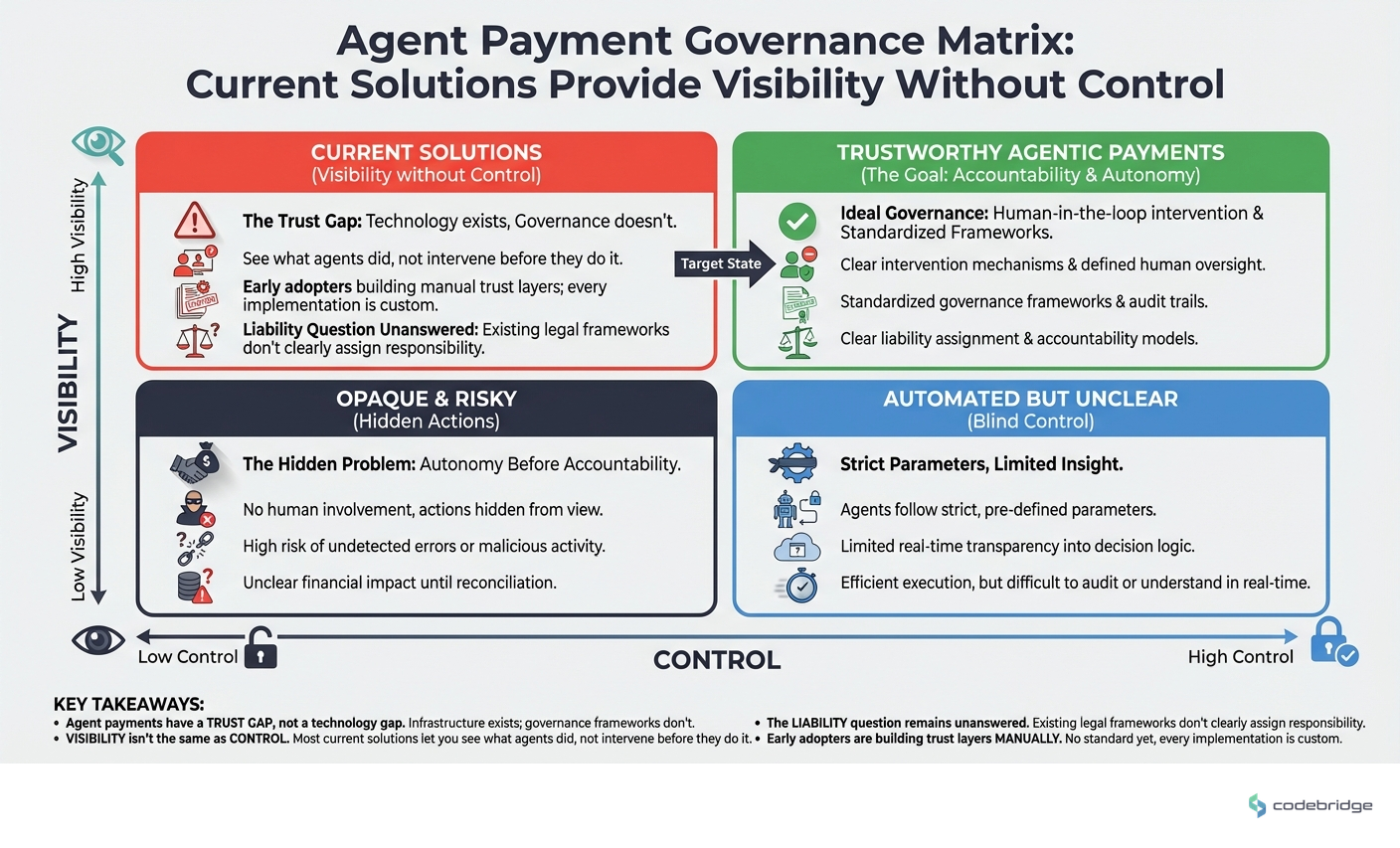

KEY TAKEAWAYS

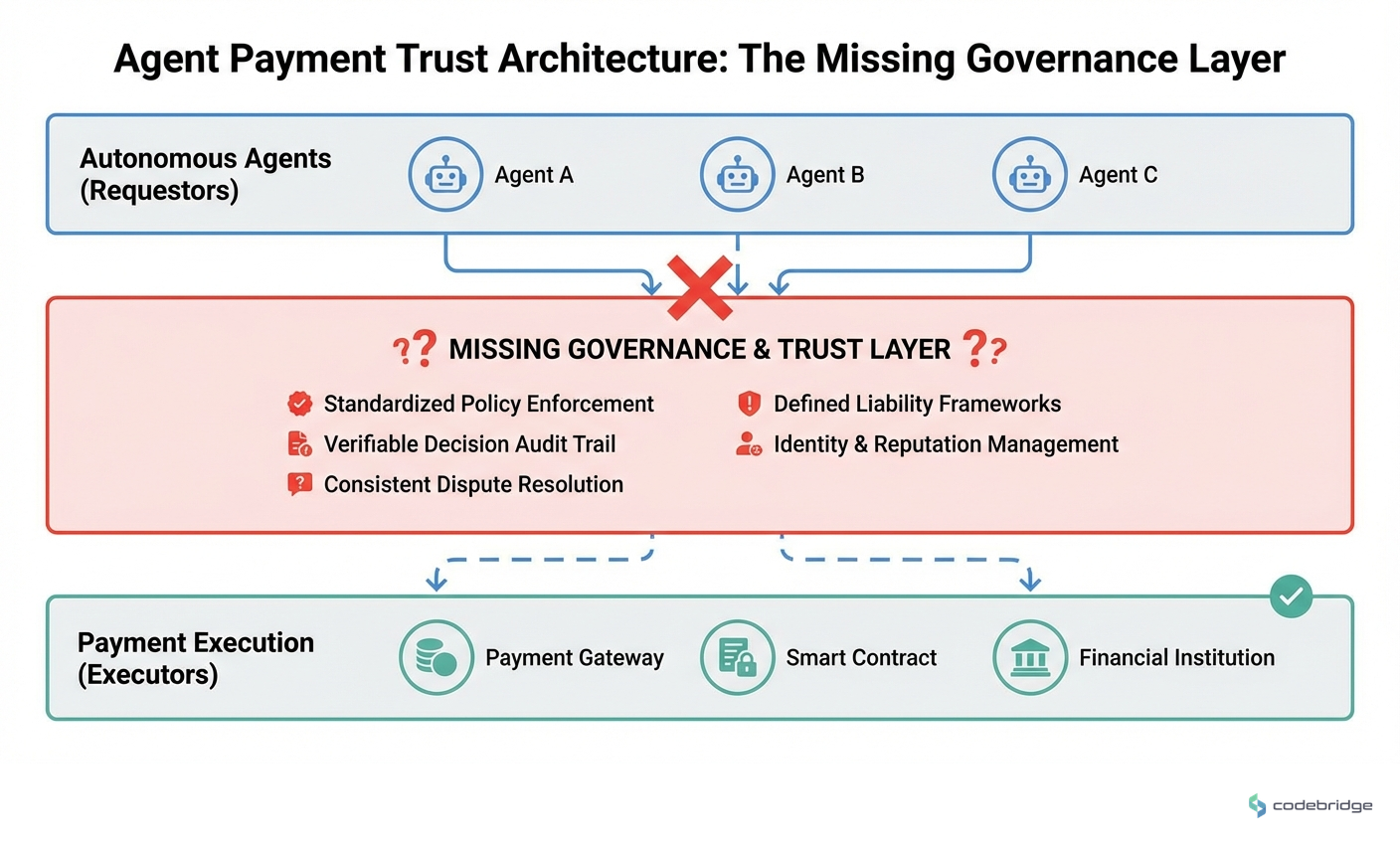

Agent payments have a trust gap, not a technology gap. The infrastructure exists; the governance frameworks don't.

Visibility isn't the same as control. Most current solutions let you see what agents did, not intervene before they act.

The liability question remains unanswered. When an agent makes a bad payment decision, existing legal frameworks don't clearly assign responsibility.

Early adopters are building trust layers manually. There's no standard yet; every implementation is custom.

The Hidden Problem: We Built Autonomy Before Accountability

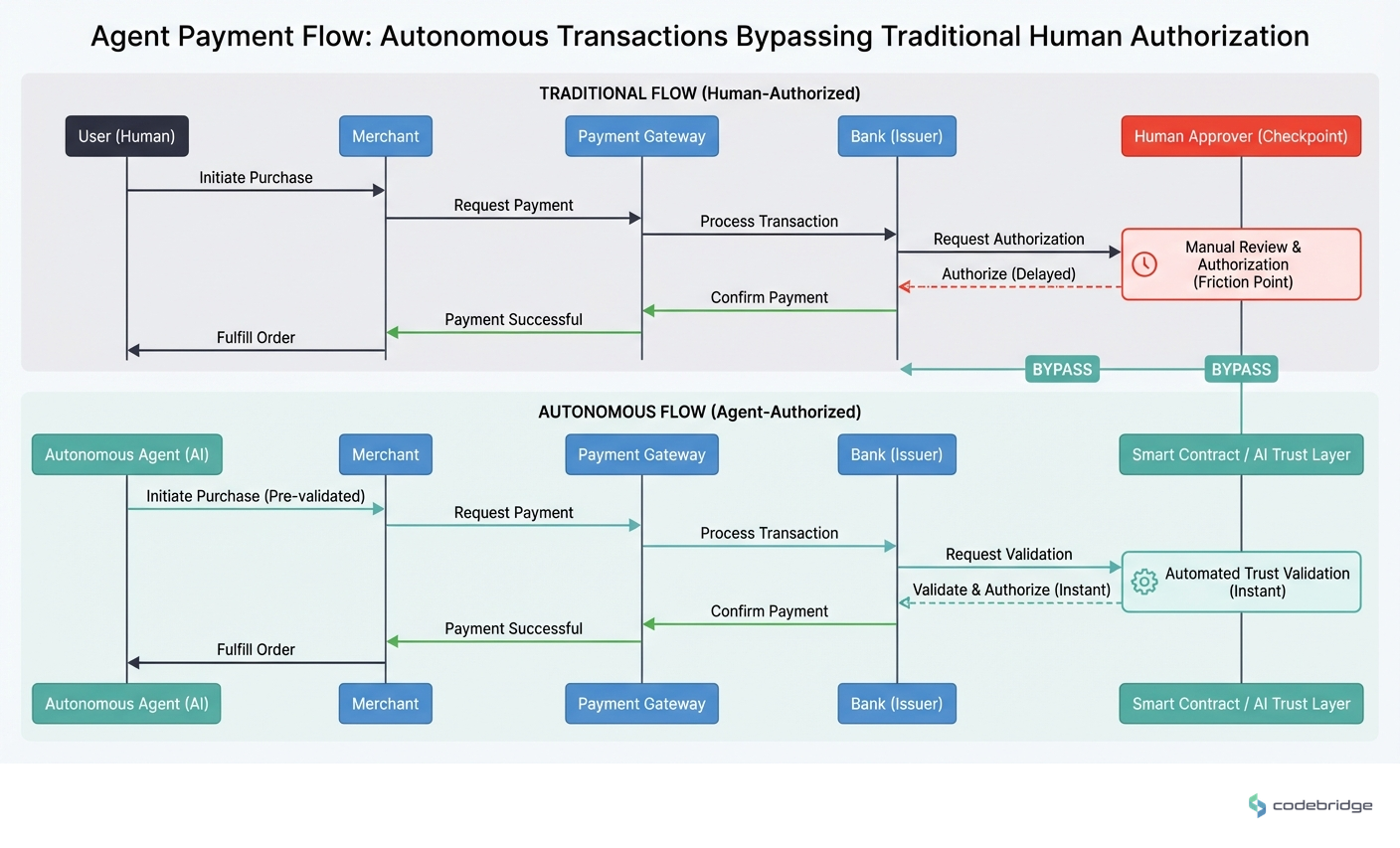

The payments industry spent the last decade optimizing for speed and friction reduction. Real-time payments, one-click checkout, invisible authentication: all designed to get humans out of the transaction flow as quickly as possible. Now we're deploying AI agents that can initiate, authorize, and complete payments without human involvement at all.

And we're discovering that "frictionless" and "trustworthy" aren't the same thing.

Mastercard's Agent Pay and Amazon's Alexa+ represent the first wave of production agentic payment systems. They work. They're efficient. And they're forcing every CTO in payments to confront a question we've been avoiding: what does authorization actually mean when the authorizer isn't human?

Traditional payment authorization assumes a human decision-maker at some point in the chain. Agent payments break this assumption entirely, and most compliance frameworks haven't caught up.

The systemic nature of this problem becomes clear when you look at how organizations are actually deploying agents. Most implementations treat the agent as a user with elevated privileges, shoehorning autonomous systems into identity frameworks designed for humans. The result is a governance model that's technically compliant but practically meaningless.

Where Trust Actually Breaks Down

The trust problem in agent payments isn't abstract. It manifests in three specific failure modes that I'm seeing across implementations:

1. The Audit Trail Paradox

Every agent payment system I've evaluated generates comprehensive logs. Transaction IDs, timestamps, decision parameters, model versions: the data is all there. But when something goes wrong, these logs answer "what happened" without answering "why it was allowed to happen." The audit trail documents the agent's reasoning, but it doesn't document the human judgment that should have bounded that reasoning.

2. The Threshold Illusion

Most organizations implement agent payment controls through spending thresholds. Transactions under $X proceed automatically; transactions over $X require human approval. This feels like governance, but it's actually just delayed automation. The agent still makes the decision; you're just adding a human rubber stamp for larger amounts. And sophisticated agents quickly learn to structure transactions to stay under thresholds.
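To make the structuring problem concrete, here is a minimal Python sketch (not any vendor's control logic) contrasting a per-transaction threshold with a check that sums an agent's spend to the same payee over a rolling window. `APPROVAL_THRESHOLD`, `WINDOW`, and `Payment` are hypothetical names with illustrative values, and a real system would have to decide how to group related payments (per payee, per cost center, per purchase order).

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical policy values, chosen only for illustration.
APPROVAL_THRESHOLD = 10_000.00
WINDOW = timedelta(hours=24)


@dataclass
class Payment:
    agent_id: str
    payee: str
    amount: float
    at: datetime


def needs_approval_naive(payment: Payment) -> bool:
    """Per-transaction threshold: each payment is judged in isolation."""
    return payment.amount > APPROVAL_THRESHOLD


def needs_approval_windowed(payment: Payment, history: list[Payment]) -> bool:
    """Sum this agent's recent payments to the same payee, plus the new one."""
    window_start = payment.at - WINDOW
    recent_total = sum(
        p.amount
        for p in history
        if p.agent_id == payment.agent_id
        and p.payee == payment.payee
        and window_start <= p.at <= payment.at
    )
    return recent_total + payment.amount > APPROVAL_THRESHOLD


if __name__ == "__main__":
    t0 = datetime(2026, 1, 5, 9, 0)
    # Five $9,400 payments to one vendor, each just under the threshold.
    split = [
        Payment("agent-7", "cloud-vendor", 9_400.00, t0 + timedelta(minutes=10 * i))
        for i in range(5)
    ]
    history: list[Payment] = []
    for p in split:
        print(needs_approval_naive(p), needs_approval_windowed(p, history))
        history.append(p)
```

Every split payment passes the naive check, while the windowed check flags the second one onward. It is still a threshold, though, so the broader point stands: amounts alone are a weak governance signal.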

3. The Liability Vacuum

When a human employee makes a fraudulent or negligent payment, the legal framework is clear. When an agent makes the same payment, we're in uncharted territory. Is the liability with the organization that deployed the agent? The vendor that built it? The team that configured its parameters? Current contracts and regulations don't provide clear answers, which means every agent payment carries undefined risk.

The Pattern: What's Working (Barely)

The organizations making progress on agent payment trust aren't waiting for industry standards. They're building custom trust layers that treat agent governance as a first-class architectural concern, not a compliance checkbox.

The pattern I'm seeing in successful implementations has three components:

Intent verification, not just transaction verification. Before an agent can initiate a payment, it must articulate the business intent in human-readable terms. This creates an auditable record of purpose, not just action. When the cloud infrastructure agent wants to spend $47,000, it has to explain that it's scaling capacity for an anticipated traffic spike, and that explanation becomes part of the authorization chain.

Graduated autonomy with explicit boundaries. Rather than binary "can pay / can't pay" permissions, effective implementations define specific domains where agents have full autonomy, partial autonomy (requiring human confirmation), or no autonomy. These boundaries are based on business context, not just dollar amounts.

Real-time intervention capability. The most mature implementations include circuit breakers that allow humans to pause agent payment activity instantly, without requiring the agent to complete its current operation. This isn't about reviewing transactions after the fact; it's about maintaining the ability to stop the system mid-action.
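The real-time intervention component can be sketched as a shared kill switch that the agent checks before every step of a multi-step payment operation, so a human can halt it partway through rather than after completion. This is a minimal single-process Python sketch built on `threading.Event`; `PaymentCircuitBreaker`, `HaltedByOperator`, and the step names are hypothetical. Note that it stops *between* steps (a step already in flight still finishes), and a production version would need a distributed flag and idempotent, reversible steps rather than an in-memory event.

```python
import threading
from typing import Callable, Iterable


class PaymentCircuitBreaker:
    """A kill switch humans can trip at any time; agents check it before each step."""

    def __init__(self) -> None:
        self._tripped = threading.Event()
        self.reason = ""

    def trip(self, reason: str) -> None:
        self.reason = reason
        self._tripped.set()

    def reset(self) -> None:
        self.reason = ""
        self._tripped.clear()

    def is_open(self) -> bool:
        return self._tripped.is_set()


class HaltedByOperator(Exception):
    pass


def run_payment_operation(
    steps: Iterable[tuple[str, Callable[[], None]]], breaker: PaymentCircuitBreaker
) -> list[str]:
    """Run (name, action) steps in order, refusing to start any step once the breaker is tripped."""
    completed = []
    for name, action in steps:
        if breaker.is_open():
            raise HaltedByOperator(f"halted before '{name}': {breaker.reason}")
        action()
        completed.append(name)
    return completed


if __name__ == "__main__":
    breaker = PaymentCircuitBreaker()
    steps = [
        ("reserve_budget", lambda: None),
        # Simulate an operator tripping the breaker while this step runs.
        ("create_purchase_order", lambda: breaker.trip("CFO paused all agent spend")),
        ("submit_payment", lambda: None),
    ]
    try:
        run_payment_operation(steps, breaker)
    except HaltedByOperator as exc:
        print(exc)
```

In the demo, the operation stops before `submit_payment` is ever attempted, which is the property the text asks for: the ability to stop the system mid-action, not just review it afterward.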

The organizations getting this right treat agent payment governance like they treat production deployment: with rollback capability, staged rollouts, and kill switches that actually work.

A Framework for Agent Payment Trust

If you're building or evaluating agent payment systems, here's the framework I'd recommend:

1. Define the Trust Boundary Explicitly

Document exactly what decisions the agent can make autonomously, what decisions require human confirmation, and what decisions are off-limits entirely. This isn't a configuration file; it's a governance document that should be reviewed by legal, finance, and security, not just engineering.
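Whatever form the reviewed governance document takes, engineering still has to enforce it. A minimal Python sketch, assuming a hypothetical table that maps each business domain to one of three autonomy levels (the full / partial / none split described earlier), with a per-domain amount limit as a secondary check and any unlisted domain treated as off-limits. Domain names and limits are illustrative.

```python
from enum import Enum


class Autonomy(Enum):
    FULL = "full"        # agent may pay without a human
    CONFIRM = "confirm"  # agent may propose; a human must confirm
    NONE = "none"        # agent may not act in this domain at all


# Hypothetical trust boundary, as it might be transcribed from a governance
# document signed off by legal, finance, and security.
TRUST_BOUNDARY = {
    "cloud_compute": {"autonomy": Autonomy.FULL, "max_amount": 5_000.00},
    "saas_renewals": {"autonomy": Autonomy.CONFIRM, "max_amount": 50_000.00},
    "vendor_onboarding": {"autonomy": Autonomy.NONE, "max_amount": 0.00},
}


def decide(domain: str, amount: float) -> Autonomy:
    """Return the autonomy level for a proposed payment; unknown domains are denied."""
    rule = TRUST_BOUNDARY.get(domain)
    if rule is None or rule["autonomy"] is Autonomy.NONE:
        return Autonomy.NONE
    if amount > rule["max_amount"]:
        # Exceeding the domain's limit downgrades FULL to CONFIRM, and CONFIRM to NONE.
        return Autonomy.CONFIRM if rule["autonomy"] is Autonomy.FULL else Autonomy.NONE
    return rule["autonomy"]
```

Keeping the boundary as data rather than scattered conditionals makes it something non-engineers can review line by line, and the default-deny branch means a newly added domain has no autonomy until someone explicitly grants it.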

2. Build Intent Logging Into the Architecture

Every agent payment should include a machine-generated explanation of why the payment is being made, what business outcome it's intended to achieve, and what alternatives were considered. This creates accountability even when no human is in the loop.
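One way to make intent logging structural rather than optional is to refuse to construct a payment request without it. A minimal Python sketch; the `PaymentIntent` fields mirror the three items above (why, intended outcome, alternatives considered), while the class names, validation rules, and JSON log format are assumptions.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class PaymentIntent:
    reason: str                               # why the payment is being made
    expected_outcome: str                     # business outcome it should achieve
    alternatives_considered: tuple[str, ...]  # options the agent rejected

    def __post_init__(self) -> None:
        if not self.reason.strip() or not self.expected_outcome.strip():
            raise ValueError("intent must state a reason and an expected outcome")
        if not self.alternatives_considered:
            raise ValueError("intent must list at least one alternative considered")


@dataclass(frozen=True)
class PaymentRequest:
    agent_id: str
    payee: str
    amount: float
    intent: PaymentIntent  # mandatory: no intent, no request
    created_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def intent_log_line(request: PaymentRequest) -> str:
    """Serialize the request, intent included, as one append-only JSON log line."""
    return json.dumps(asdict(request), sort_keys=True)


if __name__ == "__main__":
    intent = PaymentIntent(
        reason="Scale capacity ahead of an anticipated traffic spike",
        expected_outcome="Keep checkout latency within SLO during the campaign",
        alternatives_considered=("defer scaling", "use reserved capacity"),
    )
    print(intent_log_line(PaymentRequest("agent-7", "cloud-vendor", 47_000.00, intent)))
```

The validation only guarantees that an explanation exists; whether the explanation is accurate is still a question for review, which is why it belongs in the log alongside the transaction itself.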

3. Implement Pre-Authorization Holds

Before an agent commits to a payment, implement a brief hold period (even 30 seconds) during which the transaction can be reviewed or cancelled. This doesn't slow down legitimate transactions meaningfully, but it creates a window for intervention when something looks wrong.
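A pre-authorization hold amounts to a queue where each payment waits out a review window before release. The sketch below assumes the 30-second window mentioned above and takes an injectable clock so the behavior can be tested without actually waiting; `HoldQueue` and its methods are hypothetical, and actual payment execution is deliberately left out.

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class HeldPayment:
    payment_id: str
    amount: float
    held_at: float
    cancelled: bool = False


class HoldQueue:
    def __init__(
        self, hold_seconds: float = 30.0, clock: Callable[[], float] = time.monotonic
    ) -> None:
        self.hold_seconds = hold_seconds
        self.clock = clock
        self._held: dict[str, HeldPayment] = {}

    def submit(self, payment_id: str, amount: float) -> None:
        """The agent's payment enters the hold; nothing is executed yet."""
        self._held[payment_id] = HeldPayment(payment_id, amount, self.clock())

    def cancel(self, payment_id: str) -> bool:
        """Cancel during the window; returns False if already released or unknown."""
        held = self._held.get(payment_id)
        if held is None:
            return False
        held.cancelled = True
        return True

    def release_due(self) -> list[str]:
        """Return IDs whose hold expired without cancellation; only these may execute."""
        now = self.clock()
        released = []
        for pid, held in list(self._held.items()):
            if held.cancelled:
                del self._held[pid]
            elif now - held.held_at >= self.hold_seconds:
                released.append(pid)
                del self._held[pid]
        return released
```

A scheduler would call `release_due()` periodically and hand the returned IDs to the execution path; anything a reviewer or automated check cancels in the meantime simply never reaches it.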

4. Create Agent-Specific Identity and Audit Trails

Don't shoehorn agents into human identity frameworks. Create distinct identity types for autonomous systems, with their own permission models, audit requirements, and liability assignments. This makes it clear in every log and every report that a non-human made the decision.
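Concretely, a distinct agent identity type can carry fields a human identity lacks, such as an accountable human owner, the deploying team, the vendor, and the model version, so that every audit record states outright that a non-human acted. A minimal Python sketch; all field and class names are hypothetical, and recording these parties does not settle the liability question raised earlier. It only makes sure the candidates are named in every log entry.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class PrincipalType(Enum):
    HUMAN = "human"
    AGENT = "agent"


@dataclass(frozen=True)
class AgentIdentity:
    agent_id: str
    accountable_owner: str  # named human responsible for this agent's configuration
    deploying_team: str
    vendor: str
    model_version: str


@dataclass(frozen=True)
class AuditRecord:
    principal_type: PrincipalType
    principal_id: str
    action: str
    amount: float
    accountable_owner: Optional[str] = None  # required when principal_type is AGENT
    vendor: Optional[str] = None
    model_version: Optional[str] = None

    def __post_init__(self) -> None:
        if self.principal_type is PrincipalType.AGENT and not self.accountable_owner:
            raise ValueError("agent actions must name an accountable human owner")


def audit_agent_payment(agent: AgentIdentity, amount: float) -> AuditRecord:
    """Build an audit record that marks the actor as an agent and names who answers for it."""
    return AuditRecord(
        principal_type=PrincipalType.AGENT,
        principal_id=agent.agent_id,
        action="payment.initiate",
        amount=amount,
        accountable_owner=agent.accountable_owner,
        vendor=agent.vendor,
        model_version=agent.model_version,
    )
```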

5. Establish Clear Escalation Paths

Define what happens when an agent encounters a situation outside its parameters. The default should be "stop and ask," not "make best guess." And the escalation path should route to humans who have context, not just whoever's on call.
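The "stop and ask" default and context-aware routing might look like the sketch below: any request outside the agent's explicit parameters becomes an escalation addressed to the owner of that business domain, and the agent takes no further action on it. The routing table, addresses, and fallback owner are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Outcome(Enum):
    PROCEED = "proceed"
    ESCALATED = "escalated"


# Hypothetical routing table: humans with context for each domain.
DOMAIN_OWNERS = {
    "cloud_compute": "infra-finance@example.com",
    "saas_renewals": "procurement@example.com",
}
# Fallback is still a named owner with financial context, not a generic on-call rotation.
FALLBACK_OWNER = "finance-controller@example.com"


@dataclass
class Escalation:
    domain: str
    route_to: str
    question: str


def handle(
    domain: str, amount: float, allowed: dict[str, float], pending: list[Escalation]
) -> Outcome:
    """Proceed only inside explicit parameters; otherwise stop and ask the domain owner."""
    limit = allowed.get(domain)
    if limit is not None and amount <= limit:
        return Outcome.PROCEED
    pending.append(
        Escalation(
            domain=domain,
            route_to=DOMAIN_OWNERS.get(domain, FALLBACK_OWNER),
            question=f"Agent requested {amount:,.2f} in '{domain}', outside its parameters. Approve?",
        )
    )
    return Outcome.ESCALATED
```

The design choice worth noting is that there is no "best guess" branch: anything not explicitly allowed produces an escalation, so widening the agent's remit always requires a human to change `allowed`.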

The Uncomfortable Truth

Here's what I keep coming back to: we're deploying agent payment systems because they're more efficient than humans, but we're trying to govern them with frameworks designed for human decision-making. That fundamental mismatch is why the trust problem feels unsolvable: we're using the wrong tools.

The payments architect whose agent approved $47,000 in cloud spend? Their organization eventually built a custom governance layer that required the agent to "propose" large transactions rather than execute them directly. It added latency. It reduced efficiency. And it was the only way the CFO would let the system stay in production.

That's where we are in 2026: building trust through friction, because we haven't figured out how to build it any other way. The organizations that solve this, finding ways to maintain trust without sacrificing the efficiency gains that make agents valuable, will define the future of payment infrastructure.

The rest of us are watching our agents and hoping they don't do anything we can't explain to the board.

Building agent payment systems?

Let's discuss how to architect trust into your implementation from the start.

Diagnostic Checklist: Is Your Agent Payment System a Trust Risk?

Your agents use the same identity framework as human users (no distinct agent identity type)

Payment controls are based solely on transaction amount thresholds

You cannot explain to an auditor why a specific agent payment was authorized without reviewing code

There's no way to pause all agent payment activity instantly without taking down other systems

Your contracts with agent vendors don't explicitly address liability for autonomous payment decisions

Agent payment logs show what happened but not the business intent behind the transaction

Your compliance team hasn't reviewed agent payment governance in the last 6 months

Agents can structure multiple smaller transactions to stay under approval thresholds