Software engineering is changing more rapidly than at any time since the rise of cloud computing and DevOps. For decades, the Software Development Lifecycle (SDLC) has relied on an assumption of absolute deterministic control, where requirements are translated into static logic that produces predictable, verifiable outputs.

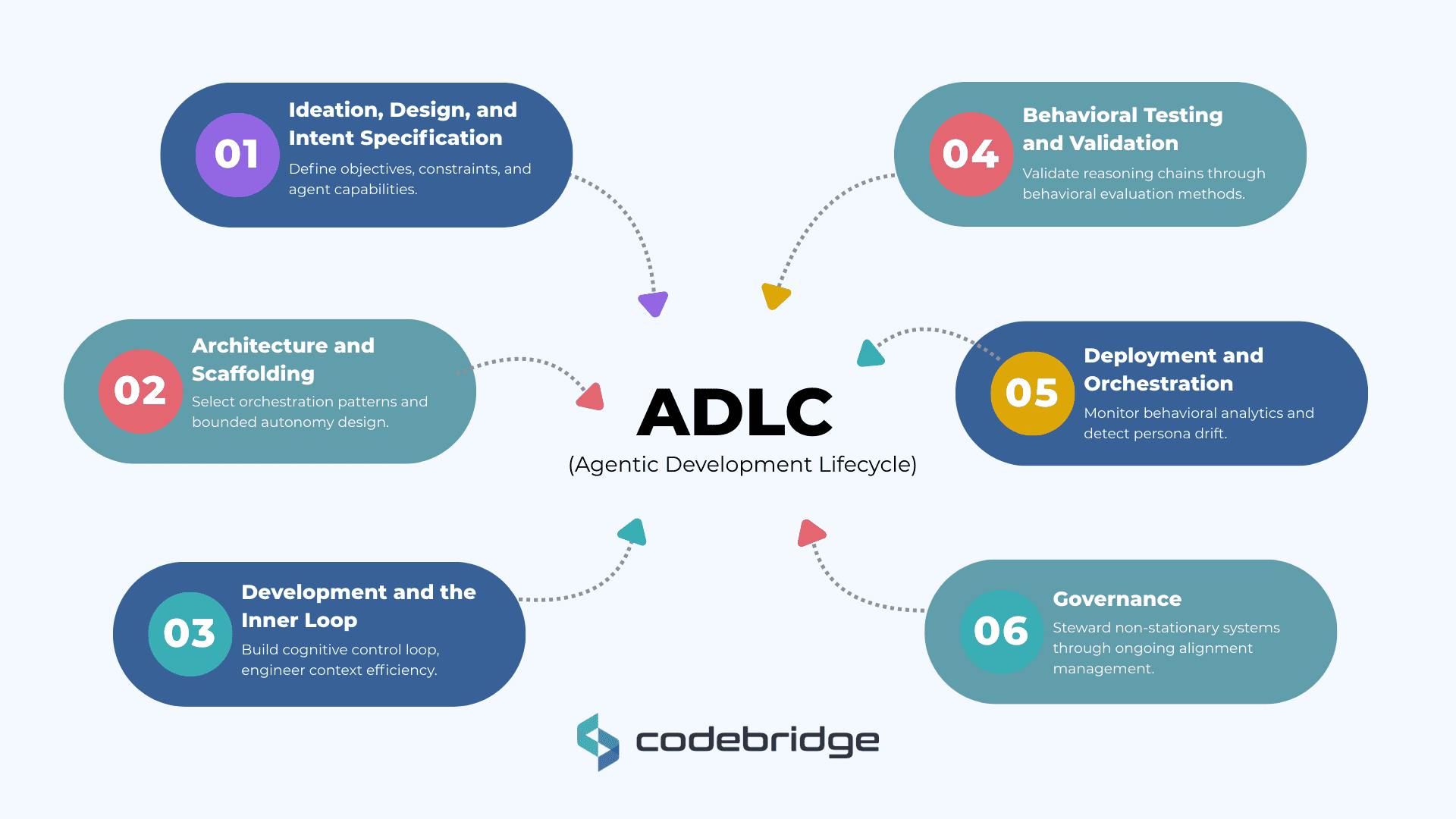

The arrival of agentic artificial intelligence upends this foundation by introducing probabilistic systems capable of reasoning, adaptation, and autonomous execution. As these systems move from reactive "thinkers" to proactive "doers," the traditional SDLC is proving insufficient to manage their inherent non-determinism and emergent behaviors. Successfully operationalizing agentic AI requires an evolution to the Agentic Development Lifecycle (ADLC), a framework that defines intent, goals, constraints, and safety limits instead of relying solely on deterministic code paths.

The stakes for this transition are immediate; the market for AI agents is forecast to grow at a 45% CAGR through 2030. However, a significant gap exists between lab-based prototypes and production-ready systems. Most organizations currently treat agents as advanced chat assistants rather than as complex, probabilistic components that require rigorous orchestration and behavioral governance. This playbook outlines the technical and architectural realities required to bridge that gap, focusing on the five distinct phases of the ADLC.

The Agentic Development Lifecycle (ADLC) Framework

The Agentic Development Lifecycle (ADLC) is a specialized methodology for managing the unique complexities of autonomous agents that learn and act in dynamic environments. Unlike the SDLC, the ADLC assumes agents optimize toward goals rather than execute fixed instructions.

Phase 1: Ideation, Design, and Intent Specification

The foundational stage of the ADLC requires a shift from writing rigid functional specifications to designing "intent". This involves articulating the high-level objective (the "what" and "why") while defining the operational constraints and policies (the guardrails) that govern the agent’s autonomy.

The Capability Matrix drives strategic success in this phase. This tool allows engineering leaders to systematically isolate which workflow steps require non-deterministic LLM reasoning, such as interpreting customer intent, and which must remain deterministic, rule-based logic, such as financial calculations or SLA timer initialization. Mature teams avoid the anti-pattern of "making everything agentic," as LLM reasoning comes at the expense of simplicity and performance.

Furthermore, designers must establish the agent’s Persona and Context Mapping. This includes defining the agent's identity, tone, and the specific scope of its knowledge base and conversational memory. Tools like GitHub’s "Spec Kit" are increasingly used to treat these specifications as executable artifacts, generating the task breakdowns that steer an agent toward its goal.

Deliverables:

- Intent Specification (objective: the “what/why”)

- Operational Constraints & Guardrails (policies governing autonomy)

- Capability Matrix (explicit split between non-deterministic LLM reasoning and deterministic logic)

- Persona & Context Mapping (identity/tone + scope of knowledge base and conversational memory)

- Executable Specifications / Task Breakdown Artifacts (e.g., Spec Kit–style specs treated as executable artifacts)

Phase 2: Architecture and Scaffolding

Architecture in the agentic era is reframed as "scaffolding" – providing a bounded space where an agent can navigate freely rather than scripting every possible decision path. A primary architectural decision is the selection of the orchestration pattern, which dictates how the system manages non-determinism at scale.

- Single-Agent Systems: These utilize one LLM to orchestrate a coordinated flow, making them easier to debug and ideal for cohesive, bounded domains. However, they struggle as toolsets grow, often leading to "context collapse" or hallucinations.

- Multi-Agent Systems (MAS): These decompose large objectives into sub-tasks assigned to specialized agents. The Coordinator Pattern uses a central supervisor to dynamically route tasks to specialized workers (e.g., a "Researcher" vs. a "Coder"), keeping individual context windows clean and focused. Specifically, hierarchical orchestration (the Coordinator Pattern), which uses a central supervisor to route tasks to specialized workers, has been shown to achieve up to 95.3% accuracy on complex benchmarks, consistently outperforming flat-agent architectures

- Review and Critique Pattern: This involves an adversarial loop where one agent generates an output and a second "critic" agent audits it for security or quality. This is essential for high-stakes tasks where manual verification of every step is impractical; studies show that AI-assisted code reviews can increase quality improvements to 81%, with nearly 39% of agent comments leading to critical code fixes.

Interoperability is standardized through protocols like the Model Context Protocol (MCP), which decouples the reasoning engine from its toolset, allowing for modular updates and preventing tool divergence.

Deliverables:

- Selected Orchestration Pattern (Single-Agent vs MAS + Coordinator Pattern as applicable)

- Review & Critique Loop Design (where adversarial auditing is required)

- Tool/Interoperability Contract via MCP (decoupling reasoning engine from toolset for modular updates)

- Scaffold Boundaries (the “bounded space” design that enables autonomy without scripting every path)

Phase 3: Development and the Inner Loop

The development phase focuses on the hands-on construction of the Cognitive Control Loop: a continuous cycle of perception, reasoning, action, and observation. Unlike traditional software, where bugs are fixed, agentic development focuses on managing variance.

A critical competency in this phase is Context Engineering. This involves the meticulous selection and structuring of information for the context window to maximize token efficiency and reasoning accuracy. To manage behavioral drift, mature organizations treat prompts, tool manifests, and memory schemas as version-controlled Infrastructure-as-Code (IaC). This ensures that any modification to the "agent’s brain" is subject to formal change approval and semantic diffing.

Deliverables:

- Implemented Cognitive Control Loop (perception → reasoning → action → observation)

- Context Engineering Assets (structured, token-efficient context window strategy)

- Version-Controlled “Agent Brain” as IaC (prompts, tool manifests, memory schemas under formal change approval + semantic diffing)

Phase 4: Behavioral Testing and Validation

Traditional unit testing is necessary for the deterministic components of an agent, but it is insufficient for probabilistic reasoning. Testing must evolve into Behavioral Evaluation.

- Golden Trajectories: These are validated interaction sequences that capture complete reasoning chains and tool invocations, serving as regression baselines.

- LLM-as-a-Judge: Advanced models are used to grade the performance of agent outputs against specific rubrics for accuracy, tone, and safety.

- Simulation and Sandboxing: To avoid risking production infrastructure, agents must be executed in isolated environments, such as MicroVMs or Docker Sandboxes, where they can run code and install packages securely.

Deliverables:

- Golden Trajectories (validated interaction sequences including reasoning chains + tool invocations)

- LLM-as-a-Judge Evaluation Rubrics & Workflows (grading for accuracy, tone, safety)

- Simulation/Sandbox Environments (MicroVMs or Docker Sandboxes for isolated execution)

- Deterministic Unit Test Suite (for deterministic components)

Phase 5: Deployment and Continuous Orchestration

Deployment marks the start of continuous monitoring and tuning. Monitoring shifts from infrastructure metrics like latency to Behavioral Analytics, tracking goal completion rates, and escalation quality. This shift is a technical necessity born from the inherent instability of LLMs: research indicates that agents can exhibit up to a 63% coefficient of variation in execution paths for identical inputs.

Mature teams implement Drift Detection to identify when agent responses shift over time due to model updates or subtle changes in the environment. Techniques like the "Spirit Profile" score agent responses against core values to detect deviation from the baseline persona. If significant drift is detected, "kill switches" or automatic rollbacks to previous version-controlled prompt sets are triggered.

Deliverables:

- Behavioral Analytics Monitoring (goal completion rates, escalation quality)

- Drift Detection Mechanisms (including persona-alignment scoring such as “Spirit Profile”)

- Kill Switches / Automatic Rollback Paths (to revert to previous version-controlled prompt sets)

- Outer Loop Operating Process (monitoring and tuning as ongoing lifecycle work)

Phase 6: Continuous Learning and Governance – Stewarding Non-Stationary Systems

Traditional software is largely stationary: once deployed, its logic stays stable unless deliberately changed. Agentic systems are different. Their behavior emerges from probabilistic reasoning, shifting context windows, and evolving external data. As a result, deployment marks the start of the lifecycle. This phase centers on long-term stewardship to ensure agents remain accurate, cost-effective, and aligned as models, data, and user behavior evolve.

Core Activities: Managing the Post-Deployment “Outer Loop”

Operationalizing an agent requires governance beyond standard infrastructure monitoring. Because outputs vary and meaning can drift, teams must actively manage performance, cost, and alignment.

- Operations and Cost Monitoring: Agentic systems risk “Denial of Wallet” scenarios, where recursive reasoning loops repeatedly call expensive tools without resolving tasks. Leaders must monitor real-time token usage and “Math of Ruin” metrics to control financial exposure.

- Feedback Loop Management: Simple thumbs-up/down signals are insufficient. Organizations need structured behavioral analytics, including detailed interaction logs, to detect conversational dead ends and prioritize refinements. “LLM-as-a-Judge” workflows – where stronger models audit outputs against accuracy, safety, and alignment rubrics – can systematically improve quality.

- Model Versioning and Compatibility: Third-party model updates can introduce silent regressions. Even small weight changes may degrade reasoning or tool use without producing errors. Teams can mitigate this through strict version pinning and regression testing before adopting updates.

- Behavior Alignment and Drift Detection: Subtle, compounding shifts in context or model behavior can alter decisions over time. Techniques such as scoring responses against core value profiles help detect deviations from the intended persona.

- Knowledge Base Refreshes: Agents relying on retrieval-augmented generation (RAG) must regularly re-index and ingest updated data. Without this, stale information can produce confident but outdated answers.

Deliverables

This phase produces auditable artifacts for optimization and compliance:

- Ongoing quality and cost reports tracking supervision burden and efficiency.

- Evidence-based model upgrade decisions grounded in performance-to-cost analysis.

- Updated guardrails and policy controls to address new risks.

Traditional SDLC vs. Agentic Development Lifecycle

Governance and Human-in-the-Loop (HITL)

As agents gain autonomy to interact with live ecosystems, governance becomes a primary architecture. To manage organizational risk, executives must move beyond vague supervision to explicit autonomy levels:

- Human-in-the-Loop (HITL): Requires manual approval for high-stakes, irreversible actions, such as production database writes or financial transfers. This is critical for maintaining fiduciary accountability and preventing the "Math of Ruin".

- Human-on-the-Loop (HOTL): Humans monitor real-time autonomous execution and intervene only when confidence scores drop or anomalies occur. This allows for operational scaling while maintaining a "guardian" presence.

- Human-in-Command: The AI serves as a strategic advisor, but the human operator retains the final decision-making power, preserving organizational agency.

To satisfy emerging regulations like the EU AI Act, systems must maintain Immutable Audit Trails. These serve as a "black box" flight recorder, providing the post-hoc explainability required to reconstruct why a specific action was taken. Without this, organizations face unmanageable legal liability.

Measuring Success: Metrics for Agentic Systems

Success in agentic systems is a measure of behavior, not just binary completion. Traditional DORA metrics, Deployment Frequency and Lead Time, must be segmented into "agent-involved" and "non-agent" pipelines to isolate the true impact of these systems. However, these lagging indicators often fail to capture the operational reality of probabilistic software.

To manage the risk of "capability chaos," mature teams prioritize three behavioral metrics:

- Acceptance Rate: This tracks the percentage of agent-suggested changes merged without modification. Low rates signal "review cruft," where the human effort required to vet or fix subpar AI output outweighs the initial speed of generation.

- Escalation Quality: This evaluates whether the agent correctly identifies high-risk ambiguity or "dead ends" instead of hallucinating a solution. It is the primary metric for assessing the system's calibration to organizational guardrails.

- Supervision Burden: Measuring the absolute frequency of human interventions required to keep an agent on track.

The strategic trade-off is clear: high-velocity code generation is a liability if it creates a bottleneck in human review. Only by establishing pre-adoption baselines can leadership verify if agents are accelerating the lifecycle or merely shifting technical debt to human reviewers.

Where Teams Get Stuck: The Prototype-to-Production Gap

The transition from a functioning demo to a production system reveals several common failure modes.

1. The Prototype Illusion

Agents often perform exceptionally well in controlled, lab-based environments but fail when exposed to real-world ambiguity. Teams frequently confuse "stochastic parroting", where a model predicts the next likely word, with actual reasoning. This leads to a precipitous drop in reliability when the agent faces novel variables not present in its training data or initial evaluation set.

In practice, this gap is measurable. For example, in the original SWE-bench study introduced by researchers at Princeton University, evaluating models on 2,294 real GitHub issues across 12 production Python repositories, the best-performing model at the time, Anthropic’s Claude 2, successfully resolved only 1.96% of issues end-to-end. These were not synthetic puzzles but real bugs requiring multi-file edits, dependency reasoning, and execution validation.

The result underscores that the performance, which appears impressive in sandboxed benchmarks, can collapse when exposed to messy, stateful, real-world systems.

2. Non-Determinism and Drift

Unlike traditional software, agents do not always produce the same output for the same input. Small, compounding changes in the context window or updates to the underlying model can result in "Behavioral Drift". If guardrails are stateless and fail to account for historical baselines, these gradual shifts in decision-making can go undetected until a system-level failure occurs.

3. Context Fragmentation and Overload

Single agents often struggle as their toolsets and context windows grow, leading to "Context Collapse". When the reasoning load is too high for a single-threaded execution, agents lose track of dependencies or hallucinate tool parameters. Mature teams solve this by moving to MAS architectures where tasks are decomposed and context is isolated per agent.

4. The Capability-Chaos Cycle

Deploying agents without rigorous governance often leads to a spike in code volume but a degradation in overall quality. This creates massive review overhead, or "review cruft," where human developers spend more time vetting low-quality AI output than they would have spent writing the code manually.

5. Denial of Wallet

Probabilistic agents can enter recursive reasoning loops, such as repeatedly calling search tools to resolve an unanswerable prompt. Without hard caps on iterations, these "infinite loops" can drain API budgets rapidly. A loop of 10 cycles per minute with a large context can cost several dollars per instance, creating a significant financial risk.

What Mature Teams Do Differently

Successful organizations do not treat agents as "magic black boxes"; they treat them as probabilistic components requiring rigorous engineering discipline.

A. Architecture: The Capability Matrix and "Zones of Intent"

Mature teams define Zones of Intent, bounded spaces where agents have autonomy to determine the "how" within strict guardrails. They use the Capability Matrix to strictly separate deterministic tasks (rules, IDs, SLAs) from non-deterministic tasks (reasoning, intent classification).

Rule: If a task has zero tolerance for ambiguity, such as financial calculations or security policy enforcement, it must remain a deterministic function, not an agentic one.

B. Engineering: Context as a First-Class Citizen

Context (history, prompts, and knowledge) is treated as a managed asset, not just a string of text. Mature teams implement Dynamic Context Selection to filter and compress data before it hits the LLM, preventing overflow and reducing inference costs. They adopt the Model Context Protocol (MCP) to standardize how agents connect to data sources, ensuring that the reasoning engine is decoupled from the toolset for modular updates.

C. Governance: Infrastructure-as-Code for Prompts

Prompts and tool manifests are treated as Infrastructure-as-Code (IaC). They are stored in Git, semantically diffed, and subject to formal change approval processes. Organizations use Version Pinning to lock agent behaviors and prevent sudden degradation when underlying models are updated by providers.

D. Human-in-the-Loop by Design

Mature teams design explicit "circuit breakers" and human checkpoints for all high-stakes actions. As trust in a specific agentic workflow matures, they shift from "human-in-the-loop" (direct intervention) to "human-on-the-loop" (supervisory control). They also designate "Adoption Owners" to run pairing and calibration sessions, ensuring that human reviewers have a shared standard of what constitutes "good" AI-influenced code.

Conclusion

The transition to agentic AI requires a fundamental managerial shift. Engineers must evolve from writing deterministic functions to shaping frameworks for intelligent behavior. The focus of software engineering is moving from defining the "How" to defining the "What" and the "Why".

For leadership teams, the Agentic Development Lifecycle provides the structure required to manage emergence without surrendering accountability. Organizations that master the ADLC – viewing agents as semi-autonomous teammates requiring rigorous governance and behavioral observability – will gain a decisive strategic advantage in the era of probabilistic computing. Success is not determined by the intelligence of the model used, but by the robustness of the control structures built around it.

%20(1).jpg)

%20(1)%20(1)%20(1).jpg)