Many technology leaders adding agentic AI to their SaaS products are making the same structural mistake: they treat agents as a feature rather than as an architectural layer that must be designed, governed, and constrained. This mistake doesn’t show up in demos. It shows up later, in runaway costs and the quiet erosion of system integrity.

The pressure to “add AI agents” is real, especially for B2B SaaS executives navigating competitive expectations and board-level urgency. But agents are not chatbots with better prompts, nor are they a cosmetic upgrade to existing automation. They introduce non-deterministic behavior into systems built to be deterministic. That is not a product decision. It is an architectural one.

Gartner projects that by 2028, 33% of enterprise software applications will include agentic AI. It’s a dramatic increase from just a few years ago. However, what matters more than the adoption curve is how those agents are integrated. Organizations that rebuild core systems around probabilistic models will inherit unacceptable risk. Those that simply layer agents directly onto internal APIs will create fragile, ungovernable systems.

The only viable path for established B2B SaaS platforms is to treat agentic AI as a distinct architectural layer, one that sits above the system of record and translates intent into controlled action. This layered approach is not conservative; it is how serious software organizations scale autonomy without losing control.

Why a Layer, Not a Rebuild?

The primary argument for an agentic layer is the preservation of the "system of record". B2B SaaS products are built on hard-won stability, deterministic logic, and strict data contracts. Rebuilding these core systems to accommodate probabilistic AI models is not only prohibitively expensive but also poses an existential risk to system integrity. An additive architecture allows the core transactional systems to remain stable while experimenting with autonomous functionality at the edges.

Early adopters have demonstrated that contained use cases are far more successful than "big-bang" rebuild approaches. By treating the agentic layer as a sophisticated macro engine or orchestration service, organizations can avoid destabilizing the underlying system of record while still achieving a "self-driving" paradigm in specific workflows.

Where Agentic Layers Actually Work Today

The most successful implementations of agentic layers in B2B SaaS are currently found in back-office operations and decision support. Examples include:

- Procurement and Supply Chain: Automating inventory monitoring and coordination across thousands of suppliers, where agents handle the "boring" manual work of quote solicitation and follow-up.

- Document and Knowledge Management: Assembling complex RFP responses by retrieving and synthesizing internal policy and technical data within a "tightly locked down" domain.

- Customer Service: Using read-only agents that assist users in navigating massive databases (e.g., business records) without the permission to add or delete data in the primary system of record.

Deloitte's State of AI in the Enterprise confirms this pragmatic shift: while roughly 23% of companies report using AI agents moderately today, 74% project widespread use within two years. However, a critical maturity gap exists, as only 21% of these organizations have a mature governance model in place.

Where They Don’t (Yet)

Current technical limitations and risk profiles preclude agentic autonomy in several high-stakes areas. "AI that does everything" initiatives are frequently getting shelved due to the "AutoGPT lesson": broad goals without tight scoping inevitably lead to hallucination, mis-prioritization, and drift. Front-office finance (e.g., autonomous trading or lending decisions) and direct patient care in healthcare remain under strict human oversight due to the potential for systemic risk and the legal ramifications of non-deterministic errors.

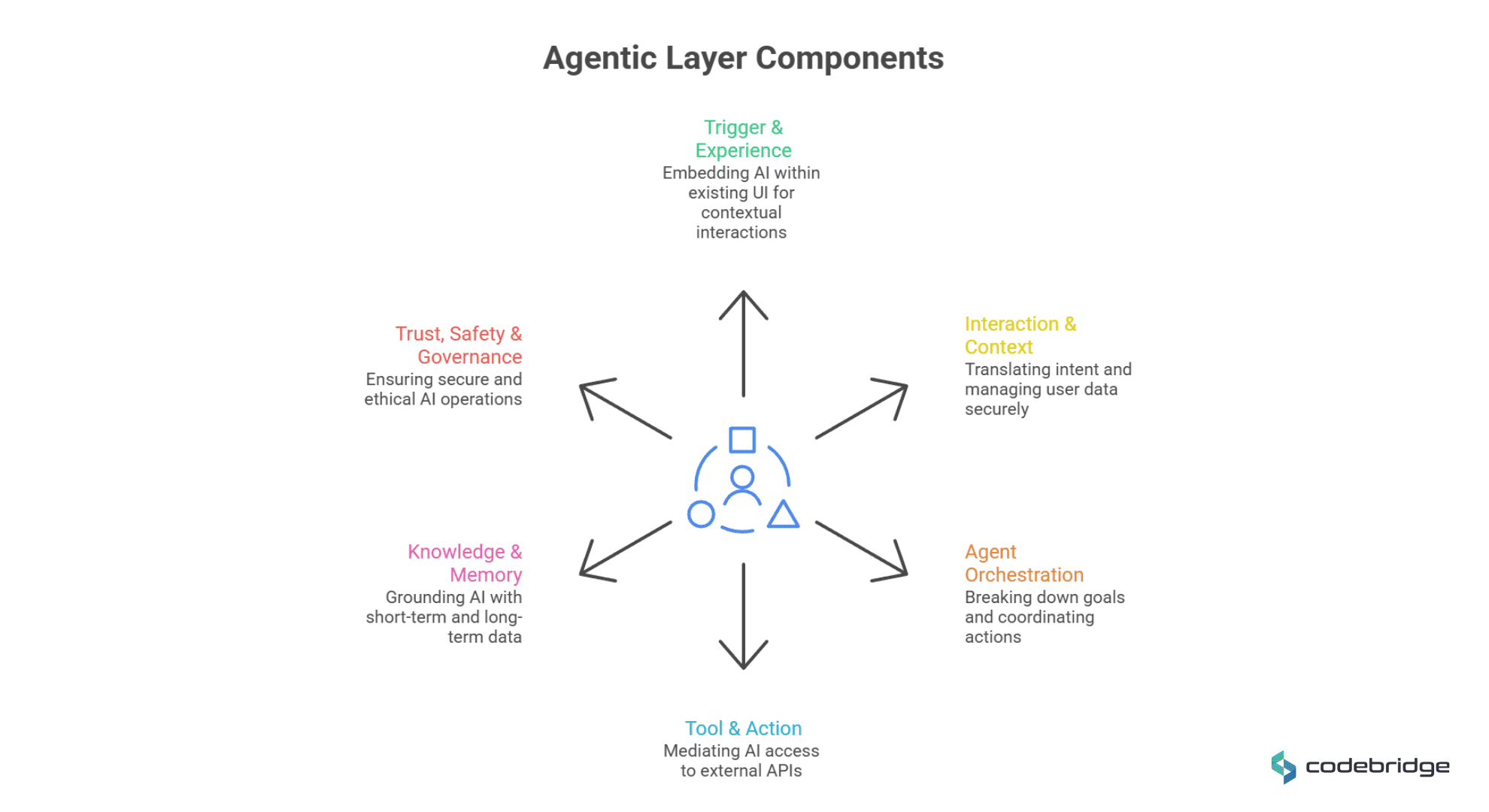

Reference Architecture: Components of an Agentic Layer

Designing a resilient agentic layer requires decomposing the system into modular, interfacing components that sit above the traditional application and data layers.

1. Trigger & Experience Layer

Instead of standalone chatbots that pull users away from their work, the trigger layer must be embedded within the existing UI. Natural language entry points should be tied to the user’s current context, such as an "Ask AI" button on an invoice screen. This creates an action-oriented UX where the agent proposes a complete plan with previews for the user to approve.

2. Interaction & Context Layer

This layer is responsible for translating free-form intent into structured input while ensuring that the agent is grounded in reality. It must assemble relevant context, including user identity, session state, and the permissions that apply to the request. Permission-aware prompt building is the critical security boundary here; it ensures the agent only "sees" data the user is authorized to access, preventing accidental data leaks and responses built on information the user should never have seen.
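A minimal sketch of permission-aware prompt building follows. The `Record` and `User` types, the role names, and the `build_prompt` function are all hypothetical; the point is only that filtering happens before any text reaches the model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    record_id: str
    tenant_id: str
    required_role: str  # role needed to read this record (assumed model)
    text: str


@dataclass(frozen=True)
class User:
    user_id: str
    tenant_id: str
    roles: frozenset


def build_prompt(user: User, question: str, candidates: list) -> str:
    """Assemble a prompt from only the records this user may read."""
    visible = [
        r for r in candidates
        if r.tenant_id == user.tenant_id and r.required_role in user.roles
    ]
    context = "\n".join(f"[{r.record_id}] {r.text}" for r in visible)
    return f"Context:\n{context}\n\nQuestion: {question}"
```

Because unauthorized records are dropped before the prompt is built, the model cannot leak them no matter how the question is phrased.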

3. Agent Orchestration Layer ("The Brain")

The orchestration layer breaks high-level goals into discrete steps.

- Planner/Reasoner: Determines the necessary sequence of actions.

- Executor: Coordinates tool invocation.

- Critic/Validator: Performs pre-execution sanity checks to verify that proposed actions align with business intent and safety rules.

Architecturally, the orchestration layer may be implemented as a state machine or directed graph to make the decision process explicit and auditable.
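One way to picture the planner, critic, and executor as an explicit state machine is sketched below. The state names and the `run_agent` function are illustrative assumptions, and the three roles are passed in as plain callables rather than real model calls.

```python
from enum import Enum


class State(Enum):
    PLAN = "plan"
    VALIDATE = "validate"
    EXECUTE = "execute"
    DONE = "done"
    REJECTED = "rejected"


def run_agent(goal, planner, critic, executor):
    """Drive one goal through explicit states and record the path taken."""
    state, plan, results, trace = State.PLAN, [], [], []
    while state not in (State.DONE, State.REJECTED):
        trace.append(state.value)
        if state is State.PLAN:
            plan = planner(goal)
            state = State.VALIDATE
        elif state is State.VALIDATE:
            # Every step must pass the critic before anything executes.
            approved = all(critic(step) for step in plan)
            state = State.EXECUTE if approved else State.REJECTED
        elif state is State.EXECUTE:
            results = [executor(step) for step in plan]
            state = State.DONE
    trace.append(state.value)
    return state, results, trace
```

The returned `trace` is the auditable part: it records which states the run passed through, including rejections that never reached execution.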

4. Tool & Action Layer

Tools should be viewed as "public APIs for the AI," not as uncontrolled hacks. A mediator pattern, or tooling gateway, is essential to prevent the Large Language Model (LLM) from directly hitting microservice endpoints. This gateway validates inputs, checks permissions, and throttles calls, ensuring the agent remains within defined operational boundaries.
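The gateway's three duties (validate inputs, check permissions, throttle calls) can be sketched in a few lines. `ToolGateway`, its method names, and the sliding-window rate limit are assumptions made for illustration, not a reference to any particular product.

```python
import time
from collections import deque


class ToolGateway:
    """Hypothetical mediator between the model and internal services."""

    def __init__(self, max_calls, per_seconds, clock=time.monotonic):
        self._tools = {}
        self._calls = deque()  # timestamps of recent accepted calls
        self._max_calls = max_calls
        self._per_seconds = per_seconds
        self._clock = clock

    def register(self, name, handler, required_fields, required_permission):
        self._tools[name] = (handler, required_fields, required_permission)

    def invoke(self, name, args, permissions):
        if name not in self._tools:
            raise KeyError(f"unknown tool: {name}")
        handler, required_fields, required_permission = self._tools[name]
        if required_permission not in permissions:
            raise PermissionError(f"missing permission: {required_permission}")
        unexpected = set(args) - set(required_fields)
        if unexpected:
            raise ValueError(f"unexpected fields: {sorted(unexpected)}")
        for field, expected_type in required_fields.items():
            if not isinstance(args.get(field), expected_type):
                raise ValueError(f"bad or missing field: {field}")
        # Sliding-window throttle: forget calls older than the window.
        now = self._clock()
        while self._calls and now - self._calls[0] >= self._per_seconds:
            self._calls.popleft()
        if len(self._calls) >= self._max_calls:
            raise RuntimeError("rate limit exceeded")
        self._calls.append(now)
        return handler(**args)
```

The handler is only reached after all three checks pass, so a malformed or unauthorized request never touches the underlying service.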

5. Knowledge & Memory Layer

This layer utilizes Retrieval-Augmented Generation (RAG) to ground agents in domain-specific knowledge. Architecture must distinguish between:

- Short-term memory: Session-scoped conversational context.

- Long-term memory: Persistence of organizational rules, learned preferences, and historical decisions.

Maintaining this separation is vital for governance, as it prevents the system of record from being corrupted by ephemeral state changes.

6. Trust, Safety & Governance Layer

Governance is the "non-negotiable" foundation for scaling agentic operations. This layer includes automated safeguards like rate limits and blast-radius controls to mitigate the impact of an agent gone awry. Furthermore, each agent should be treated as a unique identity within the IAM (Identity & Access Management) system, requiring its own authentication and authorization akin to a human user.

Integration Patterns: Connecting Agents to Existing Systems

Choosing the right integration pattern is a trade-off between implementation speed and system reliability.

Event-Driven vs. Request/Response

Event-driven orchestration is often superior for SaaS platforms already utilizing event architectures. By having an agent publish an event (e.g., InvoiceApproved) that downstream services subscribe to, you achieve clean decoupling and align with existing infrastructure. Conversely, request/response via direct API calls is simpler to debug and offers clearer failure modes for synchronous tasks, though it risks tight coupling.
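To illustrate the decoupling, here is a toy in-process event bus. It is a stand-in for whatever broker a platform already runs; `EventBus` and the handler names in the test are hypothetical, and only the `InvoiceApproved` event name comes from the text.

```python
class EventBus:
    """Toy in-process publish/subscribe bus (stand-in for a real broker)."""

    def __init__(self):
        self._subscribers = {}

    def subscribe(self, event_type, handler):
        self._subscribers.setdefault(event_type, []).append(handler)

    def publish(self, event_type, payload):
        # The publisher does not know who is listening.
        for handler in self._subscribers.get(event_type, []):
            handler(payload)


def agent_approve_invoice(bus, invoice_id):
    """The agent's only side effect is announcing what happened."""
    bus.publish("InvoiceApproved", {"invoice_id": invoice_id})
```

Downstream services such as a ledger or a notifier subscribe to `InvoiceApproved` independently; the agent needs no knowledge of either.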

Mediator vs. Direct Tool Invocation

A mediator pattern is strongly recommended over direct invocation. A "tooling gateway" validates AI-generated inputs before they reach internal APIs, protecting against data corruption and limiting the damage a prompt injection can do. Direct invocation, while faster to prototype, lacks this validation layer and can lead to "unintended actions" if the LLM produces malformed requests.

API-First Thinking

Technology leaders must treat agents like any other external integration. This means leveraging existing API security, rate limits, and authentication. Designing tools at a high level of abstraction, such as SubmitExpenseReport instead of low-level SQL commands, encapsulates business rules and ensures the agent can’t bypass existing logic.
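A sketch of what a high-level tool might look like is shown below. The `SubmitExpenseReport` name is from the text; the function signature, the category list, and the approval threshold are invented policy values for illustration only.

```python
from dataclasses import dataclass

# Hypothetical policy values, chosen only for illustration.
ALLOWED_CATEGORIES = {"travel", "meals", "software"}
AUTO_APPROVE_LIMIT_CENTS = 50_000


@dataclass(frozen=True)
class ExpenseLine:
    category: str
    amount_cents: int


def submit_expense_report(employee_id: str, lines: list) -> dict:
    """High-level tool: business rules live here, not in the prompt."""
    if not lines:
        raise ValueError("report has no lines")
    for line in lines:
        if line.category not in ALLOWED_CATEGORIES:
            raise ValueError(f"category not allowed: {line.category}")
        if line.amount_cents <= 0:
            raise ValueError("amounts must be positive")
    total = sum(line.amount_cents for line in lines)
    if total <= AUTO_APPROVE_LIMIT_CENTS:
        status = "submitted"
    else:
        status = "needs_manager_approval"
    return {"employee_id": employee_id, "total_cents": total, "status": status}
```

The agent can only express "submit this report"; it has no way to write a row that skips category checks or the approval threshold.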

Safely Exposing Internal Capabilities

The engineering challenge lies in providing the agent enough capability to be useful without compromising security.

Organizations should adopt an allowlist approach using governed catalogs: only explicitly approved tools are invocable by the agent. This forces a rigorous review of each capability: "What is the worst-case scenario if the AI misuses this specific tool?"
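A governed catalog can be as simple as a registry that refuses to expose a tool until the worst-case question has been answered in writing. The `ToolCatalog` class and its fields are hypothetical.

```python
class ToolCatalog:
    """Hypothetical governed catalog: a tool is invocable only once approved."""

    def __init__(self):
        self._approved = {}

    def approve(self, name, handler, worst_case, reviewer):
        # Approval requires a documented answer to the worst-case question.
        if not worst_case.strip():
            raise ValueError("a worst-case misuse scenario must be documented")
        self._approved[name] = {
            "handler": handler,
            "worst_case": worst_case,
            "reviewer": reviewer,
        }

    def call(self, name, **kwargs):
        entry = self._approved.get(name)
        if entry is None:
            raise PermissionError(f"tool not in approved catalog: {name}")
        return entry["handler"](**kwargs)
```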

Scoped, Least-Privilege Tools

Agents must also inherit the permissions of the user they are acting for. Passing the user’s token through the tool call ensures session integrity and respects multi-tenant and role-based access control (RBAC) boundaries. Least-privilege design might involve creating separate tools for different risk thresholds, such as one tool for refunds under $100 and another requiring escalation for higher amounts.
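The refund example can be sketched as two separate tools with different powers. The token table stands in for real token verification, and the function and permission names are assumptions.

```python
REFUND_LIMIT_CENTS = 100_00  # $100, the threshold used in the text

# Hypothetical token store standing in for real token verification.
TOKEN_PERMISSIONS = {
    "tok-support": {"refund:small", "refund:escalate"},
    "tok-viewer": set(),
}


def _require(user_token, permission):
    if permission not in TOKEN_PERMISSIONS.get(user_token, set()):
        raise PermissionError(f"token lacks {permission}")


def refund_small(user_token, order_id, amount_cents):
    """Low-risk tool: only refunds strictly under the limit."""
    _require(user_token, "refund:small")
    if not 0 < amount_cents < REFUND_LIMIT_CENTS:
        raise ValueError("amount outside the small-refund range")
    return {"order_id": order_id, "amount_cents": amount_cents, "status": "refunded"}


def request_refund_escalation(user_token, order_id, amount_cents):
    """Higher-risk tool: never moves money, only queues a human decision."""
    _require(user_token, "refund:escalate")
    if amount_cents <= 0:
        raise ValueError("amount must be positive")
    return {
        "order_id": order_id,
        "amount_cents": amount_cents,
        "status": "pending_human_approval",
    }
```

Splitting the capability this way means the worst outcome of a confused agent calling `refund_small` is bounded by the limit, while larger amounts always route to a person.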

Input/Output Validation and Sandboxing

AI-generated inputs must be validated to prevent prompt injection from corrupting internal databases. Similarly, output filtering, such as scanning for PII (Personally Identifiable Information), is necessary to ensure the agent does not inadvertently reveal sensitive data in its responses.
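As a rough illustration of output filtering, the sketch below redacts two PII shapes with regular expressions. The patterns are deliberately simple assumptions (email addresses and US-style Social Security numbers) and would miss many real-world formats; production systems typically rely on dedicated detection tooling.

```python
import re

# Simplified patterns for illustration; not exhaustive PII detection.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def redact_pii(text: str) -> str:
    """Replace matched PII with placeholders before the response is shown."""
    text = EMAIL.sub("[REDACTED_EMAIL]", text)
    return SSN.sub("[REDACTED_SSN]", text)
```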

Rate Limits, Quotas, and Kill-Switches

To prevent agents from flooding internal services with requests (in effect, a self-inflicted denial of service) or running up API costs, quotas should be managed centrally. A "kill-switch" or circuit breaker must be designed into the architecture to allow for emergency shutdown of agent threads without taking down the entire platform.
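A minimal sketch of a central controller with per-agent quotas and a per-agent kill-switch follows. `AgentController` and its method names are hypothetical; the design choice being illustrated is that halting one agent leaves the others running.

```python
class AgentController:
    """Hypothetical central quota tracker with a per-agent kill-switch."""

    def __init__(self, quota_per_agent):
        self._quota = quota_per_agent
        self._used = {}
        self._halted = set()

    def halt(self, agent_id):
        """Operator-triggered kill-switch for a single agent."""
        self._halted.add(agent_id)

    def authorize_call(self, agent_id):
        """Return True if the agent may make one more tool call."""
        if agent_id in self._halted:
            return False
        used = self._used.get(agent_id, 0)
        if used >= self._quota:
            # Trip the breaker: the agent stays halted until reviewed.
            self._halted.add(agent_id)
            return False
        self._used[agent_id] = used + 1
        return True
```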

Human-in-the-Loop Patterns

Progressive autonomy is the safest path: start with 100% human review, move to exception-only reviews, and eventually transition to auto-execution for routine, low-risk tasks. Transparent previews, where the agent explains its proposed actions, are critical for building the trust necessary for this transition.

Implementation Challenges and Mitigation Strategies

Hallucinated state and “phantom facts” (agents inventing what your SaaS did)

Challenge: When an agent can write tickets, change configs, or initiate transactions, an ungrounded completion becomes an operational incident. Research shows that parametric-only generation can hallucinate, and that grounding through retrieval reduces this failure mode.

Mitigation (agentic-layer pattern): Make the system of record authoritative by forcing the agent to “read-before-write”:

- retrieve or look up the current state,

- cite the retrieved evidence, then

- produce an action proposal.

Work on Retrieval-Augmented Generation (RAG) reports more factual generations than parametric-only baselines in knowledge-intensive settings.
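The read-before-write sequence can be sketched as a function that refuses to propose anything without first reading the record. The `propose_action` name and the dictionary-backed store are assumptions standing in for a real system of record.

```python
def propose_action(store, record_id, field, new_value):
    """Read current state first, then attach it as evidence to the proposal."""
    current = store.get(record_id)
    if current is None:
        # The agent believed in a record that does not exist.
        return {"status": "rejected", "reason": f"{record_id} not found"}
    if current.get(field) == new_value:
        return {"status": "noop", "evidence": dict(current)}
    return {
        "status": "proposed",
        "record_id": record_id,
        "change": {field: {"from": current.get(field), "to": new_value}},
        "evidence": dict(current),
    }
```

A proposal built this way carries the retrieved state alongside the change, so a reviewer (human or automated) can see what the agent actually observed rather than what it assumed.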

Brittle long-horizon execution (agents lose the plot mid-workflow)

Challenge: Multi-step workflows amplify small reasoning errors into wrong actions, retries, and runaway costs. Benchmarks designed for LLMs-as-agents identify long-term reasoning, decision-making, and instruction-following as core obstacles to “usable” agents across environments.

Mitigation: Prefer short, reversible steps with frequent “observe → re-plan” checkpoints. ReAct’s interleaving of reasoning traces with explicit actions is reported to improve success rates in interactive decision-making benchmarks compared to baselines, supporting a design where execution is broken into small tool calls separated by state reads.
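A minimal sketch of an "observe → re-plan" loop is shown below. It is not an implementation of ReAct itself; `run_with_checkpoints` and its callable parameters are hypothetical, and the step budget is an added assumption to bound runaway execution.

```python
def run_with_checkpoints(read_state, plan_next, apply_step, max_steps=10):
    """Take one small step at a time, re-reading state before each one."""
    history = []
    for _ in range(max_steps):
        state = read_state()     # observe: fresh read, never a cached belief
        step = plan_next(state)  # re-plan from what is actually true now
        if step is None:
            return history, "done"
        apply_step(step)         # act: a single small tool call
        history.append(step)
    return history, "step_budget_exhausted"
```

Because the plan is recomputed from a fresh read on every iteration, an error in one step changes what the next read returns instead of silently compounding.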

Human trust, controllability, and “surprising automation”

Challenge: Agents introduce uncertainty into user-facing and operator-facing flows; users must be able to understand, correct, and recover from mistakes.

Mitigation: For high-impact actions, design the agentic layer and UX around controllability: stage actions (propose → confirm), make uncertainty visible, provide overrides/undo where feasible, and preserve clear “why/what happened” traces. This is consistent with human-AI interaction guidelines that emphasize predictable, inspectable AI behavior.

Regression risk (agents drift as prompts/models/tools change)

Challenge: Agent behavior is sensitive to prompts, tool schemas, and model updates, and failures often present as “works in demo, breaks in production.”

Mitigation: Treat agent behavior as a testable artifact: maintain scenario-based suites that include tool-calling, long-horizon tasks, and failure-mode tracking (e.g., instruction-following breakdowns), reflecting the emphasis that agent benchmarks place on typical failure causes.
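A scenario suite of this kind might look like the sketch below, which replays prompts against an agent and classifies failures. The `evaluate` function, the scenario fields, and the failure labels are assumptions; the agent is any callable that returns a list of tool calls.

```python
def evaluate(agent, scenarios):
    """Replay each scenario and report (scenario name, failure mode) pairs."""
    failures = []
    for scenario in scenarios:
        tools = [call["tool"] for call in agent(scenario["prompt"])]
        forbidden = set(scenario.get("forbidden_tools", [])) & set(tools)
        if forbidden:
            failures.append((scenario["name"], "forbidden_tool"))
        elif tools != scenario["expected_tools"]:
            failures.append((scenario["name"], "wrong_tool_sequence"))
    return failures
```

Running the same suite after every prompt, schema, or model change turns "works in demo, breaks in production" into a failing check that surfaces before release.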

Compliance and Governance Considerations

In regulated B2B sectors, governance is a non-negotiable prerequisite for production.

Data Privacy (GDPR, CCPA)

Agentic layers must uphold purpose limitation and user consent. This often requires "ephemeral memory", ensuring interaction data is only retained as long as necessary for the task, and strict geo-fencing to comply with data residency requirements.
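"Ephemeral memory" can be approximated with a store whose entries expire after a fixed time-to-live. The `EphemeralMemory` class is a hypothetical sketch; the right retention period is a legal and policy question, not something code can decide.

```python
import time


class EphemeralMemory:
    """Hypothetical session store whose entries expire after a TTL."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._items = {}

    def put(self, key, value):
        self._items[key] = (value, self._clock() + self._ttl)

    def get(self, key):
        item = self._items.get(key)
        if item is None:
            return None
        value, expires_at = item
        if self._clock() >= expires_at:
            del self._items[key]  # expired data is deleted, not just hidden
            return None
        return value

    def purge_expired(self):
        """Delete every expired entry; returns how many were removed."""
        now = self._clock()
        expired = [k for k, (_, exp) in self._items.items() if now >= exp]
        for key in expired:
            del self._items[key]
        return len(expired)
```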

Healthcare (HIPAA)

Healthcare agents must operate within a regime of strict de-identification and isolated processing environments. Technical safeguards, including end-to-end encryption and unique user IDs for all AI actions, are mandatory for any system touching Protected Health Information (PHI).

Financial Regulations (SEC, FINRA, SOX)

For finance, auditability is paramount. Regulations require that all AI-driven communication with clients be archived and supervised just like human advisor messages. Furthermore, using "black box" AI does not exempt a firm from the Equal Credit Opportunity Act; any agent-driven denial of credit still requires a legally defensible explanation.

Governance Best Practices

Mature organizations are establishing AI Ethics Committees to review use cases before deployment. They treat every agent as an identity with IAM-style controls and maintain rigorous audit trails as a core compliance enabler.

Conclusion: Engineering for Reality, Not Hype

Executives must understand that agentic AI is an architectural decision, not merely a feature decision. Yet most companies experimenting today are getting that decision wrong.

When probabilistic systems are allowed to directly change systems of record, failures don’t appear as bugs. They appear as audit findings, customer escalations, and executive fire drills. The problem isn’t that agents are unsafe. It’s that they’re being introduced without the structures required to contain them.

Treating agentic AI as a dedicated architectural layer is the difference between controlled autonomy and accidental exposure. It’s the line between experimentation that scales and experimentation that quietly hard-codes risk into the platform.

At this point, every leadership team is already making a choice. Either agentic systems are designed deliberately, or they will enter the architecture anyway, driven by pressure, shortcuts, and optimism. And by the time that choice becomes obvious, it’s usually already too late.
