In the enterprise sector, artificial intelligence has moved beyond novelty and into an era of ROI scrutiny. This shift has exposed a structural paradox: while Large Language Models (LLMs) routinely score between 80% and 90% on standardized benchmarks, their performance in real production environments often drops below 60%.

This gap is especially visible in SAP’s findings. Models that achieved 0.94 F1 on benchmarks dropped to 0.07 F1 when tested on actual customer data, a roughly 13-fold decline.

The operational consequence is severe: 95% of enterprise AI pilots fail to progress beyond proof of concept (POC). Based on long-term industry experience, Codebridge finds that failure rates rise sharply when AI is treated as a research tool rather than an engineered product.

Global benchmarks measure what is easy to test, while production requires measuring what matters to stakeholders. Thus, teams must design evaluation into the product from the beginning, rather than adding metrics only after deployment.

The Global Metrics Illusion

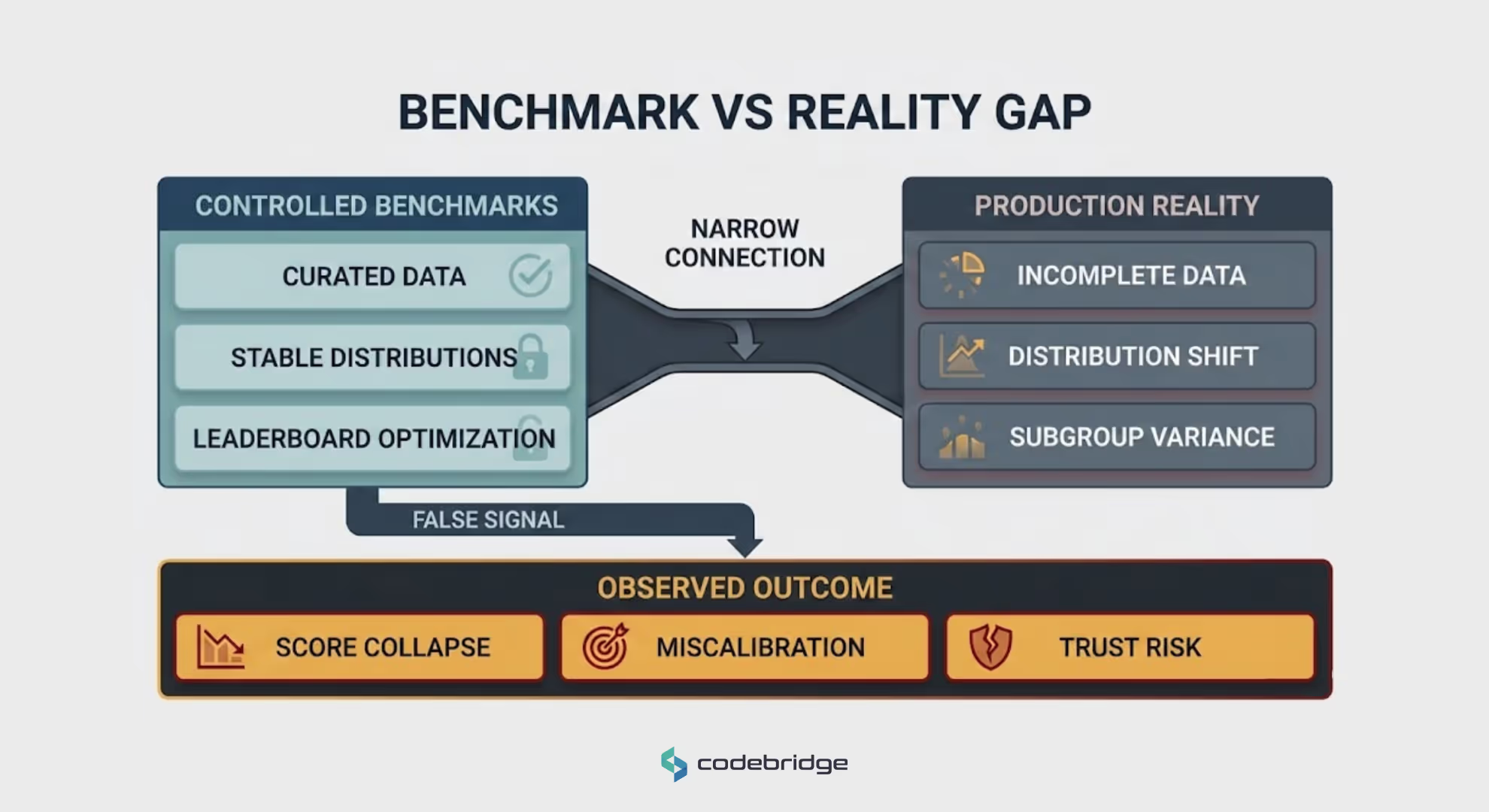

Universal benchmarks such as MMLU, MATH, and GSM8K create a false signal of readiness because they operate in controlled, optimized environments. These datasets are carefully curated, unlike the incomplete and unstable data found in production systems.

Models often learn benchmark-specific patterns instead of generalizable reasoning. That’s why, when leading models scoring above 90% on MMLU were tested on "Humanity’s Last Exam", a benchmark designed with anti-gaming controls, performance dropped dramatically.

This drop occurs partly because AI labs optimize for leaderboard performance to attract investment, prioritizing narrow metrics over real-world task complexity and reliability (benchmark gaming). A related problem is overconfidence: models often report high confidence even when their predictions are wrong.

On the HLE benchmark, models exhibited RMS calibration errors between 70% and 80%, meaning a model claiming 70% confidence was correct only 3% to 15% of the time. In high-stakes domains such as healthcare or finance, where factual errors are perceived as deception, a single confident but wrong prediction can undermine trust in an entire deployment.
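To make the calibration figure concrete, here is a minimal sketch of one common way to compute an RMS calibration error: group predictions into confidence bins, compare each bin's average stated confidence with its observed accuracy, and take the size-weighted root mean square of the gaps. The function name, the ten equal-width bins, and the sample data are assumptions for illustration; the exact procedure used for HLE may differ.

```python
import math
from collections import defaultdict


def rms_calibration_error(confidences, correct, n_bins=10):
    """Size-weighted RMS gap between stated confidence and observed accuracy."""
    bins = defaultdict(list)
    for conf, ok in zip(confidences, correct):
        # Equal-width bins over [0, 1]; a confidence of 1.0 goes in the last bin.
        idx = min(int(conf * n_bins), n_bins - 1)
        bins[idx].append((conf, ok))

    total = len(confidences)
    squared_gap = 0.0
    for items in bins.values():
        mean_conf = sum(c for c, _ in items) / len(items)
        accuracy = sum(1 for _, ok in items if ok) / len(items)
        squared_gap += (len(items) / total) * (mean_conf - accuracy) ** 2
    return math.sqrt(squared_gap)


# Hypothetical data: ten answers given at 90% confidence, only one correct.
confidences = [0.9] * 10
correct = [True] + [False] * 9
print(round(rms_calibration_error(confidences, correct), 2))  # 0.8
```

A well-calibrated model scores near zero on this measure regardless of its accuracy, which is why it is worth tracking separately from accuracy.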

Standard accuracy metrics also fail to capture distribution shift. Covariate shift occurs when input features change while relationships remain stable. Concept drift occurs when those relationships themselves change. Subgroup shift is especially harmful because a model can seem accurate overall while failing badly for certain user groups.
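Covariate shift in a single numeric feature can be flagged without any labels by comparing its distribution in training data against production traffic. The sketch below uses the Population Stability Index (PSI), one widely used heuristic for this and not a method named in the source; the function name, bin count, and sample values are illustrative assumptions.

```python
import math


def population_stability_index(reference, production, n_bins=10, eps=1e-6):
    """PSI between two samples, using equal-width bins fitted on the reference."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / n_bins or 1.0  # fall back to 1.0 if the reference is constant

    def shares(values):
        counts = [0] * n_bins
        for v in values:
            idx = int((v - lo) / width)
            # Values outside the reference range are clamped into the edge bins.
            counts[max(0, min(idx, n_bins - 1))] += 1
        # eps keeps empty bins from producing log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    ref, prod = shares(reference), shares(production)
    return sum((p - r) * math.log(p / r) for r, p in zip(ref, prod))


# Hypothetical feature values: production traffic has shifted upward by 50.
training = [float(v) for v in range(100)]
live = [v + 50 for v in training]
print(population_stability_index(training, training))  # 0.0
print(population_stability_index(training, live) > 0.25)  # True
```

A commonly cited rule of thumb treats PSI above roughly 0.25 as a significant shift, but the threshold should be tuned per feature. PSI only detects changes in inputs; concept drift and subgroup shift need labeled outcomes to detect.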

Unlike traditional software defects, these failures emerge gradually and often remain hidden until financial or operational damage has already occurred.

The Case for Domain-Specific Evaluation

Codebridge operates on the principle that one-size-fits-all evaluation does not exist. A model can lead benchmark rankings while remaining computationally impractical, opaque, or poorly aligned with real business workflows.

Epic’s sepsis model illustrates this risk. While developers reported 76–83% accuracy, real-world testing showed it missed 67% of sepsis cases and generated over 18,000 false alerts per hospital annually. Alert fatigue caused clinicians to ignore warnings, including correct ones. Instead of improving detection, the model degraded operational effectiveness by introducing noise.

Therefore, evaluation must focus on business consequences and not just on accuracy scores. This includes task-specific precision–recall trade-offs, where the cost of a false positive differs from the cost of a false negative. It requires failure-mode analysis to understand what happens when predictions are wrong, and subgroup testing to ensure reliability across user populations. It must also test whether professionals can realistically use the system’s outputs within their existing workflows and time limits.

Codebridge embeds evaluation into product design. Testing infrastructure is built alongside the model, and success metrics are derived from user requirements rather than available datasets. Organizations that integrate evaluation early often deploy faster because failures are detected before full production rollout.

Domain Deep-Dive: HealthTech

Healthcare is a safety-critical domain in which errors cause patient harm, and regulatory compliance is mandated by bodies such as the FDA and EMA. These systems operate under a strong explainability requirement, where opaque behavior violates oversight standards.

Failures often occur because training data reflects ideal clinical conditions rather than real hospital environments. Google’s diabetic retinopathy system performed well on curated clinical images but rejected 89% of images in Thai rural clinics due to outdated portable equipment and inconsistent lighting. IBM’s Watson for Oncology was trained on idealized patients and failed when confronted with comorbidities and incomplete medical histories.

A HealthTech evaluation framework must prioritize:

- Sensitivity and specificity by subgroup – consistent performance across age, ethnicity, and comorbidity profiles.

- Real-world false positive rates – measuring clinician alert burden.

- Clinical decision impact – whether treatment decisions actually improve.

- Workflow time delta – net effect on efficiency, including human verification time.
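The first item in this list can be sketched as a per-subgroup confusion count. This is a minimal illustration, assuming each record carries a subgroup label, the true outcome, and the model's prediction; the function name, group names, and data are hypothetical.

```python
from collections import defaultdict


def sensitivity_specificity_by_group(records):
    """records: iterable of (group, y_true, y_pred) with boolean labels."""
    counts = defaultdict(lambda: {"tp": 0, "fn": 0, "tn": 0, "fp": 0})
    for group, y_true, y_pred in records:
        if y_true:
            key = "tp" if y_pred else "fn"
        else:
            key = "fp" if y_pred else "tn"
        counts[group][key] += 1

    report = {}
    for group, c in counts.items():
        positives = c["tp"] + c["fn"]
        negatives = c["tn"] + c["fp"]
        report[group] = {
            # None marks a subgroup with no positive (or negative) cases to score.
            "sensitivity": c["tp"] / positives if positives else None,
            "specificity": c["tn"] / negatives if negatives else None,
        }
    return report


# Hypothetical evaluation set split by age band.
records = (
    [("under_65", True, True)] * 3 + [("under_65", True, False)] * 1
    + [("under_65", False, False)] * 4
    + [("over_65", True, True)] * 1 + [("over_65", True, False)] * 3
    + [("over_65", False, False)] * 3 + [("over_65", False, True)] * 1
)
for group, metrics in sensitivity_specificity_by_group(records).items():
    print(group, metrics)
```

In this made-up sample, pooled sensitivity is 50%, which hides the fact that the model catches three of four cases in one group and only one of four in the other.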

Domain Deep-Dive: FinTech

Finance operates in an adversarial environment where fraudsters adapt to detection systems. Models face extreme temporal instability: a credit model trained during economic stability will fail during a recession. Offline accuracy metrics can remain high while production performance silently deteriorates due to distribution shift.

Different types of errors carry very different financial consequences. Missing a $10,000 fraudulent transaction is fundamentally different from blocking a legitimate customer. Regulatory frameworks such as MiFID II require explainability and auditability to prevent disparate impact and legal exposure.

A FinTech evaluation framework must include:

- Cost-weighted accuracy – reflecting the business impact of each error type.

- Adversarial robustness – testing against emerging fraud patterns and synthetic attacks.

- Temporal decay monitoring – drift detection with retraining triggers.

- Explainability compliance – decision transparency for regulators and customers.
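As a sketch of cost-weighted accuracy, the example below scores two hypothetical fraud models that have identical plain accuracy but very different financial impact. It assumes a missed fraud costs the full transaction amount and a wrongly blocked customer costs a fixed amount; the function name, the 25.0 figure, and the data are illustrative assumptions, not real loss estimates.

```python
def cost_weighted_report(y_true, y_pred, amounts, false_block_cost=25.0):
    """Return plain accuracy alongside total error cost for a fraud classifier."""
    correct = 0
    total_cost = 0.0
    for is_fraud, flagged, amount in zip(y_true, y_pred, amounts):
        if is_fraud == flagged:
            correct += 1
        elif is_fraud:
            # Missed fraud: assume the full transaction amount is lost.
            total_cost += amount
        else:
            # Legitimate customer blocked: assumed fixed support/churn cost.
            total_cost += false_block_cost
    return {"accuracy": correct / len(y_true), "cost": total_cost}


# Hypothetical transactions: one $10,000 fraud and three small legitimate payments.
y_true = [True, False, False, False]
amounts = [10_000.0, 40.0, 60.0, 80.0]

misses_fraud = cost_weighted_report(y_true, [False, False, False, False], amounts)
blocks_customer = cost_weighted_report(y_true, [True, True, False, False], amounts)
print(misses_fraud)     # {'accuracy': 0.75, 'cost': 10000.0}
print(blocks_customer)  # {'accuracy': 0.75, 'cost': 25.0}
```

Both models make exactly one error, so accuracy cannot distinguish them; the cost column can.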

Domain Deep-Dive: LegalTech

Legal systems operate under a precision imperative: a single fabricated citation can lead to professional sanctions. Unlike other domains, approximation is unacceptable.

Hallucination risk remains significant. Even with Retrieval-Augmented Generation, hallucinations occur in 17% to 33% of outputs. In Gauthier v. Goodyear, an attorney was sanctioned after submitting AI-generated cases that did not exist. Because models express identical confidence in real and invented citations, productivity gains are often offset by mandatory verification.

Key LegalTech metrics include:

- Citation accuracy – every reference must exist and support the claim.

- Jurisdictional precision – correct application of local law.

- Temporal currency – use of current law rather than outdated precedent.

- Professional adequacy – compliance with professional responsibility standards.
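The existence half of the citation accuracy metric can be automated by checking every cited reference against a verified index. The sketch below assumes such an index is available as a collection of citation strings; the function name and the placeholder citations are invented for illustration and do not refer to real cases. Whether a citation actually supports the claim still requires legal review.

```python
def _normalize(citation):
    """Lowercase and collapse whitespace so trivial formatting differences match."""
    return " ".join(citation.lower().split())


def citation_accuracy(cited, verified_index):
    """Return (share of citations found in the index, list of unverified ones)."""
    known = {_normalize(c) for c in verified_index}
    unverified = [c for c in cited if _normalize(c) not in known]
    if not cited:
        return 1.0, unverified
    return (len(cited) - len(unverified)) / len(cited), unverified


# Placeholder citations only; none of these are real cases.
verified_index = ["Alpha v. Beta, 1 Ex. 100", "Gamma v. Delta, 2 Ex. 200"]
draft_citations = [
    "alpha v. beta,  1 Ex. 100",
    "Gamma v. Delta, 2 Ex. 200",
    "Epsilon v. Zeta, 3 Ex. 300",
]
score, unverified = citation_accuracy(draft_citations, verified_index)
print(round(score, 2), unverified)  # 0.67 ['Epsilon v. Zeta, 3 Ex. 300']
```

Given the precision imperative described above, a reasonable gate is to block any draft whose unverified list is non-empty rather than to accept a score below 100%.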

Integrating Evaluation into Product Design

At Codebridge, evaluation is treated as a first-class product feature. All teams must design measurement frameworks alongside product requirements instead of adding them later.

This approach follows a structured lifecycle:

- Discovery – define domain-specific success criteria with stakeholders before development begins.

- Architecture – embed evaluation hooks into system design for automated monitoring.

- Development – test continuously on production-representative data instead of academic benchmarks.

- Operations – implement MLOps pipelines with drift-triggered retraining.
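The Operations step can be illustrated with a small monitor that tracks rolling accuracy on labeled production outcomes and signals when it falls too far below the accuracy measured at launch. This is a sketch under stated assumptions: the class name, window size, and tolerance are hypothetical, and a real pipeline would typically combine a check like this with input-drift monitoring.

```python
from collections import deque


class RetrainingTrigger:
    """Signals retraining when rolling accuracy drops below baseline - tolerance."""

    def __init__(self, baseline, tolerance=0.05, window=100):
        self.baseline = baseline
        self.tolerance = tolerance
        self.outcomes = deque(maxlen=window)  # keeps only the latest `window` results

    def record(self, was_correct):
        """Add one labeled outcome; return True if retraining should be triggered."""
        self.outcomes.append(bool(was_correct))
        if len(self.outcomes) < self.outcomes.maxlen:
            return False  # not enough evidence yet
        rolling = sum(self.outcomes) / len(self.outcomes)
        return rolling < self.baseline - self.tolerance


# Hypothetical stream: ten correct predictions, then performance starts to decay.
trigger = RetrainingTrigger(baseline=0.9, tolerance=0.05, window=10)
signals = [trigger.record(ok) for ok in [True] * 10 + [False, False]]
print(signals[-3:])  # [False, False, True]
```

The window size trades reaction speed against noise: a short window reacts quickly but fires on random streaks, while a long window delays detection.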

This approach reduces launch failures and makes performance easier to maintain over time. In the short term, early detection catches benchmark-optimized systems before they fail at launch. In the long term, drift monitoring sustains performance, predictable behavior builds user trust, and domain-specific risks are reduced.

Conclusion

A major obstacle in deploying AI is the gap between benchmarks and production: strong benchmark results rarely predict how models behave with real user data. This gap persists because organizations measure what is convenient rather than what is operationally necessary.

To close this gap, teams must build AI systems as production infrastructure rather than research prototypes. Leaders must define success before building, test against real conditions, and integrate monitoring into the lifecycle. At Codebridge, evaluation is not an afterthought – it is the system’s foundation. When AI passes benchmarks but fails in production, the issue is rarely the model. It is the evaluation strategy.
