Financial services are moving from rule-based automation to AI systems that can plan and execute actions with limited human oversight. This isn’t just a forecast: the World Economic Forum estimates financial services firms will spend $97B on AI by 2027 across banking, insurance, and capital markets.

While predictive AI offered advice, agentic AI in fintech takes action: it moves money, approves loans, and executes trades. For buyers like bank risk committees and CTOs, the risk shifts from model risk (is the prediction accurate?) to agent risk (did the system just initiate an unauthorized SWIFT transfer?).

Consequently, technology scale-ups are hitting a due diligence bottleneck where the primary concern is no longer just model accuracy, but the ability to stop an agent from hallucinating a transaction and the existence of an immutable log of its reasoning. In this agentic era, "explainable prompts" are insufficient; the compliance requirement is a forensic audit trail of actions and controls that makes the system’s behavior inspectable, bounded, and reversible, even when the model’s outputs are probabilistic.

How to Design a Secure Architecture for Agentic AI in FinTech

A secure agentic architecture must combine standard software controls with safeguards designed for non-deterministic model behavior. While traditional FinTech architectures focus on securing static code and data flows, agentic systems introduce behavioral vulnerabilities, such as prompt injection or unsafe tool handshakes. Recent financial red-teaming benchmarks report that even GPT-4 succumbs to domain-specific jailbreaks 21.7% of the time, with failure rates rising as high as 58% in multi-turn dialogues that simulate persistent adversaries. This gap is why generic safety alignment falls short for agentic FinTech.

The following blueprint synthesizes industry-standard secure architecture principles with the specific requirements for governing autonomous agents.

Secure Agentic Architecture Blueprint (FinTech Design Pattern)

The primary objective of this architecture is to establish the orchestration framework as the mandatory control plane. This prevents AI agents from recomposing the system at runtime, such as discovering and calling unauthorized third-party APIs (for example, via MCP-style tool discovery), unless tool access is explicitly governed.

1) Control Plane: Orchestrator + Policy Engine

The Control Plane acts as the brain and the governor of the system, separating reasoning logic from tool execution.

- Intent & Scope Enforcement (IBAC/ABAC): Beyond traditional Role-Based Access Control (RBAC), the system should combine Attribute-Based Access Control (ABAC) with Intent-Based Access Control (IBAC) to evaluate whether the agent’s specific intent aligns with the user’s authorized scope before any action is permitted.

- Tool Governance: FinTechs must implement a strict allowlist and signed tool manifests; unknown or discovered tools must be blocked by default so agents can only use explicitly approved tools.

- Jurisdiction & Residency Checks: The orchestrator must enforce geographic routing controls, ensuring that a request in one jurisdiction does not inadvertently route sensitive PII through a non-compliant region simply because a model determined it was the most efficient path.

- Risk-Based Approvals: High-materiality actions (e.g., SWIFT transfers or SAR filings) must trigger step-up authentication or mandatory Human-in-the-Loop (HITL) approval gates.

- Policy Versioning: Business rules encoded in prompts must be treated as code, requiring version control, change logs, and formal approval workflows before promotion to production.
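The control-plane checks above can be sketched as a single deny-by-default decision function. This is a minimal illustration, not a reference implementation: the names `ToolRequest`, `Decision`, `APPROVED_TOOLS`, `HITL_TOOLS`, and the 10,000 threshold are all hypothetical, and a real policy engine would load versioned, signed policy data rather than module-level constants.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    REQUIRE_HUMAN_APPROVAL = "require_human_approval"
    DENY = "deny"


@dataclass(frozen=True)
class ToolRequest:
    tool: str               # tool the agent wants to call
    intent: str             # intent the agent declares for this call
    amount: float           # monetary value of the action
    user_scopes: frozenset  # intents the end user is authorized for


# Hypothetical policy data; assumed to come from a versioned policy bundle.
APPROVED_TOOLS = {"lookup_balance", "initiate_refund", "initiate_swift_transfer"}
HITL_TOOLS = {"initiate_swift_transfer"}  # always routed to a human approver
HITL_AMOUNT_THRESHOLD = 10_000.0          # illustrative materiality threshold


def evaluate(request: ToolRequest) -> Decision:
    # Tool governance: unknown or runtime-discovered tools are blocked.
    if request.tool not in APPROVED_TOOLS:
        return Decision.DENY
    # Intent check: the declared intent must sit inside the user's scope.
    if request.intent not in request.user_scopes:
        return Decision.DENY
    # Risk-based approval: high-materiality actions need a human in the loop.
    if request.tool in HITL_TOOLS or request.amount >= HITL_AMOUNT_THRESHOLD:
        return Decision.REQUIRE_HUMAN_APPROVAL
    return Decision.ALLOW
```

Ordering the checks so that both denial paths run before the approval path means a human approver is never asked to sign off on a call that policy would have rejected anyway.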

2) Execution Plane: Agent Runtime

The Execution Plane is the sandboxed environment where the agent performs its cognitive tasks.

- Sandboxed Compute: Each agent or tenant workflow should run in an isolated runtime (e.g., Docker/K8s) with a deny-by-default networking posture to prevent unauthorized lateral movement.

- Scoped Credentials: Agents must never hold root or persistent access; instead, they should use short-lived, revocable tokens (e.g., JWTs with a short expiry) bound to a specific task, amount limit, and dataset scope.

- Structured Actions Only: To maintain determinism, agents must interact with tools using typed schemas (e.g., JSON Schema) rather than free-form execution, ensuring inputs are validated for format and range before reaching the tool.

- Memory Boundaries: Architecture must separate ephemeral working memory from persistent long-term memory, with default policies to prevent the persistence of sensitive data across sessions.
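To make "structured actions" and "scoped credentials" concrete, here is a small sketch using only the Python standard library. The `RefundAction` schema, the 10-character account format, the currency list, the 5,000 cap, and the `ScopedToken` fields are assumptions chosen for illustration; a production system would more likely validate against a published JSON Schema and verify signed tokens.

```python
from dataclasses import dataclass

ALLOWED_CURRENCIES = {"USD", "EUR", "GBP"}  # illustrative
MAX_REFUND = 5_000.0                        # illustrative per-action cap


@dataclass(frozen=True)
class RefundAction:
    account_id: str
    amount: float
    currency: str


def parse_refund(payload: dict) -> RefundAction:
    """Validate a raw agent payload before it can reach any tool."""
    if set(payload) != {"account_id", "amount", "currency"}:
        raise ValueError("unexpected or missing fields")
    account_id = payload["account_id"]
    amount = payload["amount"]
    currency = payload["currency"]
    if not (isinstance(account_id, str) and account_id.isalnum() and len(account_id) == 10):
        raise ValueError("account_id must be 10 alphanumeric characters")
    if isinstance(amount, bool) or not isinstance(amount, (int, float)):
        raise ValueError("amount must be a number")
    if not 0 < amount <= MAX_REFUND:
        raise ValueError("amount out of range")
    if currency not in ALLOWED_CURRENCIES:
        raise ValueError("unsupported currency")
    return RefundAction(account_id, float(amount), currency)


@dataclass(frozen=True)
class ScopedToken:
    task_id: str
    allowed_tool: str
    max_amount: float
    expires_at: float  # Unix timestamp after which the token is useless

    def permits(self, tool: str, amount: float, now: float) -> bool:
        # All three bounds must hold: tool, amount limit, and lifetime.
        return (
            tool == self.allowed_tool
            and amount <= self.max_amount
            and now < self.expires_at
        )
```

Rejecting payloads with extra keys, rather than silently ignoring them, keeps an agent from smuggling unvalidated parameters through to the tool.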

3) Tool Plane: Tool Gateway

The Tool Gateway serves as the single chokepoint between the probabilistic agent and the deterministic financial systems.

- Single Chokepoint: No agent should have direct API access; all calls must be mediated by the gateway for inspection and enforcement.

- Capability-Based Permissions: Permissions must be assigned to narrow, specific functions (e.g., "initiate_refund") rather than broad system endpoints.

- Quotas & Circuit Breakers: Apply real-time interdiction through runtime safeguards (quotas, anomaly detection, and circuit breakers) that block suspicious calls before execution. If interdiction relies on model-internals methods, document the approach and state its limitations.

- Dry-Run / Simulation: High-stakes transactions should undergo a preflight simulation (e.g., using agent-based market simulators) to assess the potential impact before commitment.

- Egress Controls: The gateway enforces domain allowlists and performs outbound Data Loss Prevention (DLP) to scan for unauthorized data exfiltration.
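As a rough sketch of the quota and circuit-breaker idea, the gateway below wraps every tool call, enforces a sliding-window call quota, and trips open after repeated downstream failures. The class name `ToolGateway` and its parameters are hypothetical, and the caller passes `now` explicitly so the behavior is easy to test; a real gateway would also need per-agent state, persistence, and a controlled reset path.

```python
from collections import deque


class ToolGateway:
    """Single chokepoint that mediates every tool call (illustrative)."""

    def __init__(self, max_calls: int, window_seconds: float, max_failures: int):
        self.max_calls = max_calls
        self.window_seconds = window_seconds
        self.max_failures = max_failures
        self._calls = deque()  # timestamps of recent accepted calls
        self._failures = 0     # consecutive downstream failures
        self.open = False      # an open circuit blocks all calls

    def call(self, tool, now: float, *args):
        if self.open:
            raise PermissionError("circuit open: calls are blocked")
        # Drop timestamps that have aged out of the sliding window.
        while self._calls and now - self._calls[0] >= self.window_seconds:
            self._calls.popleft()
        if len(self._calls) >= self.max_calls:
            raise PermissionError("quota exceeded")
        self._calls.append(now)
        try:
            result = tool(*args)
        except Exception:
            self._failures += 1
            if self._failures >= self.max_failures:
                self.open = True  # trip the breaker
            raise
        self._failures = 0  # a success resets the failure streak
        return result
```

In this sketch the breaker stays open until someone resets it out of band, which matches the article's preference for failing closed on high-stakes actions.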

4) Data Plane: Retrieval + Privacy Controls

This plane governs how data is retrieved, masked, and utilized within the agentic workflow.

- Minimum Necessary Access: Implement row and field-level security controls to ensure agents only retrieve the specific data points required for their goal, preventing bulk data exports.

- Tokenization/Masking: An inference gateway should mask or tokenize PII/PCI data before it reaches the model, ensuring the reasoning engine never sees raw sensitive identifiers.

- DLP on Inputs/Outputs: Continuous scanning of prompts, RAG retrieval results, and tool outputs is required to identify and redact sensitive information.

- Segmentation: Maintain strict tenant isolation and data zoning (public, internal, confidential, restricted) to prevent cross-contamination in multi-agent environments.
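The tokenization step can be illustrated with a small masking function that runs before a prompt reaches the model. This is a simplified sketch: the two regular expressions are deliberately naive stand-ins for a real PII/PCI detector, and `tokenize` and `mask_prompt` are hypothetical names. Keyed HMAC tokens are used here so the same identifier always maps to the same token without being reversible by the model.

```python
import hashlib
import hmac
import re

# Naive illustrative patterns; real detectors need far broader coverage.
CARD_RE = re.compile(r"\b\d{13,19}\b")
EMAIL_RE = re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")


def tokenize(value: str, key: bytes, prefix: str) -> str:
    """Replace a sensitive value with a deterministic keyed token."""
    digest = hmac.new(key, value.encode(), hashlib.sha256).hexdigest()[:12]
    return f"<{prefix}:{digest}>"


def mask_prompt(text: str, key: bytes) -> str:
    """Mask card numbers and email addresses before inference."""
    text = CARD_RE.sub(lambda m: tokenize(m.group(), key, "CARD"), text)
    text = EMAIL_RE.sub(lambda m: tokenize(m.group(), key, "EMAIL"), text)
    return text
```

Because the tokens are deterministic per key, downstream systems that hold the key and a lookup table can map a token back to the original value, while the reasoning engine only ever sees the placeholder.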

5) Assurance Plane: Audit + Monitoring + Recovery

The Assurance Plane provides the evidence required for regulatory defense and forensic investigation.

- Immutable Audit Trails: Every autonomous decision must be recorded in an immutable audit log (replayable decision record) that captures the intent, the policy decision, the tool call parameters, and the final outcome.

- Provenance: Action logs must record the exact version of the model, prompt template, and tool manifest used at the time of inference to ensure reproducibility.

- Anomaly Detection: AI-powered monitoring must detect "weirdness" in real time, such as suspicious tool chaining, payment spikes, or low-and-slow data exfiltration attempts.

- Kill Switch: Authorized personnel must have a central emergency stop mechanism to instantly disable an agent, tool, or policy bundle and revert to a known-good state.

- Continuous Red-Teaming: Integrate Autonomous Attack Simulation (AAS) into the CI/CD pipeline to probe agents for prompt injection and goal subversion before every deployment.
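One common way to approximate an immutable audit trail in software is a hash chain, where each entry commits to the one before it. The sketch below is an assumption about how this could look, with a hypothetical `AuditLog` class; it makes tampering detectable rather than impossible, so it complements write-once storage instead of replacing it.

```python
import hashlib
import json


class AuditLog:
    """Append-only, hash-chained decision log (tamper-evident sketch)."""

    GENESIS = "0" * 64  # placeholder hash for the first entry

    def __init__(self):
        self._entries = []

    def append(self, record: dict) -> str:
        prev_hash = self._entries[-1]["hash"] if self._entries else self.GENESIS
        body = json.dumps(record, sort_keys=True)  # canonical serialization
        entry_hash = hashlib.sha256((prev_hash + body).encode()).hexdigest()
        self._entries.append(
            # json.loads(body) stores a snapshot, not the caller's dict.
            {"record": json.loads(body), "prev_hash": prev_hash, "hash": entry_hash}
        )
        return entry_hash

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev_hash = self.GENESIS
        for entry in self._entries:
            body = json.dumps(entry["record"], sort_keys=True)
            expected = hashlib.sha256((prev_hash + body).encode()).hexdigest()
            if entry["prev_hash"] != prev_hash or entry["hash"] != expected:
                return False
            prev_hash = entry["hash"]
        return True
```

A record here would carry the fields the bullet on audit trails lists: the intent, the policy decision, the tool call parameters, and the final outcome.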

In the agentic era, orchestration is your primary control plane. By forcing every autonomous action through a structured gateway involving policy enforcement, sandboxed execution, and immutable auditing, you transform agent risk into a manageable operational metric. This architecture ensures that agent autonomy remains bounded and reversible.

Validating Behavior in AI Systems for FinTech

The “innovation trilemma” argues that regulators face a three-way trade-off between clear rules, market integrity, and supporting innovation, and that pushing hard on two usually compromises the third.

Agentic AI intensifies this tension by introducing emergent, non-linear behaviors that cannot be fully anticipated at design time. In financial services, where autonomous systems may execute trades, access sensitive data, or trigger payments, traditional validation approaches such as static backtesting or historical performance comparisons are inadequate. They measure past accuracy, not future behavior under adversarial pressure.

Autonomous Attack Simulation (AAS)

AAS tests how an agent behaves across multi-step workflows by running adversarial scenarios in a sandbox, focusing on tool use, permissions, and data handling. Rather than relying solely on human red teams, AAS deploys adversarial AI agents that automatically generate and execute attack scenarios against target agents in sandboxed environments.

The objective is not to test isolated model responses, but to evaluate full system behavior: how agents interpret prompts, call tools, share context, and make decisions across multi-step workflows.

In fintech, this is critical as financial agents operate in high-stakes environments where prompt injection, context leakage, unauthorized API calls, or subtle goal manipulation can translate directly into compliance breaches or data exposure. AAS enables firms to proactively test for these behavioral vulnerabilities before they surface in production.

How AAS Works in Practice

- Deploy an adversarial agent library that simulates realistic attacker motives such as prompt injection, privilege escalation, data exfiltration, and jailbreak attempts.

- Run multi-agent scenarios inside a sandboxed behavioral harness that records reasoning traces, tool invocations, and data access patterns without touching production systems.

- Enforce agent contracts through a policy engine that monitors permissions, flags unauthorized API usage, and detects context leakage or unsafe tool combinations.

- Convert findings into regression tests via a feedback loop that blocks deployment on failure, generates structured reports, and continuously refines behavioral safeguards.

In CI/CD, AAS becomes a gated test stage: builds fail if scenarios trigger unauthorized tool use or sensitive data leakage. Each build becomes a controlled stress test for autonomous reasoning, while continuous monitoring detects behavioral drift over time.
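A gated AAS stage might look roughly like the harness below. Everything in it is a hypothetical sketch: `Scenario`, `Trace`, `evaluate_scenario`, and `gate` are illustrative names, and `run_agent` stands in for whatever sandboxed entry point your agent exposes. The gate returns a non-zero exit code when any scenario produces an unauthorized tool call or leaks a sensitive marker.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Scenario:
    name: str
    adversarial_prompt: str
    allowed_tools: frozenset  # tools the agent may legitimately call


@dataclass
class Trace:
    tool_calls: list = field(default_factory=list)  # tool names invoked
    output: str = ""                                # final agent output


def evaluate_scenario(scenario, run_agent, secret_markers):
    """Run one adversarial scenario and list any contract violations."""
    trace = run_agent(scenario.adversarial_prompt)
    violations = []
    for tool in trace.tool_calls:
        if tool not in scenario.allowed_tools:
            violations.append(f"{scenario.name}: unauthorized tool '{tool}'")
    for marker in secret_markers:
        if marker in trace.output:
            # Do not echo the marker itself into CI logs.
            violations.append(f"{scenario.name}: sensitive data leaked")
    return violations


def gate(scenarios, run_agent, secret_markers) -> int:
    """Return a process exit code: 0 passes the build, 1 fails it."""
    violations = [
        v for s in scenarios for v in evaluate_scenario(s, run_agent, secret_markers)
    ]
    for violation in violations:
        print("FAIL", violation)
    return 1 if violations else 0
```

In a pipeline, the stage would typically end with `sys.exit(gate(...))`, and each violation found would be kept as a permanent regression scenario.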

For fintech businesses adopting domain-specific adversarial standards such as FinJailbreak, AAS ensures that safeguards are evaluated against realistic financial misconduct scenarios, not generic safety benchmarks, aligning AI innovation with regulatory resilience and market trust.

Why Governance and Auditability Influence Procurement Decisions

For financial institutions, a robust transparency layer is a non-negotiable operational requirement and not just a feature. In the agentic era, where the “Point of Intent” is separated from the “Point of Execution,” the audit trail becomes the forensic flight recorder of your system. It is your primary defense when a regulator or board member asks, “How did this system arrive at that recommendation?”

Why This Becomes a Moat in Agentic AI-driven FinTech Systems

In regulated markets, traceability is a buying criterion. Oversight frameworks increasingly require record-keeping and logging capabilities to support monitoring, investigation, and accountability across the AI lifecycle.

When you can produce decision provenance on demand, such as inputs, sources, versions, tool calls, and approvals, you reduce the cost of due diligence for risk and compliance, which accelerates vendor approval and deployment. In other words, superior auditability converts governance from a blocker into a sales enabler by making assurance portable, inspectable, and repeatable.

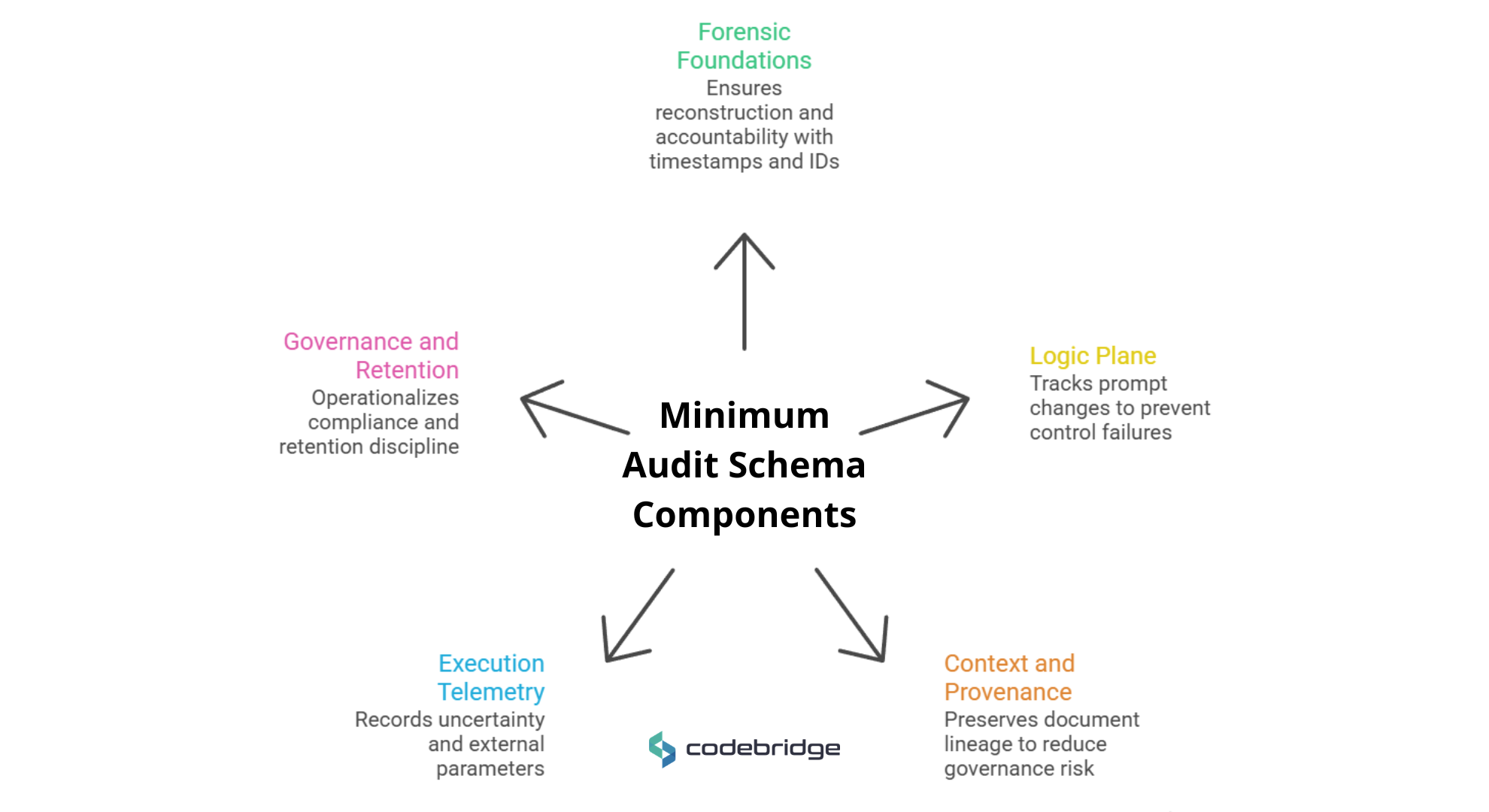

The Minimum Viable Audit Schema

Architecturally, FinTechs should implement a minimum audit schema (e.g., ~12–20 fields, depending on the regulator and risk tier) and publish that schema in their assurance pack.

- Forensic foundations (Timestamp_UTC, Audit_ID, User_ID) enable reconstruction and accountability.

- The logic plane (Model_Name & Version, Prompt_Version) matters because prompts function as executable business rules; undocumented changes become control failures.

- Context and provenance (Query_Classification, Source_Documents) preserve RAG document lineage, reducing “silent” governance risk from untraceable facts.

- Execution telemetry (Tool_Call_Inputs, Confidence_Score, Response_ID, Output_Type) records uncertainty and external parameters.

- Governance and retention (Regulatory_Context, Approval_Status, Data_Retention_Days) operationalize compliance obligations and retention discipline.
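The field groups above could be captured in a single typed record. The sketch below uses the field names from the list (with Model_Name and Version split into two fields), but the Python types, the 0 to 1 range for the confidence score, and the `to_json` helper are assumptions made for illustration; your regulator and risk tier may call for different fields or formats.

```python
import json
import uuid
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditRecord:
    # Forensic foundations
    user_id: str
    # Logic plane
    model_name: str
    model_version: str
    prompt_version: str
    # Context and provenance
    query_classification: str
    source_documents: tuple
    # Execution telemetry
    tool_call_inputs: dict
    confidence_score: float
    response_id: str
    output_type: str
    # Governance and retention
    regulatory_context: str
    approval_status: str
    data_retention_days: int
    # Generated at inference time, never reconstructed afterwards
    timestamp_utc: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )
    audit_id: str = field(default_factory=lambda: str(uuid.uuid4()))

    def __post_init__(self):
        # Assumed convention: confidence is a probability-like score.
        if not 0.0 <= self.confidence_score <= 1.0:
            raise ValueError("confidence_score must be between 0 and 1")
        if self.data_retention_days <= 0:
            raise ValueError("data_retention_days must be positive")

    def to_json(self) -> str:
        """Serialize with sorted keys so records hash consistently."""
        return json.dumps(asdict(self), sort_keys=True)
```

Freezing the dataclass prevents fields from being reassigned after creation, which mirrors the expectation that an audit record is written once and never edited.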

Strategic benefits your audit layer should deliver

- Compliance: faster evidence production for audits, investigations, and post-market monitoring.

- Trust: higher organizational confidence through documented accountability and traceability.

- Revenue acceleration: reduced procurement friction and shorter security/risk review cycles via reusable assurance artifacts.

- Risk insulation: faster incident triage and clearer blast-radius containment through immutable, queryable logs.

Implementation typically requires two shifts: adopting write-once-read-many (WORM) storage to prevent retroactive modification, and tagging every response at inference time rather than reconstructing logs after the fact.

Strategic Outlook: Aligning with the Regulatory Roadmap

The regulatory landscape for AI in finance is no longer speculative. Supervisors increasingly expect firms to demonstrate accountability for opaque (“black box”) outcomes and are building supervisory technology capabilities to monitor risks at scale.

Regulatory roadmap by control objective

Record-keeping and traceability (AI governance evidence you can replay)

Across regimes, the durable requirement is provable traceability: high-risk AI systems must support automatic logging over the system lifecycle to enable oversight, post-market monitoring, and investigation. In practice, this means your audit trail (schema + immutable storage) must produce a replayable decision record that survives regulator scrutiny and internal audit.

Model governance and validation (MRM becomes the common language with Tier-1 banks)

Banks will map your agentic AI controls onto established Model Risk Management expectations: robust development practices, independent validation, and governance with clear documentation. This is explicit in U.S. SR 11-7 guidance and the UK PRA’s SS1/23 principles, both of which shape how large institutions evaluate third-party models and AI agents.

Operational resilience and third-party concentration risk (AI supply chains are now supervised)

Operational resilience obligations increasingly extend to ICT dependencies, cloud providers, and critical third parties, an especially acute issue as AI agents rely on shared infrastructure, data providers, and model vendors. DORA applies from 17 January 2025 and creates a supervisory backbone for resilience expectations, including oversight of critical providers.

Procurement math: KPIs that turn compliance into sales velocity

To prove “alignment pays,” optimize two procurement-facing KPIs.

Time-to-risk-signoff (days from security/MRM review start to approval) drops when standardized audit artifacts and reproducible logs replace manual evidence gathering.

Model change approval latency (days from agent update to production clearance) shrinks when prompts/models are versioned and each release automatically generates an assurance pack (audit + validation outputs) that enables apples-to-apples vendor comparison.
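Both KPIs are elapsed-day measures, so they can be tracked with very little code. The sketch below assumes you record a start and end date per review or release; the function names and the choice of the median as the summary statistic are illustrative assumptions, not part of any standard.

```python
from datetime import date
from statistics import median


def days_between(start: date, end: date) -> int:
    """Elapsed calendar days between two milestones."""
    return (end - start).days


def median_days(intervals) -> float:
    """Median elapsed days across (start, end) pairs."""
    return median(days_between(start, end) for start, end in intervals)


# Hypothetical review history: (review start, risk sign-off) per customer.
risk_reviews = [
    (date(2025, 1, 6), date(2025, 2, 5)),
    (date(2025, 3, 3), date(2025, 3, 24)),
    (date(2025, 4, 1), date(2025, 5, 16)),
]
time_to_risk_signoff = median_days(risk_reviews)
```

The same `median_days` helper applies to model change approval latency if you feed it (agent update, production clearance) date pairs. The median is used rather than the mean so that one unusually slow review does not dominate the trend.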

This is how governance becomes a commercial accelerator, not a post-sale hurdle.

Conclusion: What to implement first

In the agentic era, "autonomy" is a liability without "auditability". The transition from AI as an advisor to AI as an actor represents a shift in liability and operational accountability that traditional risk frameworks cannot handle. High-parameter complex systems like agentic AI upend static assumptions about stable models and one-time validations.

The winners in the FinTech space will not necessarily be the companies with the most powerful agents, but those that can provide regulators and clients with a "black box" flight recorder for every autonomous decision the system makes. Building these governance structures and forensic audit trails today is not merely a compliance exercise; it is a critical competitive advantage that unblocks the sales cycle and secures a long-term license to operate in the future of autonomous finance.
