The healthcare sector is currently navigating a fundamental transition from experimental "AI-assisted" tools to a structural "AI-first" paradigm. Several forces are accelerating this change: a projected global health worker shortage of 10 million by 2033, a doubling of the medical knowledge base every 73 days, rising cost constraints, and the development of scalable digital infrastructure.

Therefore, healthcare leaders must now focus less on isolated AI pilots and more on engineering scalable, governed infrastructure that supports long-term patient care.

The Growth of AI in Healthcare: Why the Shift Is Structural, Not Experimental

The healthcare AI market is projected to expand from approximately $36.67 billion in 2025 to over $505 billion by 2033, a compound annual growth rate of 38.9%. This growth reflects a move toward technology-driven models that help healthcare systems manage increasing complexity.
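
As a quick sanity check on the growth figures above, the implied compound annual growth rate can be recomputed from the start and end values. This is a minimal sketch; the function name `cagr` is illustrative, and the end value uses the $505.59 billion figure quoted later in this article.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.39 means 39% per year)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Market size in billions of USD: 2025 baseline and 2033 projection.
rate = cagr(36.67, 505.59, 2033 - 2025)
print(f"Implied CAGR: {rate:.1%}")
```

The result lands within a fraction of a percentage point of the quoted 38.9%; small differences depend on rounding and on which base year the original forecast used.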

The Impact of AI in Healthcare on System Architecture and Scalability

Traditional on-premises AI deployments are proving insufficient for the scale of modern clinical data, which is currently expanding at an annual rate of approximately 48%. The sheer volume of data generated over a patient's lifetime, including up to three terabytes from a single genomic sequence, creates "data gravity" that legacy, fixed-capacity hardware cannot handle efficiently.

Consequently, successful enterprise systems are transitioning to cloud-native and hybrid architectures to maintain the performance required for clinical use.

Cloud-native architectures are engineered specifically to leverage the elasticity of cloud computing through containerization and microservices. By decomposing monolithic applications into smaller, independently deployable services managed by orchestration tools like Kubernetes, these systems can dynamically scale computing nodes during peak demand, such as the high-volume periods in radiology between 10 AM and 2 PM.

This architectural shift is a major performance multiplier; cloud-native systems have demonstrated a 78% reduction in deployment times, moving from a traditional average of 180 days down to just 40.
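
To illustrate how an orchestrator decides how many nodes to run during a peak, the sketch below applies the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas equal the current replicas multiplied by the ratio of the observed metric to its target, rounded up. The utilization numbers and the replica limits are assumptions chosen for illustration, and the real autoscaler also applies a tolerance band and stabilization windows that are not modeled here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 2,
                     max_replicas: int = 12) -> int:
    """Replica count that would bring the per-replica metric back to target."""
    if current_replicas < 1 or target_metric <= 0:
        raise ValueError("need at least one replica and a positive target")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured floor and ceiling (hypothetical limits).
    return max(min_replicas, min(max_replicas, raw))


# Midday radiology peak: 4 inference pods at 90% GPU utilization, target 60%.
peak = desired_replicas(4, current_metric=90, target_metric=60)
# Overnight lull: the same 4 pods at 15% utilization.
off_peak = desired_replicas(4, current_metric=15, target_metric=60)
print(peak, off_peak)
```

The floor keeps a minimum of capacity warm for urgent studies, while the ceiling caps spend during unexpected surges.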

Hybrid architectures provide a strategic balance by combining private on-premises resources with public cloud infrastructure. This model addresses the dual constraints of regulatory compliance and latency.

In practice, sensitive data processing can remain within an institution's firewall to satisfy HIPAA requirements and data sovereignty laws, while the public cloud is utilized for computationally intensive tasks like training deep neural networks or managing "burst capacity" during unexpected surges in patient volume.
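
The placement rule described above can be sketched as a small routing function. This is an illustrative policy, not a compliance requirement: the `Workload` fields, the `place` function, and the capacity figure are all hypothetical, and an institution's actual rules would come from its own legal and security review.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    ON_PREM = "on_prem"
    PUBLIC_CLOUD = "public_cloud"


@dataclass(frozen=True)
class Workload:
    name: str
    contains_phi: bool   # identifiable patient data is involved
    gpu_hours: float     # estimated compute demand


def place(workload: Workload, on_prem_gpu_hours_free: float) -> Target:
    """Keep sensitive processing inside the firewall; burst the rest to cloud."""
    if workload.contains_phi:
        return Target.ON_PREM
    if workload.gpu_hours > on_prem_gpu_hours_free:
        return Target.PUBLIC_CLOUD  # burst capacity for heavy, non-sensitive jobs
    return Target.ON_PREM


jobs = [
    Workload("bedside-inference", contains_phi=True, gpu_hours=1.0),
    Workload("deidentified-model-training", contains_phi=False, gpu_hours=500.0),
]
for job in jobs:
    print(job.name, "->", place(job, on_prem_gpu_hours_free=100.0).value)
```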

The adoption of these scalable frameworks allows for sub-second image rendering and real-time inference, which are critical in life-safety scenarios. Furthermore, these architectures reduce total cost of ownership by 30–40% compared with traditional on-premises alternatives by eliminating the need for expensive hardware over-provisioning.

How AI Will Affect Healthcare Product Roadmaps in the Next 5–10 Years

In the near term (0–5 years), roadmaps prioritize “reclaiming the clinician” because the binding constraint in care delivery is no longer clinical capability but clinician attention. Documentation and EHR work consume disproportionate time (physicians report roughly 2 hours of documentation for every 1 hour of patient care), while operational friction such as prior authorization adds recurring load.

This is also a retention and financial imperative: physician burnout is estimated to cost the U.S. system $4.6B annually through turnover and reduced clinical hours. Therefore, success in this phase is measured by hard reductions in time spent on high-volume cognitive work, especially documentation and imaging workflow, so the “keyboard” stops mediating the patient-provider interaction.

As we move into the 5-10+ year horizon, roadmaps must address a different crisis: the fact that medical knowledge now doubles every 73 days. This volume of information has surpassed human cognitive capacity, necessitating a shift toward "Ambient Intelligence" and "Digital Twins."

Roadmaps are therefore evolving through three distinct phases of agency:

- Administrative Automation (Current): Using NLP and Gen AI to solve the documentation crisis.

- Predictive Orchestration (Mid-term): Moving from "DISEASEcare" to "LIFEcare," where AI analyzes longitudinal data to predict sepsis or chronic exacerbations hours or weeks before clinical presentation.

- Digital Twin Simulation (Long-term): Transitioning to a paradigm where interventions are tested on a patient’s "digital and biomedical" replica before real-world execution.

The product goal is no longer just to provide a better interface for data entry, but to build a system of intelligence that acts as an autonomous participant in the care journey.

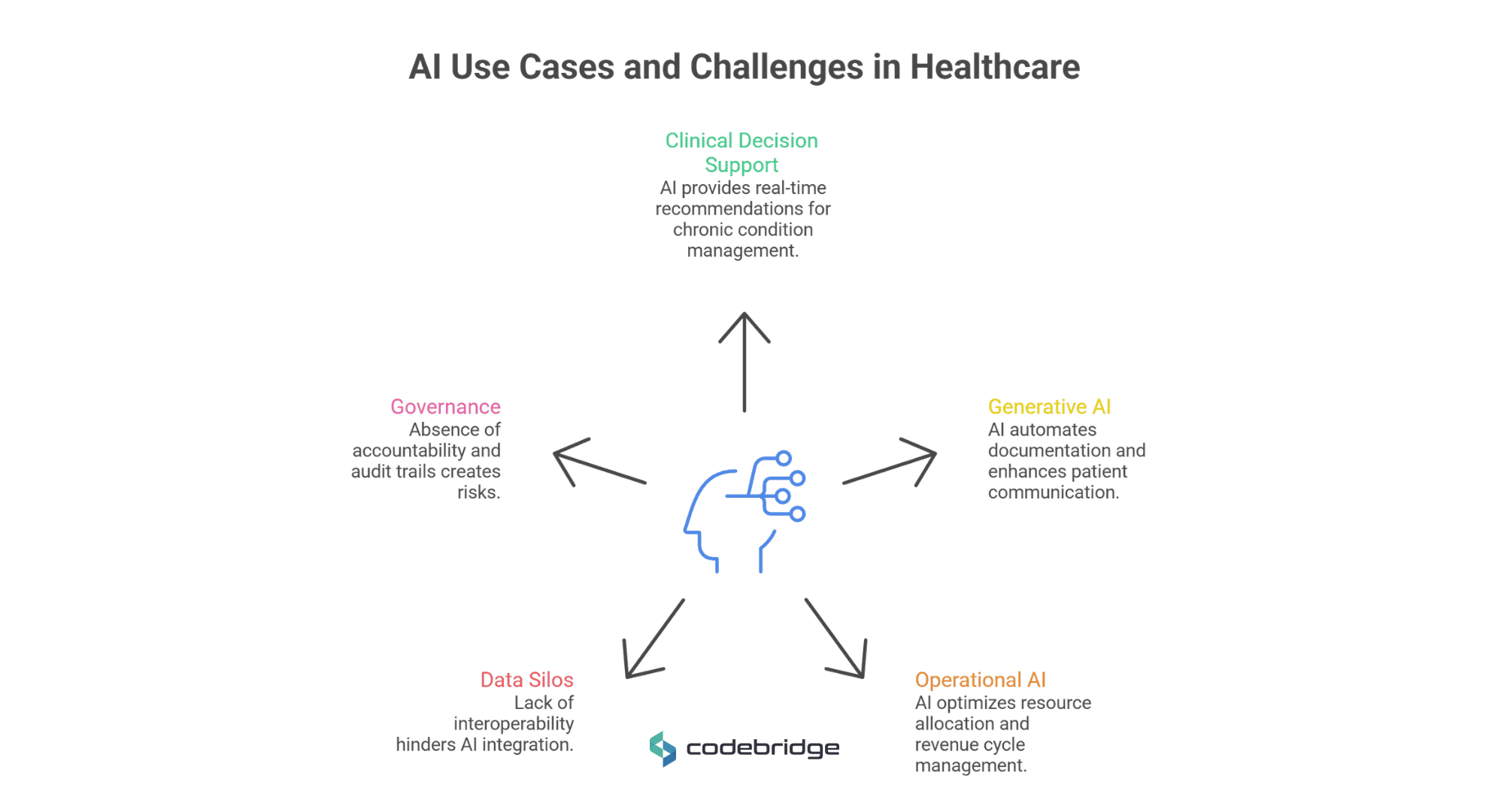

Current AI Use Cases in Healthcare – and Where They Break

While current AI applications show technical maturity, many fail in production due to a lack of integration and clinical trust.

Clinical Decision Support and Predictive Analytics

AI-driven Clinical Decision Support Systems (CDSS) now provide real-time, evidence-based recommendations for managing chronic conditions such as diabetes and hypertension. Predictive analytics can forecast sepsis onset hours before clinical symptoms appear, a critical advancement given that mortality increases by 8% for every hour treatment is delayed.

However, these systems "break" when they produce excessive alerts that are not contextually relevant, leading to alert fatigue, where clinicians ignore up to 96% of AI-generated warnings.
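
One common way to reduce alert volume is to combine a risk threshold with a per-patient cooldown, so that a repeated low-value warning does not fire every few minutes. The sketch below shows that idea only; the `Alert` fields, the 0.8 threshold, and the 60-minute cooldown are assumptions for illustration, not clinically validated settings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Alert:
    patient_id: str
    kind: str
    risk_score: float  # model output in [0, 1]
    minute: int        # minutes since the start of the shift


def filter_alerts(alerts, min_risk=0.8, cooldown_minutes=60):
    """Drop low-risk alerts and repeats of the same alert inside the cooldown."""
    last_shown = {}
    shown = []
    for alert in sorted(alerts, key=lambda a: a.minute):
        if alert.risk_score < min_risk:
            continue
        key = (alert.patient_id, alert.kind)
        previous = last_shown.get(key)
        if previous is not None and alert.minute - previous < cooldown_minutes:
            continue
        last_shown[key] = alert.minute
        shown.append(alert)
    return shown


raw = [
    Alert("p1", "sepsis", 0.90, minute=0),
    Alert("p2", "sepsis", 0.50, minute=5),   # below threshold
    Alert("p1", "sepsis", 0.92, minute=20),  # repeat inside cooldown
    Alert("p1", "sepsis", 0.95, minute=70),  # cooldown elapsed
]
surfaced = filter_alerts(raw)
print([(a.patient_id, a.minute) for a in surfaced])
```

Real contextual relevance needs far more than a threshold (current orders, recent labs, care setting), but even this simple gate shows where suppression logic sits in the pipeline.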

Generative AI in Healthcare: Documentation, Coding, and Patient Communication

Generative AI (Gen AI) has seen rapid adoption in administrative functions. "Ambient scribes" use Large Language Models (LLMs) to listen to consultations and draft clinical notes, cutting time per note by roughly 50-70% in specific workflows. For example, one emergency medicine study reported a drop from 127.5 to 42.8 seconds per discharge note, a reduction of about 66%.

In patient communication, GPT-4 has demonstrated the ability to draft empathetic responses to patient inquiries that, in some studies, outperformed human physicians in quality metrics.

AI Tools in Healthcare Operations and Workflow Optimization

Operational AI optimizes resource allocation, such as bed management and staff scheduling, by predicting patient admission rates. In the revenue cycle, AI-powered tools automate medical billing and fraud detection, finding irregularities that indicate overbilling and potentially saving the industry billions.

The Limitations of AI in Healthcare Today (Integration, Data Silos, Governance)

The primary failure point for modern AI is the persistent existence of data silos. Approximately 80% of clinical data is unstructured, and a typical practice uses up to 14 different software applications. This creates massive fragmentation, where intelligence cannot move seamlessly between clinical, administrative, and financial systems without standardized interoperability.

Consequently, 85% of AI research projects fail to transition into clinical practice because they are developed in isolation from real-world workflows. Effective integration requires more than a RESTful API; it demands response times under three seconds and deep contextual relevance to prevent "alert fatigue."

Governance represents the third critical bottleneck. Most current systems operate as "black boxes," creating an accountability vacuum in which liability is diffused among developers, vendors, and practitioners. Without immutable audit trails and rigorous model versioning, organizations accumulate "Model Debt": suboptimal lifecycle practices that compound and erode system reliability over time.

For technology leaders, any AI tool that lacks clear error-attribution frameworks or deep workflow embedding remains a high-risk liability rather than a scalable asset.

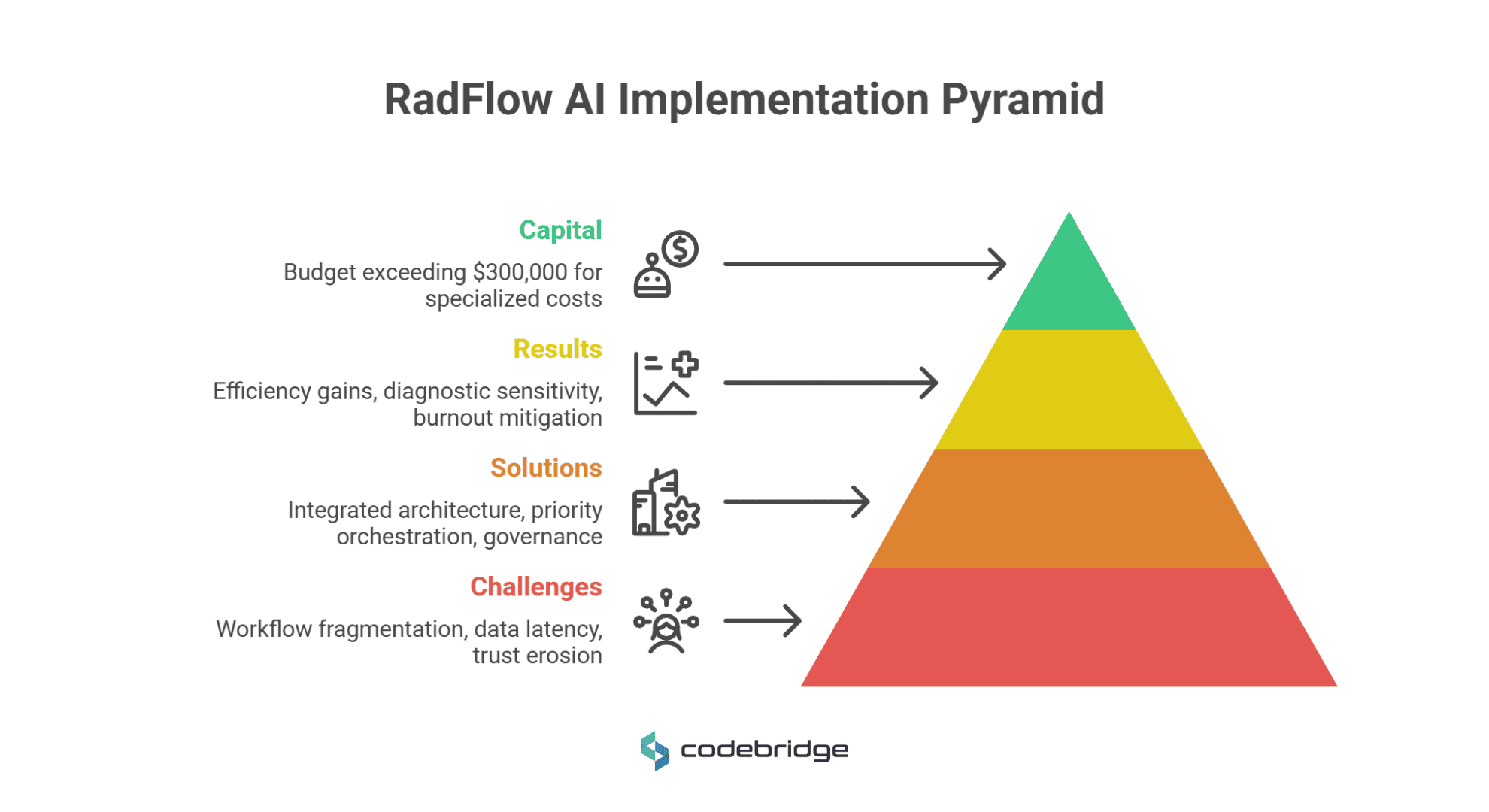

AI Use Cases in Healthcare: How AI Is Used in Radiology Workflows (RadFlow AI Case Study)

The case of RadFlow AI provides a blueprint for tech leaders on the structural requirements of moving from experimental algorithms to production-grade "Human-in-the-Loop" systems in Tier-1 diagnostic environments.

Case Description

A Tier-1 diagnostic imaging network, operating 12 centers across three states, faced a critical operational bottleneck: scan volumes were increasing by 22% year-over-year while radiologist headcount remained static. This imbalance led to accelerating clinician burnout and turnaround times that exceeded contractual SLAs by 15%.

The objective was to engineer a HIPAA-compliant, cloud-native diagnostic overlay that would integrate computer vision directly into the clinical workflow to augment, rather than replace, human expertise.

Challenges: Fragmentation, Latency, and Trust Erosion

The discovery phase identified three systemic failure points that prevented traditional AI tools from delivering enterprise value:

- Workflow Fragmentation: Radiologists were forced to switch contexts between the primary PACS viewer, a separate AI interface, and a dictation system. This fragmentation consumed roughly one-third of total reading time in non-interpretive tasks like re-orienting spatial context after window switches.

- Data Gravity and Latency: High-resolution CT studies often exceed several hundred megabytes. Legacy remote access infrastructure created rendering latencies that degraded scroll-through performance, particularly for rural sites on low-bandwidth connections.

- Trust Erosion: Previous AI pilots generated high volumes of false positives in post-surgical cases and on studies with motion artifacts. Consequently, clinicians developed a habit of dismissing AI findings entirely, rendering the tools a liability rather than an asset.

Solutions: Integrated Architectures and Governance

The team redesigned the system to integrate AI directly into the diagnostic workflow:

- High-Performance Serving Infrastructure: The system utilized a GPU-accelerated web imaging engine (built on WebGL 2.0) and progressive DICOM streaming to achieve sub-second navigation and initial render times under 400ms.

- Priority Orchestration: A real-time "AI Triage" module was implemented to rank studies by malignancy probability, automatically promoting high-risk cases to the top of the worklist.

- Production-Grade Governance: A "Clinical AI Oversight Module" was deployed to track agreement rates and model version history. Every AI-assisted decision was logged in immutable audit storage with clinician override status and timestamps to ensure regulatory traceability and liability protection.

- Compliance by Design: The platform was architected for FDA Software as a Medical Device (SaMD) Class II alignment, incorporating IEC 62304 traceability in the CI/CD pipeline.
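
The priority orchestration step in the list above can be pictured as a sort over the reading worklist. The sketch below is not the RadFlow implementation; the `Study` fields and the tie-breaking rule (earlier arrival first) are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Study:
    study_id: str
    malignancy_probability: float  # triage model output in [0, 1]
    arrival_order: int             # lower means it arrived earlier


def build_worklist(studies):
    """Highest malignancy probability first; ties go to the earlier arrival."""
    return sorted(
        studies,
        key=lambda s: (-s.malignancy_probability, s.arrival_order),
    )


incoming = [
    Study("CT-1001", 0.12, arrival_order=1),
    Study("CT-1002", 0.91, arrival_order=2),
    Study("CT-1003", 0.47, arrival_order=3),
    Study("CT-1004", 0.91, arrival_order=4),
]
worklist = build_worklist(incoming)
print([s.study_id for s in worklist])
```

A production worklist would also need an aging rule so that low-probability studies are not starved while high-risk cases keep arriving.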

Results: 38% Efficiency Gains with Precision

The production deployment achieved measurable clinical and operational impact:

- Quantifiable Efficiency: Average CT interpretation time decreased by 38%, from 15.2 minutes to 9.4 minutes per study.

- Diagnostic Sensitivity: The system maintained a 96% sensitivity for detecting sub-4mm nodules while reducing the false-positive rate from 4.1 to 0.4 per scan over nine months of active learning.

- Burnout Mitigation: By eliminating one-third of time spent on non-interpretive navigation, the system restored clinician focus to direct patient care.

- Capital Requirements: The budget for this Tier-1 production-grade solution exceeded $300,000, accounting for specialized costs such as NVIDIA Triton Inference Servers, auto-scaling GPU nodes, and rigorous HIPAA/SaMD change control.

From Conversational AI to Agentic AI in Healthcare

The healthcare technology industry is currently transitioning from reactive "Conversational AI" to proactive "Agentic AI" systems capable of reasoning and executing multi-step tasks independently. While less than 1% of enterprise software applications featured agentic capabilities in 2024, adoption is projected to surge to 33% by 2028.

Why Conversational AI in Healthcare Is Only the First Layer

Conversational AI focuses on natural language understanding to interpret intent, context, and sentiment in human dialogue. In healthcare, this technology is primarily reactive, waiting for a patient or clinician to provide a prompt before responding with information or guided self-service.

While effective for "Level 0" queries, such as checking a lab result or scheduling a basic appointment, it typically relies on human intervention or separate systems to carry out complex clinical tasks beyond the chat interface.

For technology leaders, the limitation of a conversational-only approach is its inability to solve workflow fragmentation. A chatbot that merely lists symptoms without the agency to trigger a clinical pathway remains a passive tool rather than an active participant in care.

Furthermore, research indicates that while two-thirds of patients are comfortable with AI freeing up a professional's time, only 29% currently trust AI to provide standalone health advice, suggesting that the value of AI lies in its ability to execute behind-the-scenes administrative and operational tasks rather than just mimicking human speech.

What Is Agentic AI in Healthcare?

Agentic AI refers to intelligent systems that can set goals, plan complex sequences, and take autonomous actions with minimal human oversight. Unlike traditional models that respond to discrete prompts, agentic AI is task-oriented and proactive; it reasons through a problem and carries it through to completion.

In a healthcare context, this means the system can autonomously summarize call conversations, foresee a member's specific needs based on longitudinal data, and guide advocates with the most relevant information during a live interaction.

The transition to agentic AI is driven by the need to scale output rather than just improve interfaces. Recent industry surveys indicate that approximately 85% of healthcare leaders plan to increase investment in agentic systems over the next three years, with 98% expecting at least 10% cost savings through these autonomous workflows. These systems operate within explicit guardrails and under human oversight to ensure that autonomous reasoning remains clinically safe and compliant.

Conversational AI vs Agentic AI

Agentic AI for Healthcare Platforms: Build vs. Integrate vs. Partner

For CTOs and founders, the decision to develop native agentic capabilities or integrate third-party agents is a critical architectural crossroad. The market is evolving into a mixed landscape where AI-native entrants, unhindered by legacy architectures, are driving transformative advances.

Simultaneously, established vendors are embedding AI into their platforms to avoid being sidelined.

What is emerging is an agentic mesh – a distributed ecosystem where orchestration becomes more important than application ownership. In this model:

- Interoperability is Mandatory: Agents must be able to share context and hand off tasks across disparate systems. This requires a move toward structured, semantically rich, and longitudinal data foundations like openEHR and HL7 FHIR.

- Data Segmentation is Critical: While agents may need access to EMR data, they must be architecturally blocked from sensitive, unrelated data like private email exchanges to prevent security breaches.

- New Identity Models: Security frameworks must evolve to support autonomous agents with their own secure identities and permissions, moving beyond static, human-centric access controls.
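
The data segmentation and identity points above can be combined in a deny-by-default check: each agent carries its own identity with an explicit set of scopes, and anything outside that set is blocked. The scope strings and class names below are hypothetical and do not correspond to any specific standard or vendor API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentIdentity:
    agent_id: str
    allowed_scopes: frozenset  # explicit allow-list; everything else is denied


def is_allowed(agent: AgentIdentity, scope: str) -> bool:
    """Deny by default: access requires an exact scope grant."""
    return scope in agent.allowed_scopes


def authorize(agent: AgentIdentity, scope: str) -> None:
    """Raise if the agent tries to reach data outside its segment."""
    if not is_allowed(agent, scope):
        raise PermissionError(f"{agent.agent_id} is not granted '{scope}'")


scheduling_agent = AgentIdentity(
    agent_id="scheduling-agent-01",
    allowed_scopes=frozenset({"emr.appointments.read", "emr.appointments.write"}),
)

authorize(scheduling_agent, "emr.appointments.read")   # permitted
print(is_allowed(scheduling_agent, "email.messages.read"))  # unrelated data
```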

Success for technology leaders depends less on the size of a single application and more on the ability of their systems to interoperate and reason collectively within this emerging ecosystem.

Cost of AI in Healthcare – Investment, ROI, and Long-Term Ownership

For technology leaders, the financial profile of AI is distinct from that of traditional software. Unlike monolithic systems with predictable depreciation, AI systems require continuous maintenance and retraining, which increases long-term costs. For that reason, understanding the total cost of ownership (TCO) requires evaluating the initial build against the realities of clinical integration and systemic decay.

The Real Cost of Implementing AI in Healthcare Systems

Initial capital requirements are dictated by the complexity of the clinical task and the implementation approach.

- Off-the-Shelf vs. Bespoke: Basic AI functionality integrated into existing apps typically starts at $40,000, while bespoke, built-from-scratch deep learning solutions frequently exceed $500,000. A Tier-1 production-grade radiology assistant, for instance, requires a budget exceeding $300,000 to account for high-performance inference servers and rigorous change controls.

- The Compliance Envelope: Regulatory alignment is a primary cost driver. HIPAA certifications range from $10,000 to over $150,000, depending on infrastructure complexity.

- Data Preparation: Before a single line of model code is written, organizations often face "Data Debt." Standard data cleaning and labeling, essential for achieving the 95-99% accuracy thresholds required for clinical tasks, start at $10,000 per project.

Despite these entry costs, the return on investment (ROI) is tangible. On average, healthcare organizations realize ROI within 14 months, generating $3.20 for every $1.00 invested.

How AI Reduces Costs in Healthcare – and When It Doesn’t

AI serves as a powerful cost deflator by targeting clinical inefficiencies and preventing high-cost adverse events.

- Diagnostic and Preventive Gains: AI can improve breast cancer detection rates by 20% and has demonstrated a 48% reduction in patient readmissions through personalized care plans.

Early detection of sepsis, where mortality increases 8% for every hour treatment is delayed, directly reduces the intensity and cost of ICU stays.

- The Productivity Paradox: In the near term, AI can paradoxically fuel inflation. Under the "fee-for-service" model, providers are rewarded for volume. Because AI increases productivity, more patients are seen, and more treatments are administered, which increases total system spending even if the unit cost of a single read remains stable.

Additionally, AI’s ability to recognize complex patterns may lead to "overdiagnosis," triggering interventions for minor abnormalities that might never have become clinically significant.

Technical Debt, AI, and the Hidden Cost of Poor Architecture

Fragile architectures create long-term financial liabilities. AI systems introduce four novel types of technical debt:

- Data Debt: This results from using non-representative or poorly curated datasets, leading to a "garbage in, garbage out" cycle that necessitates total model recalls.

- Model Debt: AI performance is not static; models typically degrade by 5-10% annually without regular retraining on new data. Neglecting this lifecycle management leads to "Model Debt," where suboptimal features and hyperparameter settings erode predictive accuracy.

- Infrastructure Debt: Rapid prototyping often leads to "pipeline jungles" – complex, brittle webs of data processing and "glue code" that are nearly impossible to debug or upgrade without significant re-engineering.

- Accessibility Debt: A specific risk for enterprise platforms, this occurs when a system becomes so complex that the learning curve dissuades clinicians from immediate use, effectively devaluing the entire investment.
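
To see why the 5-10% annual degradation described under Model Debt matters, the decay can be projected forward. The sketch below assumes the loss is relative and compounds each year, which is one reading of the figure; the 0.96 starting value and the 0.90 floor are illustrative numbers, not validated thresholds.

```python
def projected_performance(initial: float, annual_decay: float, years: int) -> float:
    """Metric after `years` without retraining, assuming compounding relative decay."""
    return initial * (1 - annual_decay) ** years


def years_until_below(initial: float, annual_decay: float, floor: float) -> int:
    """First whole year in which the projected metric falls below `floor`."""
    if not 0 < annual_decay < 1 or floor <= 0:
        raise ValueError("annual_decay must be in (0, 1) and floor positive")
    years = 0
    while projected_performance(initial, annual_decay, years) >= floor:
        years += 1
    return years


for decay in (0.05, 0.10):
    after_one = projected_performance(0.96, decay, 1)
    breach = years_until_below(0.96, decay, floor=0.90)
    print(f"decay={decay:.0%}: year 1 = {after_one:.3f}, below floor in year {breach}")
```

Under these assumptions a model that starts comfortably above its floor breaches it within one to two years, which is the practical argument for budgeting retraining as a recurring cost.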

For CTOs, the strategic imperative is to treat architecture as a foundation for "LIFEcare" – a proactive system that integrates prevention and personalized treatment into the clinical workflow, rather than an isolated gadget that accelerates broken processes.

Ethical Issues of AI in Healthcare and AI Governance at Scale

For technology leaders, moving from experimental AI to enterprise-wide clinical deployment requires transitioning from aspirational ethics to quantifiable, domain-specific pathways. The high-stakes nature of healthcare, where errors can directly impact human lives, requires a shift from treating ethics as a theoretical checklist to treating it as a core architectural and operational requirement.

Data Privacy, Bias, and Regulatory Constraints

Data privacy remains the most significant barrier to the collaborative data aggregation required for high-performing, generalized models. Regulatory frameworks like HIPAA and GDPR impose strict limitations on the secondary use of health data, often resulting in "Data Debt" where models are trained on narrow, non-representative datasets.

Algorithmic bias is a documented structural risk that arises when data proxies misrepresent clinical needs. A critical example is a widely used population health management algorithm that was found to systematically disadvantage Black patients.

The algorithm utilized medical expenditure as a proxy for health needs, effectively ignoring the socioeconomic disparities in spending and incorrectly concluding that Black patients were healthier than they actually were.

To mitigate such risks, founders must move beyond basic accuracy metrics toward "structural parity" and "fairness-aware learning," utilizing techniques like balanced sampling and fairness auditing to close performance gaps across diverse demographics.
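
A fairness audit of the kind mentioned above usually starts by breaking a headline metric down by demographic group. The sketch below compares sensitivity (true positive rate) across groups, a check often discussed under the name "equal opportunity". The record format and group labels are made up for illustration, and a real audit would add confidence intervals and further metrics.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """records: iterable of (group, y_true, y_pred) with 0/1 labels."""
    positives = defaultdict(int)
    true_positives = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            positives[group] += 1
            if y_pred == 1:
                true_positives[group] += 1
    # Groups with no positive cases are omitted rather than reported as 0.
    return {g: true_positives[g] / positives[g] for g in positives}


def max_gap(rates):
    """Largest difference in sensitivity between any two groups."""
    return max(rates.values()) - min(rates.values())


audit = [
    ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 1, 0),
    ("group_b", 1, 1), ("group_b", 1, 1), ("group_b", 1, 0), ("group_b", 1, 0),
    ("group_a", 0, 0), ("group_b", 0, 1),
]
rates = sensitivity_by_group(audit)
print(rates, "gap:", max_gap(rates))
```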

AI Governance in Healthcare: From Compliance to Continuous Oversight

AI governance in healthcare cannot function as a periodic compliance exercise; it must be ongoing and integrated into everyday operations.

Governance in AI must operate as a continuous control system embedded into the People, Process, Technology, and Operations (PPTO) framework. Without this integration, organizations accumulate algorithmic risk, such as fragmented pilots, unclear ownership, and unmonitored model drift, that eventually undermines clinical trust and regulatory standing.

The PPTO model replaces ad hoc procurement with a standardized lifecycle that manages risk while preserving deployment velocity.

- People: Establishing specialized AI governance committees that include clinicians, data scientists, and ethicists to define roles and ensure "Collective Responsibility."

- Process: Implementing decision points across the AI lifecycle, including centralized algorithm inventories and systematic decommissioning protocols.

- Technology: Building infrastructure that supports version control, performance monitoring, and the integration of Privacy-Enhancing Technologies (PETs) like Federated Learning and Differential Privacy.

- Operations: Aligning AI initiatives with budget planning and executive sponsorship to ensure long-term viability.

To make this oversight actionable at the executive level, we propose the Healthcare AI Trustworthiness Index (HAITI) as a unified readiness metric. HAITI aggregates five core pillars (fairness, explainability, privacy, accountability, and robustness) into a composite, context-aware score that translates technical validation into board-level language.

By setting explicit decision thresholds (e.g., HAITI >0.85 for high-risk triage, with no pillar below 0.75), CTOs and CMIOs can standardize go/no-go decisions, align stakeholders around quantified risk, and avoid subjective debates.

This approach transforms governance from a compliance obligation into a strategic control surface, a factor that determines when AI systems are sufficiently mature for semi-autonomous or autonomous clinical deployment.
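
The go/no-go rule for HAITI can be sketched in a few lines. The thresholds (composite above 0.85, no pillar below 0.75) come from the example in the text; the aggregation itself is an assumption, since an unweighted mean is used here only as a placeholder for whatever context-aware weighting an organization adopts.

```python
PILLARS = ("fairness", "explainability", "privacy", "accountability", "robustness")


def haiti_decision(scores, composite_threshold=0.85, pillar_floor=0.75):
    """Return (go, composite, weak_pillars) for a dict of pillar scores in [0, 1]."""
    missing = [p for p in PILLARS if p not in scores]
    if missing:
        raise ValueError(f"missing pillar scores: {missing}")
    # Assumption: unweighted mean; the article does not define the weighting.
    composite = sum(scores[p] for p in PILLARS) / len(PILLARS)
    weak = [p for p in PILLARS if scores[p] < pillar_floor]
    go = composite > composite_threshold and not weak
    return go, composite, weak


balanced = dict.fromkeys(PILLARS, 0.90)
lopsided = {**dict.fromkeys(PILLARS, 0.95), "fairness": 0.70}

print(haiti_decision(balanced))
print(haiti_decision(lopsided))
```

The second case shows why the per-pillar floor matters: a strong average can still hide a single pillar that should block deployment.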

The Biggest Challenges of AI in Healthcare for CTOs and Founders

The main strategic challenge is "accountability". When an algorithm makes a clinical error, liability is often dangerously diffused between the software developer, the medical institution, and the individual clinician. This accountability gap creates a significant hurdle for adoption, as practitioners fear being held responsible for the decisions of an opaque "black-box" system.

To address this, CTOs must engineer systems with Human-in-the-loop (HITL) controls and Immutable Traceability Logs. HITL architecture ensures that AI supplements rather than replaces human expertise, keeping clinicians as the ultimate authority for high-stakes decisions.

Traceability logs must record every AI-assisted decision, clinician override status, and the specific model version used. This audit trail is inseparable from the technical architecture, serving as the primary mechanism for error attribution and legal defense in production environments.
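
One way to make such a log tamper-evident is to chain entries by hash, so that changing any earlier record invalidates everything after it. The sketch below uses only Python's standard `hashlib` and `json` modules; the class and field names are illustrative, and real immutability would additionally require write-once storage and access controls outside the application.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64


def _digest(body: dict) -> str:
    """SHA-256 over a canonical JSON encoding of the entry body."""
    canonical = json.dumps(body, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


class TraceabilityLog:
    def __init__(self) -> None:
        self._entries = []

    def append(self, *, study_id, model_version, ai_finding,
               clinician_override, timestamp) -> dict:
        body = {
            "study_id": study_id,
            "model_version": model_version,
            "ai_finding": ai_finding,
            "clinician_override": clinician_override,
            "timestamp": timestamp,
            "prev_hash": self._entries[-1]["hash"] if self._entries else GENESIS_HASH,
        }
        entry = {**body, "hash": _digest(body)}
        self._entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and check each link back to the genesis value."""
        expected_prev = GENESIS_HASH
        for entry in self._entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != expected_prev or _digest(body) != entry["hash"]:
                return False
            expected_prev = entry["hash"]
        return True


log = TraceabilityLog()
log.append(study_id="CT-1002", model_version="nodule-det-2.3.1",
           ai_finding="nodule flagged", clinician_override=False,
           timestamp="2025-01-15T10:42:00Z")
log.append(study_id="CT-1003", model_version="nodule-det-2.3.1",
           ai_finding="no finding", clinician_override=True,
           timestamp="2025-01-15T10:51:00Z")
print(log.verify())
```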

For founders, the goal is to build systems where transparency and accountability are "baked-in" from day one, transforming AI from a potential liability into a trusted clinical asset.

The Future of AI in Healthcare

Healthcare is no longer piloting AI – it is restructuring around it. With the market projected to reach $505.59 billion by 2033, the real question for CEOs and CTOs is not adoption, but architectural control.

AI features layered onto legacy systems will stall. Competitive advantage will come from cloud-native, interoperable infrastructure deeply integrated into clinical data pipelines. The shift from conversational tools to agentic systems capable of executing multi-step tasks signals a move from assistance to operational autonomy, where ROI is calculated in measurable reductions in readmissions, labor friction, and decision latency.

Healthcare organizations must decide whether to rebuild systems around AI or simply layer tools onto legacy software. Organizations that treat AI as core infrastructure, governed, budgeted, and embedded, will define the next decade of care delivery. Those who treat it as an add-on will accumulate complexity without leverage.
