A tech analyst's truth bomb, making the rounds on Reddit, stopped me cold: investors keep thinking the AI trade is crowded when only 3% of US companies are meaningfully using AI, even though we're three years into what he estimates is an eight-to-ten-year buildout. We're not late to the party. Most organizations haven't even found the venue.

I've watched this pattern play out across dozens of conversations with technology leaders this year. The pain is consistent: teams stuck in perpetual evaluation, budgets allocated to "AI initiatives" that never graduate from proof-of-concept, and a growing gap between the organizations that figured out how to operationalize AI and everyone else still running demos.

KEY TAKEAWAYS

The AI adoption gap is widening: only 3% of companies have moved past pilots despite massive budget increases.

Infrastructure spending is exploding: data center systems spending is growing at 15.5% annually, signaling serious enterprise commitment.

The shift from experimentation to execution requires different organizational capabilities.

Successful teams share a pattern: they treat AI as infrastructure, not innovation theater.

The Systemic Problem Nobody Wants to Acknowledge

Here's what the numbers reveal: global IT spending is projected to hit $5.74 trillion in 2025, with 9.3% year-over-year growth. AI spending specifically is growing at a 29% compound annual growth rate through 2028. The money is flowing. So where are the results?

The disconnect lies in where that money actually goes. Data center systems spending is growing at 15.5% annually, the highest rate among all IT markets. Server sales alone are projected to grow roughly 2.5x, from $134 billion in 2023 to $332 billion by 2028. Organizations are buying the hardware. They're signing the cloud contracts. But they're not shipping AI-powered products.
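As a quick sanity check on the server figures, the multiplier and the implied compound annual growth rate can be computed directly from the two data points quoted ($134 billion in 2023, $332 billion in 2028). This is a back-of-the-envelope sketch that assumes smooth compounding between those endpoints; actual year-to-year sales will not follow a constant rate.

```python
def growth_multiplier(start: float, end: float) -> float:
    """How many times larger the end value is than the start value."""
    return end / start


def implied_cagr(start: float, end: float, years: int) -> float:
    """Constant annual growth rate that turns `start` into `end` over `years` years."""
    return (end / start) ** (1 / years) - 1


# Server sales figures quoted in the article, in billions of US dollars.
SERVER_SALES_2023 = 134.0
SERVER_SALES_2028 = 332.0

multiplier = growth_multiplier(SERVER_SALES_2023, SERVER_SALES_2028)
cagr = implied_cagr(SERVER_SALES_2023, SERVER_SALES_2028, years=2028 - 2023)

print(f"{multiplier:.2f}x over five years")  # 2.48x over five years
print(f"implied CAGR: {cagr:.1%}")           # implied CAGR: 19.9%
```

Under that assumption, server sales would need to compound at roughly 20% a year, faster than the 15.5% rate quoted for data center systems as a whole.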

The infrastructure is being built. The production deployments aren't following.

What Separates the 3% From Everyone Else

The Reddit thread that surfaced this statistic had a crucial insight buried in the discussion: corporations are now budgeting instead of experimenting. That shift, from discretionary innovation budget to line-item infrastructure spend, marks the transition from hype to real deployment.

"The biggest mistake investors continue to make is that they feel the AI trade is already crowded. In fact, it's the complete opposite."

Source: Reddit r/NvidiaStock discussion of Dan Ives's analysis

The organizations in that 3% aren't smarter. They're not better funded. They made a structural decision early: treat AI like you treated cloud migration a decade ago. Not as a science project, but as an infrastructure transformation that requires dedicated teams, clear ownership, and measurable production metrics.

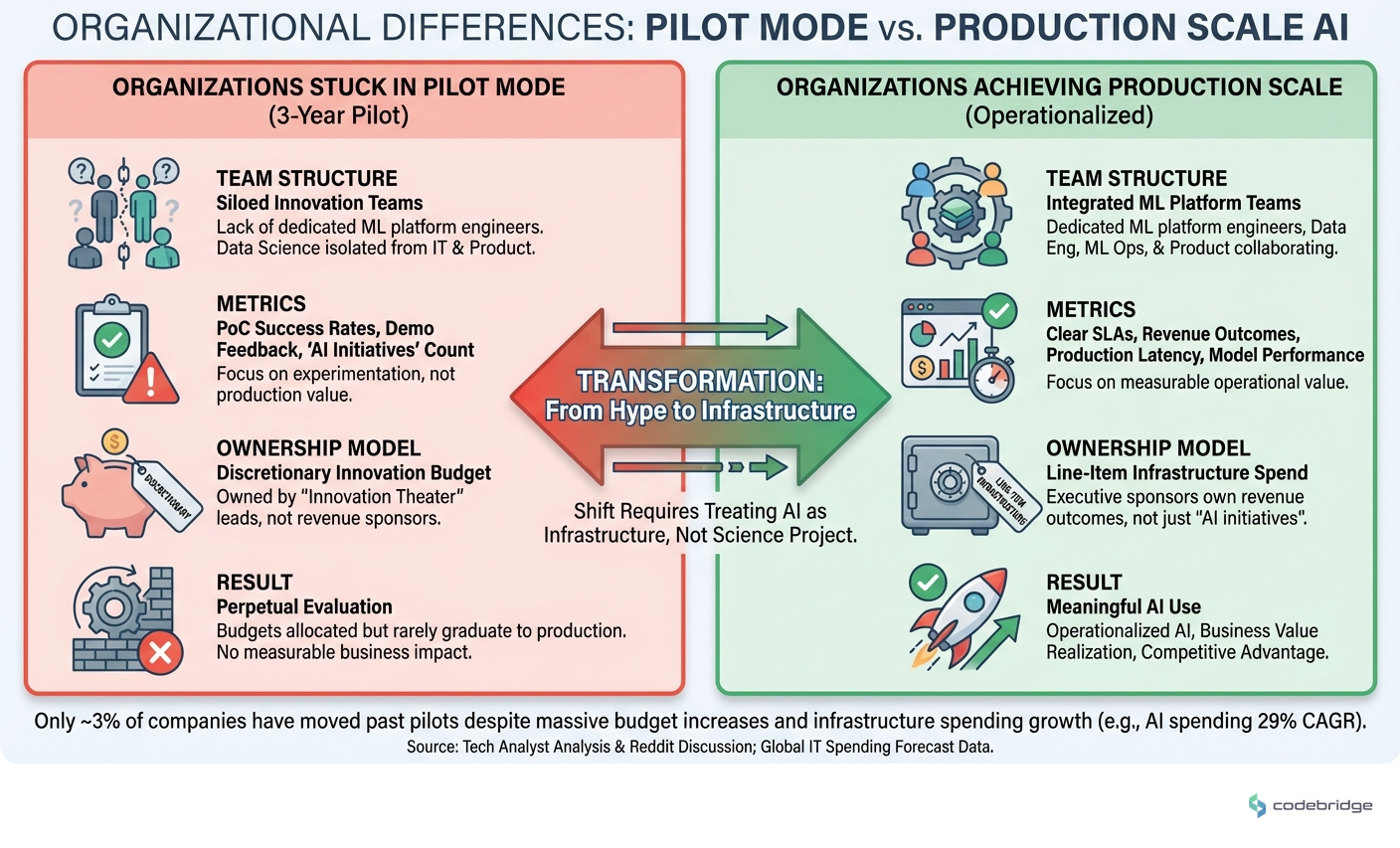

The comparison above illustrates the organizational differences between companies stuck in pilot mode and those achieving production scale.

Notice the pattern: production-scale teams have dedicated ML platform engineers, clear SLAs for model performance, and, critically, executive sponsors who own revenue outcomes, not just "AI initiatives."

The Infrastructure Reality Check

The software market is growing at 14% annually, hitting $1.24 trillion in 2025. IT services, the largest single technology market, reached $1.74 trillion. These numbers tell us something important: organizations are spending heavily on the services and software layers that sit on top of AI infrastructure.

But here's the uncomfortable truth. Most of that spend is going toward enabling AI, not deploying it. Data pipelines. MLOps tooling. Governance frameworks. Security layers. All necessary, but all pre-production.

The gap between "AI-ready infrastructure" and "AI in production" is where most organizations lose 18-24 months. Building capability isn't the same as shipping value.

Gartner forecasts IT spending will cross $7 trillion by 2028, adding roughly $500 billion annually. The trajectory is clear. The question is whether your organization will be deploying production AI systems or still running proofs-of-concept when that $7 trillion threshold hits.
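To see how the $7 trillion figure relates to today's numbers, you can compound the 2025 total forward. The sketch below assumes, purely for illustration, that the 9.3% growth rate quoted earlier holds every year through 2028; Gartner's actual forecast may use different year-by-year rates.

```python
def project(base: float, rate: float, start_year: int, end_year: int) -> dict[int, float]:
    """Compound `base` forward at a constant annual `rate` from start_year to end_year."""
    values = {start_year: base}
    for year in range(start_year + 1, end_year + 1):
        values[year] = values[year - 1] * (1 + rate)
    return values


# Assumption: the 2025 total ($5.74T) grows at a constant 9.3% per year.
spend = project(base=5.74, rate=0.093, start_year=2025, end_year=2028)

for year, total in spend.items():
    # Increase over the prior year, in billions (zero for the base year).
    added = total - spend.get(year - 1, total)
    print(f"{year}: ${total:.2f}T (+${added * 1000:.0f}B)")

first_year_over_7t = min(y for y, v in spend.items() if v >= 7.0)
print("crosses $7T in", first_year_over_7t)
```

Under that constant-rate assumption, annual increases run from roughly $530 billion to $640 billion, and spending crosses $7 trillion in 2028, which is broadly in line with the forecast.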

The Security Tax Nobody Budgeted For

One factor consistently blindsides AI initiatives: security requirements. The global cybersecurity market is projected to reach $200 billion by 2028. That growth isn't happening in a vacuum; it's directly tied to AI deployment complexity.

Every production AI system introduces new attack surfaces. Model poisoning. Prompt injection. Training data exfiltration. A security review that takes two weeks for a traditional application can stretch to two months for an AI system, if your security team even has the expertise to evaluate it.

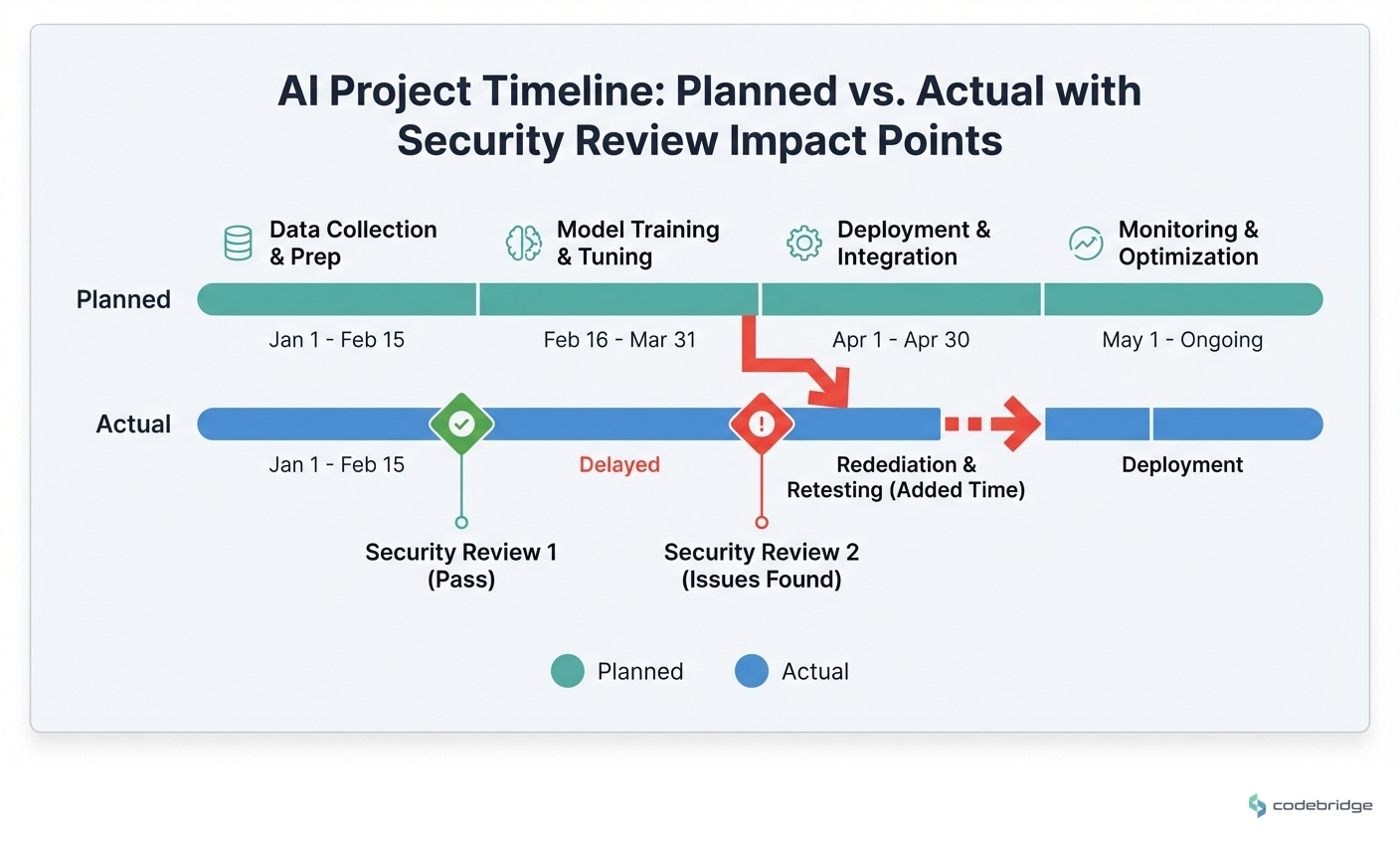

The timeline above shows how security requirements typically extend AI deployment schedules.

Organizations that account for this up front, building security review into sprint zero rather than sprint ten, compress their overall timelines.

A Framework for Moving Past Pilot Purgatory

Based on patterns from organizations that successfully transitioned from experimentation to production, here's what actually works:

1. Kill the Innovation Theater

Stop running AI pilots that exist to demonstrate capability rather than deliver value. Every pilot should have a defined path to production with specific criteria for promotion or termination. If you can't articulate what "success" looks like in production terms, you're not running a pilot; you're running a demo.
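To make "specific criteria for promotion or termination" concrete, here is a minimal sketch of a promote/terminate/continue gate. The metric names and thresholds (accuracy, p95 latency, monthly business value, a decision date) are hypothetical placeholders. Substitute whatever production terms your pilot is actually judged on.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class PilotCriteria:
    """Production-terms bar a pilot must clear (all fields are hypothetical examples)."""
    min_accuracy: float           # e.g. accuracy on a held-out evaluation set
    max_p95_latency_ms: float     # latency the production SLA would demand
    min_monthly_value_usd: float  # revenue gained or cost saved per month
    decision_date: date           # promote or terminate by this date


@dataclass(frozen=True)
class PilotResults:
    accuracy: float
    p95_latency_ms: float
    monthly_value_usd: float


def decide(criteria: PilotCriteria, results: PilotResults, today: date) -> str:
    """Return 'promote', 'terminate', or 'continue' for a pilot."""
    meets_bar = (
        results.accuracy >= criteria.min_accuracy
        and results.p95_latency_ms <= criteria.max_p95_latency_ms
        and results.monthly_value_usd >= criteria.min_monthly_value_usd
    )
    if meets_bar:
        return "promote"
    # Missing the bar after the decision date ends the pilot instead of extending it.
    if today >= criteria.decision_date:
        return "terminate"
    return "continue"


criteria = PilotCriteria(0.90, 300.0, 50_000.0, date(2025, 9, 30))
results = PilotResults(accuracy=0.93, p95_latency_ms=250.0, monthly_value_usd=20_000.0)
print(decide(criteria, results, today=date(2025, 10, 1)))  # terminate
```

The design choice that matters is the decision date: without one, a pilot that never clears the bar drifts into permanent "evaluation."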

2. Treat MLOps Like DevOps in 2012

A decade ago, organizations that invested in CI/CD pipelines and infrastructure-as-code pulled ahead. The same dynamic is playing out now with ML pipelines, model versioning, and automated retraining. This isn't optional tooling; it's the foundation that makes production AI possible.

3. Assign Revenue Ownership, Not Project Ownership

The 3% have something in common: someone's compensation is tied to AI-driven revenue or cost savings. Not "successful pilot completion." Not "stakeholder satisfaction scores." Actual business outcomes measured in dollars.

4. Budget for the Security Sprint

Add 30% to your timeline estimates specifically for security review, compliance documentation, and governance approval. This isn't pessimism; it's pattern recognition from organizations that shipped.

5. Build the Feedback Loop Before You Need It

Production AI systems degrade. Models drift. User behavior changes. The organizations that sustain value have monitoring, alerting, and retraining pipelines in place before they hit production, not as a "phase two" afterthought.
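One common way to catch drift is to compare the distribution of a model input or score in production against a baseline captured at launch. The sketch below uses the Population Stability Index (PSI), a technique chosen here for illustration rather than one the article prescribes, with hand-picked bin edges. The 0.2 alert threshold is a widely used rule of thumb, not a universal standard.

```python
import math
from typing import Sequence


def bin_fractions(values: Sequence[float], edges: Sequence[float]) -> list[float]:
    """Share of values in each bin [edges[i], edges[i+1]); the last bin also
    includes its upper edge. Values outside the edges are ignored."""
    counts = [0] * (len(edges) - 1)
    last = len(edges) - 2
    for v in values:
        for i in range(len(edges) - 1):
            lo, hi = edges[i], edges[i + 1]
            if lo <= v < hi or (i == last and v == hi):
                counts[i] += 1
                break
    total = sum(counts)
    return [c / total if total else 0.0 for c in counts]


def psi(baseline: Sequence[float], live: Sequence[float],
        edges: Sequence[float], eps: float = 1e-6) -> float:
    """Population Stability Index between a baseline sample and a live sample."""
    score = 0.0
    for base_frac, live_frac in zip(bin_fractions(baseline, edges),
                                    bin_fractions(live, edges)):
        # Floor empty bins at eps so the log term stays finite.
        base_frac, live_frac = max(base_frac, eps), max(live_frac, eps)
        score += (live_frac - base_frac) * math.log(live_frac / base_frac)
    return score


def drift_alert(baseline: Sequence[float], live: Sequence[float],
                edges: Sequence[float], threshold: float = 0.2) -> bool:
    """Flag drift when PSI exceeds the (rule-of-thumb) threshold."""
    return psi(baseline, live, edges) > threshold


edges = [0.0, 0.25, 0.5, 0.75, 1.0]
baseline = [0.1, 0.3, 0.6, 0.9]
print(drift_alert(baseline, baseline, edges))                  # False
print(drift_alert(baseline, [0.8, 0.85, 0.9, 0.95], edges))    # True
```

In a real pipeline, a check like this would run on a schedule against fresh production data, and a `True` result would page someone or kick off retraining. That wiring is what has to exist before launch.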

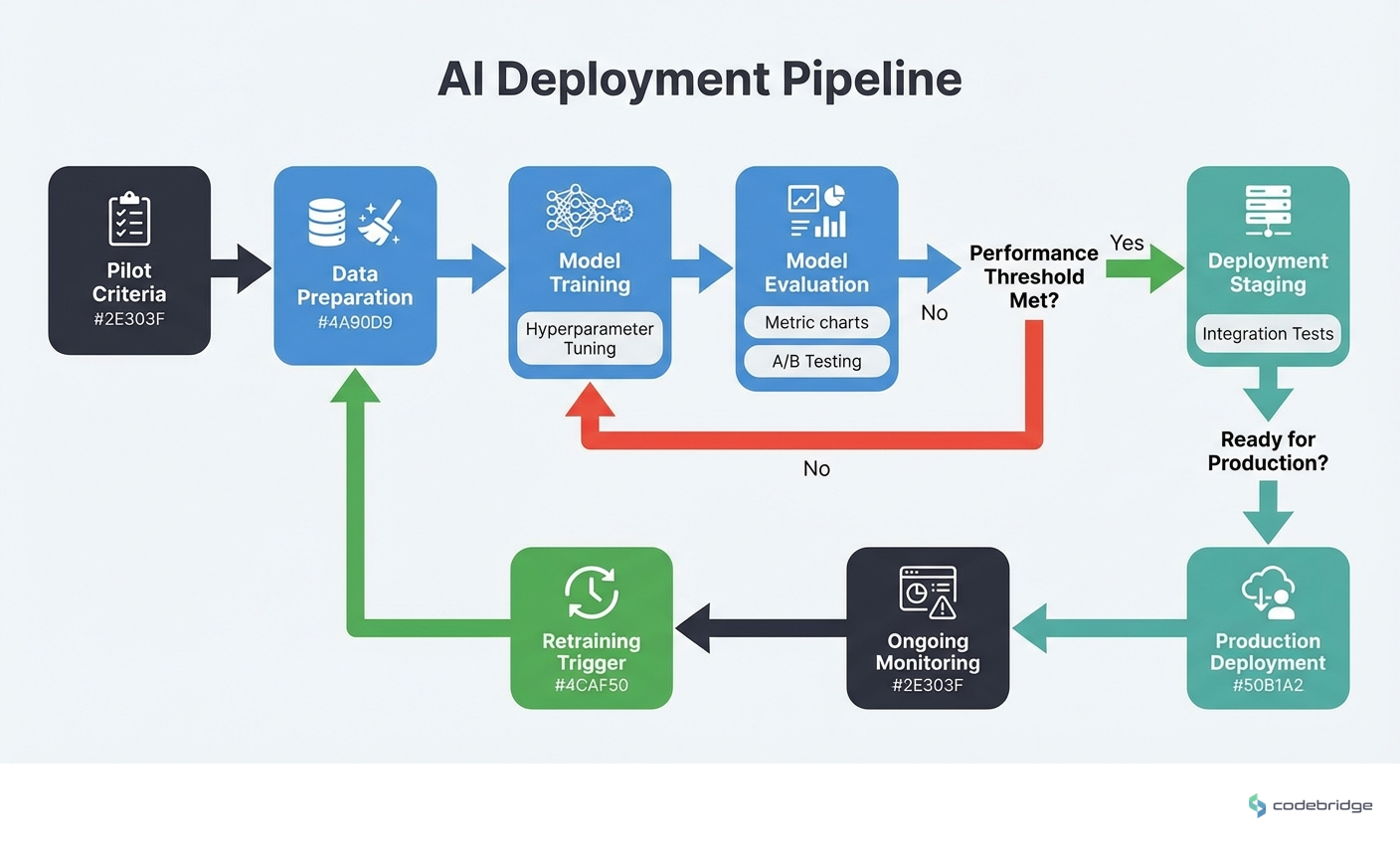

The process flow above shows how successful teams structure their AI deployment pipeline.

The Rare Request Problem

Here's something I've noticed in conversations with technology leaders: many express "no immediate needs" but acknowledge potential for "future, rare requests." This language is a tell. It usually means the organization knows it needs to move on AI but hasn't created the internal urgency to prioritize it.

The challenge with "rare requests" is that AI capabilities aren't something you can spin up on demand. The infrastructure, the expertise, the organizational muscle memory: these take time to build. By the time the "rare request" becomes urgent, you're 18 months behind competitors who started building when they didn't have immediate needs either.

The best time to build AI capability was two years ago. The second-best time is now, before the "rare request" becomes an urgent competitive threat.

What Happens Next

Remember that 3% figure? By 2028, when IT spending crosses $7 trillion, that percentage will have grown, but not uniformly. The organizations building production AI systems now will compound their advantage. The ones still running pilots will find themselves competing against AI-native capabilities they can't match.

The analyst was right: this trade isn't crowded. We're early in an eight-to-ten-year buildout. The question isn't whether AI will transform your industry; it's whether you'll be the one doing the transforming or the one being disrupted.

The infrastructure spending is happening. The budgets are shifting from experimental to operational. The only variable left is execution.

DIAGNOSTIC: ARE YOU STUCK IN PILOT PURGATORY?

Your AI initiatives have been in "pilot" or "evaluation" phase for more than 12 months

No one's compensation is tied to AI-driven business outcomes

Your ML infrastructure is separate from your production deployment pipeline

Security review happens after development, not during architecture

You can't articulate specific criteria for promoting a pilot to production

Your AI budget is categorized as "innovation" rather than "infrastructure"

Model monitoring and retraining are planned for "phase two"

You describe AI needs as "potential future requests" rather than current priorities

Checked more than three boxes?

Let's talk about what it takes to move from pilot to production.
