AWS bills can spiral out of control faster than application traffic during Black Friday. Yet the knee-jerk reaction, cutting resources indiscriminately, often leads to performance degradation, customer churn, and engineering teams scrambling to restore service levels. The real challenge isn't just reducing costs; it's optimizing your AWS spend while maintaining the reliability and performance your users depend on.

Our DevOps services team specializes in performance-first cloud optimization, helping engineering teams achieve 20-50% cost reductions without compromising reliability or user experience.

It is important to note, AWS held 32% of the global Infrastructure-as-a-Service market, with the total market valued at approximately $80 billion, according to Statista.

This guide presents a performance-first approach to AWS cost optimization, grounded in Service Level Objectives (SLOs), error budgets, and proven engineering practices. You'll learn how to systematically reduce your AWS bill through data-driven decisions, structural improvements, and governance practices that prevent cost creep from returning.

Spend Follows Architecture (Not the Other Way Around)

The most effective AWS cost optimizations aren't about finding cheaper services, they're about building systems that naturally consume fewer resources while meeting performance requirements. This architectural approach ensures that cost savings compound over time rather than requiring constant vigilance.

Tie Savings to SLOs (Latency, Availability) and Error Budgets

Before touching any AWS resource, establish clear Service Level Objectives for your critical user journeys.

For example, if your API must respond within 200ms for 99.9% of requests, this SLO becomes your guardrail during optimization efforts. Any cost-saving measure that risks violating this SLO should be rejected or require additional safeguards.

Error budgets provide the mathematical framework for balancing cost and reliability. If you have a 99.9% availability SLO, you can "spend" up to 43.8 minutes of downtime per month on optimization experiments, infrastructure changes, or acceptable service degradation. This budget allows you to take calculated risks with cost optimizations while maintaining customer trust.

Cost Per Request/User as North-Star Metric; Unit Economics Examples

Traditional cost tracking focuses on monthly AWS bills, but unit economics reveal optimization opportunities that aggregate metrics miss. Track metrics like:

- Cost per API request: $0.002 per request might seem negligible until you realize it's $20,000 for 10 million monthly requests

- Cost per active user: A SaaS platform spending $2.50 per monthly active user needs to ensure customer lifetime value justifies this infrastructure investment

- Cost per transaction: E-commerce platforms should track infrastructure costs against transaction volume to identify scaling inefficiencies

These unit metrics help engineering teams understand the direct relationship between code efficiency, architecture decisions, and business economics. A database query optimization that reduces RDS CPU utilization by 20% translates directly to lower cost per request and improved margins.

What Not to Cut (Observability, Backups, Security)

Cost optimization must never compromise three foundational areas:

Observability infrastructure provides the visibility needed to optimize effectively. Cutting monitoring costs to save a few hundred dollars monthly can hide performance regressions that cost thousands in lost revenue. Invest in comprehensive logging, metrics, and tracing, the ROI through faster incident resolution and optimization insights far exceeds the infrastructure costs.

Backup and disaster recovery systems represent insurance against catastrophic business loss. The cost of maintaining automated backups, cross-region replication, and recovery testing pales compared to the potential impact of data loss or extended downtime.

Security controls, including encryption, access management, and compliance monitoring, are non-negotiable. Security breaches can result in regulatory fines, customer churn, and brand damage that dwarf any potential infrastructure savings.

Measurement First, Your AWS Cost & Performance Baseline

Effective optimization requires understanding your current state with precise measurement. Without baseline metrics, you're optimizing blind, unable to validate improvements or detect regressions.

Cost Explorer & Cost and Usage Report (CUR), Tagging, Cost Allocation

AWS Cost Explorer provides high-level cost visibility, but the Cost and Usage Report (CUR) delivers the granular data needed for optimization. CUR exports hourly usage data to S3, enabling detailed analysis of spending patterns, resource utilization, and cost allocation across teams and services.

Implement a comprehensive tagging strategy before beginning optimization efforts:

- Environment tags: Production, staging, development, testing

- Team/owner tags: Which engineering team owns this resource

- Service tags: Microservice or application component

- Cost center tags: Business unit or project for chargeback

- Lifecycle tags: Temporary, permanent, experimental

Consistent tagging enables accurate cost allocation and helps identify optimization opportunities. A staging environment consuming 40% of your RDS budget indicates potential over-provisioning or forgotten resources.

AWS Budgets & Anomaly Detection; Alerts That Engineers Actually Read

Generic budget alerts that email the entire engineering team about monthly overruns create alert fatigue and get ignored. Design actionable alerts that reach the right people with enough context to take immediate action:

- Service-specific budgets: Alert the team responsible for the Lambda functions when compute costs exceed 110% of the monthly target

- Anomaly detection thresholds: Configure AWS Cost Anomaly Detection to alert on 25% day-over-day increases in specific service categories

- Usage-based alerts: Trigger alerts when data transfer costs suggest a misconfigured application is generating excessive cross-region traffic

Integrate cost alerts into existing engineering workflows through Slack channels, PagerDuty, or ticketing systems where teams already collaborate on operational issues.

Compute Optimizer + Rightsizing Playbook (Safe Rollout)

AWS Compute Optimizer analyzes resource utilization patterns and recommends rightsizing opportunities, but raw recommendations need careful evaluation and staged rollouts. Develop a systematic approach:

Assessment phase: Review Compute Optimizer recommendations for instances with consistent low utilization over 14+ days. Validate recommendations against application performance requirements and peak usage patterns.

Testing phase: Implement changes in non-production environments first, running load tests to verify performance remains acceptable with smaller instance types or different configurations.

Gradual rollout: For production changes, use blue-green deployments or rolling updates to minimize risk. Monitor key performance indicators continuously during and after the transition.

Rollback strategy: Maintain the ability to quickly return to previous instance configurations if performance degrades. Document rollback procedures and ensure monitoring systems can detect performance issues within minutes.

.png)

AWS Cost Reduction Strategies (Quick Wins vs Structural Changes)

Not all cost optimizations require the same effort or provide the same long-term value. Categorizing opportunities helps prioritize efforts and set appropriate expectations for engineering time investment. Quick wins provide immediate cost relief and build momentum for larger optimization projects. Structural changes require significant engineering investment but deliver sustainable, long-term savings that compound as your application scales.

Compute & Scaling, The Biggest Lever

Compute resources typically represent 40-60% of AWS costs, making them the highest-impact area for optimization efforts. However, compute optimizations also carry the highest risk of performance degradation if implemented carelessly.

EC2 Rightsizing Cadence (CPU/Mem/IO; Burstable Pitfalls)

Establish a monthly rightsizing review process that goes beyond simple CPU utilization metrics. Analyze memory utilization, disk I/O patterns, and network throughput to identify optimization opportunities:

CPU utilization analysis: Look for instances consistently running below 20% CPU utilization, but verify that low utilization isn't masking I/O bottlenecks or memory constraints that would be exposed with smaller instance types.

Memory optimization: Memory-optimized instances (R5, R6i) cost significantly more than general-purpose instances. Applications using less than 50% of available memory might benefit from compute-optimized instances with higher CPU-to-memory ratios.

Burstable instance pitfalls: T3/T4g instances offer attractive baseline pricing, but applications that consistently exceed baseline performance consume CPU credits and may experience throttling. Monitor CPU credit balance and burst utilization to identify workloads better suited for fixed-performance instances.

Savings Plans vs Reserved Instances (Coverage Targets, Flexibility)

Both commitment-based pricing models offer significant discounts, up to 72% off On-Demand rates, and Spot Instances can deliver up to 90% savings for fault-tolerant workloads (AWS). Choosing the right model and coverage level is critical to avoid locking into inflexible or inadequate capacity reservations.

Coverage targets: Aim for 60-80% coverage of your baseline compute spend through Savings Plans, leaving 20-40% as On-Demand capacity for scaling and new workloads. This balance provides substantial savings while maintaining flexibility for growth and experimentation.

Spot Instances Best Practices (Interruption Handling, Diversified Pools)

Spot Instances can reduce compute costs by 50-90%, but only for workloads that can gracefully handle interruptions. Successful Spot adoption requires architectural changes and operational discipline:

Diversification strategy: Spread Spot requests across multiple instance types, availability zones, and Spot pools to reduce the likelihood of simultaneous interruptions affecting your entire workload.

Interruption handling: Implement graceful shutdown procedures that can complete within the 2-minute interruption notice period. Use Spot interruption warnings to drain connections, complete in-flight work, and persist state before termination.

Fault-tolerant architectures: Design applications that can lose individual instances without service degradation. Use auto-scaling groups, load balancers, and stateless application design to maintain availability during Spot interruptions.

Workload suitability: Spot Instances work best for batch processing, CI/CD workloads, fault-tolerant web applications, and development environments. Avoid using Spot for databases, single-instance applications, or workloads requiring guaranteed availability.

Graviton Migration ROI & Compatibility Checklist

AWS Graviton processors offer 20-40% better price performance compared to x86 instances, but migration requires careful planning and testing to ensure compatibility:

Architecture compatibility: Most modern applications running on Linux support ARM64, but verify that all dependencies, container images, and third-party libraries provide ARM64 versions.

Performance validation: Graviton processors excel at multi-threaded workloads but may show different performance characteristics for single-threaded or specialized computational tasks. Conduct thorough performance testing before migration.

Migration checklist:

- Audit all application dependencies for ARM64 support

- Update CI/CD pipelines to build multi-architecture container images

- Test database performance and connection pooling behavior

- Validate monitoring and observability tool compatibility

- Plan gradual migration starting with non-critical workloads

Containers & Serverless

Container and serverless platforms introduce additional optimization opportunities and complexities compared to traditional EC2 deployments. These managed services can reduce operational overhead while providing fine-grained cost control.

EKS Cost Optimization (Node Groups, BinPacking, Karpenter, Cluster Autoscaling)

Kubernetes workloads present unique cost optimization challenges due to resource requests, limits, and scheduling inefficiencies. Effective EKS optimization requires both cluster-level and application-level improvements:

Node group strategy: Use multiple node groups with different instance types to optimize for diverse workload requirements. CPU-intensive applications benefit from compute-optimized instances, while memory-intensive workloads need memory-optimized nodes. Mix Spot and On-Demand instances within node groups based on workload fault tolerance.

Resource request optimization: Many applications set resource requests based on peak requirements rather than typical usage, leading to poor cluster utilization. Review CPU and memory requests against actual usage patterns, adjusting requests to improve bin packing while maintaining performance headroom.

Karpenter adoption: Replace Cluster Autoscaler with Karpenter for faster scaling and better instance selection. Karpenter can provision optimally-sized nodes within seconds and automatically selects appropriate instance types based on pending pod requirements.

Vertical Pod Autoscaling: Implement VPA to automatically adjust resource requests based on historical usage patterns, improving cluster utilization without manual intervention.

Fargate vs EC2 for Workloads (Cost/Performance Threshold)

Fargate eliminates node management overhead but costs 2-4x more than equivalent EC2 capacity. The cost-performance threshold depends on utilization patterns and operational complexity:

Fargate advantages: No node management, automatic scaling, simplified security model, and pay-per-task pricing work well for event-driven workloads, batch jobs, and applications with unpredictable traffic patterns.

EC2 advantages: Lower cost per CPU/memory hour, better resource utilization through bin packing, support for specialized instance types, and more control over the underlying infrastructure benefit steady-state workloads with predictable resource requirements.

Decision framework: Use Fargate for workloads with utilization below 30% or requiring rapid scaling from zero. Use EC2-based node groups for steady-state applications with consistent resource requirements and utilization above 50%.

Lambda Cost Optimization (Memory Tuning, Cold Start Strategies, Concurrency Limits)

Lambda pricing is based on execution duration and allocated memory, making memory optimization crucial for cost control. However, Lambda allocates CPU power proportionally to memory, creating complex optimization trade-offs:

Memory tuning methodology: Test function performance across memory allocations from 128MB to 3008MB, measuring both execution duration and cost per invocation. The optimal memory setting minimizes total cost (duration × memory allocation) while meeting performance requirements.

Cold start optimization: Minimize cold start impact through provisioned concurrency for latency-sensitive functions, connection pooling, and avoiding heavyweight dependencies in initialization code. Consider container-based alternatives for functions with consistently high cold start penalties.

Concurrency management: Set appropriate reserved and provisioned concurrency limits to prevent runaway costs from unexpected traffic spikes or infinite loops. Monitor concurrent executions and throttling metrics to optimize concurrency settings.

Storage & Data Lifecycle

Storage costs grow steadily over time as applications generate logs, backups, and user data. Implementing intelligent lifecycle management and choosing appropriate storage classes can significantly reduce long-term storage expenses.

S3 Lifecycle Policies & S3 Intelligent-Tiering (Logs, Backups, Data Lake)

S3 storage costs can be reduced by 30-70% through appropriate lifecycle policies and storage class selection. Different data types require different optimization strategies:

Application logs: Implement lifecycle policies that transition logs to Standard-IA after 30 days, Glacier Flexible Retrieval after 90 days, and Glacier Deep Archive after 365 days. Consider log retention policies that automatically delete logs after compliance requirements are met.

Backup data: Use S3 Intelligent-Tiering for backup data with unpredictable access patterns. Enable Deep Archive Access tier for long-term retention. Consider backup compression and deduplication to reduce storage volume.

Data lake architectures: Partition data by date and access frequency. Use S3 Standard for current month data, Standard-IA for recent historical data, and Glacier tiers for long-term analytics data. Implement query-based lifecycle management that considers actual data access patterns.

Object Compression & S3 Select; Glacier Tiers; Replication Gotchas

Advanced S3 optimization techniques can provide additional cost savings but require careful implementation to avoid performance or reliability issues:

Compression strategies: Enable server-side compression for text-based content (logs, JSON, CSV) to reduce storage volume and data transfer costs. Consider client-side compression for large binary files before upload.

S3 Select optimization: Use S3 Select to retrieve specific data subsets rather than downloading entire objects, reducing data transfer costs and improving query performance for analytics workloads.

Glacier considerations: Understand minimum storage duration charges (90 days for Glacier Flexible Retrieval, 180 days for Deep Archive) and retrieval costs before implementing Glacier lifecycle policies. Frequent access to Glacier-stored data can exceed Standard storage costs.

Replication cost management: Cross-region replication doubles storage costs and adds data transfer charges. Implement selective replication rules based on business requirements rather than replicating entire buckets automatically.

Databases & Analytics

Database costs often grow faster than compute costs as applications scale, making database optimization crucial for long-term cost management. However, database changes carry higher risk and require careful performance validation.

RDS Cost Optimization (Instance Families, Storage Autoscaling, Read Replicas vs Caching)

RDS optimization requires balancing cost, performance, and availability requirements across multiple dimensions:

Instance family optimization: Modern instance families (db.r6i, db.m6i) provide better price-performance than previous generations. Memory-optimized instances benefit workloads with large datasets or complex queries, while general-purpose instances work well for balanced workloads with moderate memory requirements.

Storage autoscaling configuration: Enable storage autoscaling to prevent over-provisioning, but set maximum storage limits to prevent runaway costs from poorly-optimized queries or data retention issues. Monitor storage growth patterns to identify optimization opportunities.

Read replica vs caching strategy: Read replicas provide eventual consistency and full SQL compatibility but double database costs. Consider implementing application-level caching (Redis, Memcached) for frequently-accessed data before adding read replicas. Use read replicas for analytical workloads that require complex queries rather than simple key-value lookups.

Aurora Serverless v2 Right-Sizing; Pause/Resume Patterns

Aurora Serverless v2 provides automatic scaling but requires careful capacity configuration to balance cost and performance:

Minimum and maximum capacity: Set minimum capacity based on baseline load rather than zero to avoid cold start delays. Configure maximum capacity conservatively to prevent cost spikes from poorly-optimized queries or traffic anomalies.

Scaling patterns: Aurora Serverless v2 scales quickly but not instantaneously. Applications with predictable traffic spikes benefit from scheduled scaling or pre-warming strategies that increase capacity before peak periods.

Pause/resume optimization: Aurora Serverless v1 could pause during inactivity, but v2 always maintains minimum capacity. Consider Aurora Serverless v1 for development and testing environments with intermittent usage patterns.

DynamoDB On-Demand vs Provisioned; Autoscaling; DAX for Hot Keys

DynamoDB pricing models require understanding access patterns and implementing appropriate optimization strategies:

On-demand vs provisioned decision: On-demand pricing works well for unpredictable workloads with usage spikes, but costs 6-7x more than provisioned capacity for steady-state workloads. Switch to provisioned capacity once traffic patterns become predictable.

Auto Scaling configuration: DynamoDB auto scaling can help optimize provisioned capacity, but responds slowly to traffic changes (5-15 minutes). Configure scaling policies with appropriate target utilization (70-80%) and cooldown periods to prevent oscillation.

DAX caching strategy: DynamoDB Accelerator provides microsecond latency for frequently-accessed items but adds $0.50+ per hour per cache node. Implement DAX for read-heavy workloads with hot keys that represent more than 10% of total read capacity units.

Redshift (Concurrency Scaling, RA3/Spectrum) & Warehouse Sleep Schedules

Data warehouse costs can be controlled through right-sizing, scheduling, and architectural improvements:

Concurrency scaling management: Concurrency scaling provides automatic scaling for concurrent queries but charges On-Demand rates. Monitor concurrency scaling usage and consider upgrading cluster size if consistent scaling indicates under-provisioned baseline capacity.

RA3 vs DC2 evaluation: RA3 instances separate compute and storage, allowing independent scaling and potentially lower costs for storage-heavy workloads. Evaluate total cost including Redshift Managed Storage charges against DC2 instance pricing.

Automated scheduling: Implement automated pause/resume schedules for development and staging clusters. Production clusters can benefit from scaling down during low-usage periods if ETL processes can be scheduled appropriately.

Networking & Data Transfer Costs (Often Overlooked)

Network and data transfer costs are frequently overlooked until they represent 15-25% of total AWS spending. These costs compound as applications grow and become distributed across multiple services and regions.

Egress Reduction via CloudFront; Origin Shield; Cache Policies

Data egress from AWS to the internet costs $0.09-0.15 per GB, making CloudFront optimization crucial for content-heavy applications:

CloudFront distribution strategy: Place CloudFront distributions in front of all public-facing content, including API responses, static assets, and downloadable files. Even dynamic content benefits from edge caching of common responses and reduced latency.

Origin Shield implementation: Enable Origin Shield in the AWS region closest to your origin to reduce origin requests and associated data transfer costs. Origin Shield is particularly effective for origins serving multiple CloudFront distributions.

Cache policy optimization: Implement cache policies that maximize hit rates while maintaining content freshness. Use cache keys based on relevant request parameters and implement proper cache invalidation strategies for dynamic content.

NAT Gateway Spend, VPC Endpoints, PrivateLink, Architecture Fixes

NAT Gateways charge for both hourly usage ($0.045/hour) and data processing ($0.045/GB), making them expensive for high-traffic applications:

VPC endpoint implementation: Replace NAT Gateway traffic to AWS services with VPC endpoints. S3, DynamoDB, Lambda, and other AWS services support VPC endpoints that eliminate NAT Gateway costs for service communication.

PrivateLink optimization: Use PrivateLink for communication between VPCs and with third-party services to avoid internet routing and associated NAT Gateway costs. PrivateLink also improves security and reduces latency.

Architecture improvements: Review application architectures that require private subnets to access internet resources. Consider moving appropriate workloads to public subnets with security groups for access control, eliminating NAT Gateway requirements entirely.

Cross-AZ Chatter & Microservice Chattiness, Patterns to Reduce

Cross-Availability Zone data transfer costs $0.01-0.02 per GB in each direction, creating significant costs for chatty microservice architectures:

Service collocation: Deploy related microservices in the same availability zone when possible, using load balancer health checks to maintain availability during AZ failures. Accept slightly reduced availability in exchange for significantly lower data transfer costs.

Batch processing patterns: Replace frequent small API calls between services with batched requests that transfer more data in fewer operations. Implement async messaging patterns that reduce real-time communication requirements.

Data locality optimization: Implement read replicas and caching strategies that serve data from the same AZ as consuming applications. Use eventual consistency models that reduce the need for cross-AZ synchronous communication.

Performance Guardrails, Proving You Didn't Break Anything

Cost optimization without performance validation is a recipe for customer impact and engineering reputation damage. Implement comprehensive performance monitoring and testing to validate that optimizations don't compromise user experience.

SLO/SLI Tracking, Load Tests, Canaries; Rollback Strategy

Establish quantitative performance benchmarks before beginning optimization efforts and maintain these benchmarks throughout the optimization process:

SLO/SLI implementation: Define Service Level Indicators for critical user journeys (API response time, page load time, transaction success rate) and establish Service Level Objectives that reflect user expectations. Use these SLOs as go/no-go criteria for optimization rollouts.

Continuous load testing: Implement automated load tests that run before and after optimization changes, comparing performance characteristics under various load conditions. Use tools like k6, JMeter, or AWS Load Testing solution to validate performance at scale.

Canary deployment strategy: Roll out optimizations gradually using canary deployments that expose a small percentage of traffic to optimized infrastructure while monitoring key metrics. Expand rollouts only after validating that performance SLOs are maintained.

Automated rollback systems: Implement automated rollback mechanisms triggered by SLO violations or performance degradation. Define clear rollback criteria and ensure rollback procedures can be executed quickly during incidents.

Performance Budgets in CI/CD; Cost/Performance Dashboards per Service

Integrate performance and cost monitoring into development workflows to prevent regressions and maintain optimization gains:

CI/CD performance gates: Implement performance tests in CI/CD pipelines that fail deployments if performance degrades beyond acceptable thresholds. Include both synthetic performance tests and cost projections based on resource utilization.

Service-level dashboards: Create dashboards that correlate cost and performance metrics for each service, showing cost per request, response time percentiles, error rates, and resource utilization. Make these dashboards visible to development teams to maintain cost awareness.

Performance budgets: Establish performance budgets that set maximum acceptable response times, resource utilization, and cost per request for each service. Treat budget violations as technical debt that requires immediate attention.

FinOps & Governance

Sustainable cost optimization requires organizational processes, team accountability, and ongoing governance that prevents cost creep from returning after optimization efforts.

Tagging Taxonomy (Env/Team/Service), Showback/Chargeback

Effective cost allocation and accountability start with comprehensive and consistent resource tagging:

Standardized tagging schema: Implement mandatory tags for Environment (prod/stage/dev), Team (owning engineering team), Service (microservice or application), and CostCenter (business unit). Use AWS Config Rules or third-party tools to enforce tagging compliance.

Showback implementation: Generate monthly cost reports showing each team's AWS spending broken down by service and environment. Make cost visibility a regular part of team retrospectives and planning sessions to maintain cost awareness.

Chargeback considerations: Consider implementing chargeback for teams with dedicated AWS accounts or clear resource boundaries. Avoid chargeback for shared services or infrastructure that benefits multiple teams unless you can establish fair allocation methodologies.

Monthly Savings Ceremonies; Engineering Ownership of Costs

Create organizational rhythms that maintain focus on cost optimization and celebrate optimization achievements:

Savings ceremonies: Hold monthly meetings where teams present optimization achievements, share successful strategies, and identify upcoming optimization opportunities. Recognize teams that achieve significant savings while maintaining performance SLOs.

Engineering cost ownership: Assign cost ownership to engineering teams rather than treating it as purely an operations concern. Include cost metrics in team dashboards and make cost efficiency a factor in performance reviews and promotion criteria.

Optimization backlog: Maintain a prioritized backlog of optimization opportunities identified through cost analysis, performance monitoring, and architecture reviews. Allocate dedicated engineering time to optimization work rather than treating it as spare-time activity.

Procurement Alignment: Commit Coverage Policy (e.g., 60–80%)

Align cost optimization efforts with organizational procurement processes and commitment strategies:

Coverage policy development: Establish organizational policies for commitment coverage (Savings Plans, Reserved Instances) that balance savings with flexibility. Typical policies target 60-80% coverage of baseline spend, leaving room for growth and experimentation.

Procurement coordination: Coordinate with procurement teams on commitment purchases, annual budget planning, and vendor negotiations. Share cost optimization achievements to support budget discussions and demonstrate engineering team fiscal responsibility.

Risk management: Develop policies for managing commitment risks, including procedures for modifying or selling unused reservations and strategies for handling commitment overages during rapid growth periods.

30/60/90-Day AWS Cost Optimization Plan

Systematic cost optimization requires a phased approach that builds momentum through quick wins while preparing for structural improvements that deliver long-term savings.

0–30 Days: Tagging, CUR, Top 10 Offenders, Quick Wins

Week 1-2: Foundation and Measurement

- Implement comprehensive tagging across all AWS resources

- Configure Cost and Usage Report (CUR) export to S3

- Set up AWS Cost Explorer with appropriate filters and groupings

- Identify top 10 cost drivers by service and resource type

Week 3-4: Quick Wins Implementation

- Delete unused EBS volumes, snapshots, and load balancers

- Upgrade EBS gp2 volumes to gp3 for immediate 20% storage savings

- Implement basic S3 lifecycle policies for logs and backups

- Remove idle EC2 instances and unattached Elastic IP addresses

- Review and optimize data transfer patterns causing high egress costs

Expected outcomes: 10-20% immediate cost reduction, established measurement framework, team alignment on optimization priorities.

31–60 Days: Commit Coverage, Rightsizing, S3 Lifecycle, NAT/VPC Endpoints

Week 5-6: Commitment Strategy

- Analyze historical usage patterns to determine optimal Savings Plans coverage

- Purchase Savings Plans targeting 60-70% of baseline compute spend

- Begin systematic EC2 rightsizing based on Compute Optimizer recommendations

- Implement performance monitoring for rightsized instances

Week 7-8: Architecture Optimization

- Deploy VPC endpoints for high-traffic AWS services (S3, DynamoDB, Lambda)

- Implement comprehensive S3 lifecycle policies across all buckets

- Optimize NAT Gateway usage through VPC endpoint adoption

- Begin EKS cost optimization for containerized workloads

Expected outcomes: Additional 15-25% cost reduction through commitments and rightsizing, improved architecture efficiency.

61–90 Days: Graviton/Spot Pilots, EKS Scaling, Database Right-Sizing

Week 9-10: Advanced Compute Optimization

- Launch Graviton migration pilot for suitable workloads

- Implement Spot Instance adoption for fault-tolerant applications

- Deploy Karpenter for EKS clusters to improve scaling efficiency

- Optimize Lambda memory allocation and concurrency settings

Week 11-12: Database and Storage Optimization

- Right-size RDS instances based on performance analysis

- Implement Aurora Serverless v2 for variable workloads

- Optimize DynamoDB capacity modes and implement DAX caching

- Review and optimize data lake storage tiering strategies

Expected outcomes: Additional 20-30% cost reduction through advanced optimization techniques, established processes for ongoing optimization.

Monthly Cost Review Process:

- Generate service-level cost reports with month-over-month comparison

- Identify top 10 cost increases and investigate root causes

- Review Compute Optimizer recommendations for new rightsizing opportunities

- Analyze unused resources (idle load balancers, unattached volumes, stopped instances)

- Validate commitment utilization and identify coverage gaps

- Review data transfer costs for optimization opportunities

- Update cost forecasts and budget alerts based on usage trends

Essential Cost Dashboards to Build:

- Executive Dashboard: Total monthly spend, forecast, savings achieved, cost per customer/request

- Service-Level Dashboard: Cost breakdown by microservice, team ownership, environment split

- Resource Utilization Dashboard: CPU/memory utilization vs. cost, rightsizing opportunities

- Commitment Dashboard: Savings Plans/RI utilization, coverage percentage, upcoming expirations

- Anomaly Dashboard: Cost spikes, unusual usage patterns, budget threshold violations

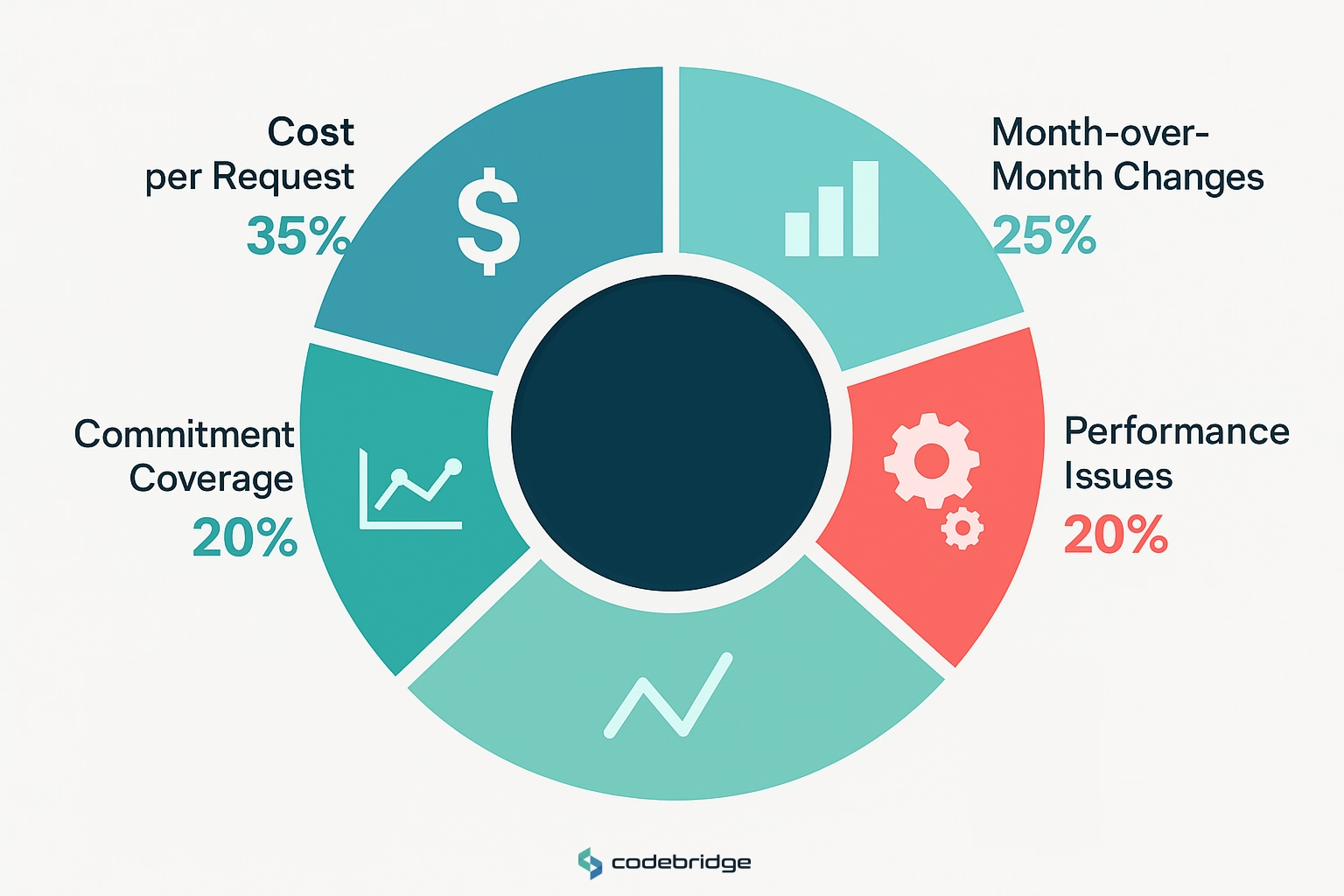

Key Metrics to Track:

- Cost per request/transaction/user (unit economics)

- Month-over-month cost changes by service

- Commitment coverage and utilization rates

- Resource utilization percentiles (CPU, memory, storage)

- Performance SLO compliance during cost optimizations

Conclusion

AWS cost optimization isn't a one-time project; it's an ongoing practice that balances fiscal responsibility with operational excellence. The strategies outlined in this guide provide a framework for systematic cost reduction while maintaining the performance and reliability your customers expect.

The key to successful optimization lies in measurement-driven decisions, performance guardrails, and organizational processes that prevent cost creep from returning. Start with quick wins to build momentum and establish measurement systems, then progress to structural improvements that deliver compounding savings over time.

Remember the core principles:

- Architecture drives spending patterns more than service selection

- Performance SLOs are non-negotiable guardrails for optimization efforts

- Unit economics (cost per request/user) reveal opportunities that aggregate metrics miss

- Never compromise observability, backups, or security to reduce costs

- Organizational ownership and processes sustain optimization gains long-term

The 30/60/90-day plan provides a practical roadmap for systematic optimization, but every organization's AWS footprint presents unique opportunities and constraints. Consider the complexity of your infrastructure, team capacity, and business requirements when prioritizing optimization efforts.

Ready to transform your AWS cost structure while maintaining world-class performance? Book a free consultation to discuss your specific AWS optimization opportunities and develop a customized plan that aligns with your business objectives and technical constraints. Let's prove that cost efficiency and performance excellence aren't mutually exclusive, they're complementary aspects of well-architected systems.

FAQ

Is there an AWS cost optimization checklist I can follow without hurting performance?

Yes, use a performance-first flow: define SLOs & error budgets → baseline with CUR/Cost Explorer + mandatory tagging → set budgets & anomaly detection tied to service owners → apply quick wins (gp2→gp3, S3 lifecycle, delete idle LBs/volumes) → structured changes (rightsizing via Compute Optimizer, caching, Graviton/Spot pilots, microservice and network minimization) → enforce guardrails (load tests, canaries, rollback) → add FinOps governance (showback/chargeback, 60–80% commitment coverage) and monthly “savings ceremonies.”

How do I run an AWS cost per request calculation for my API/SaaS?

Track unit economics (cost per request/user/transaction). Example: if infra spend is $20k for 10M requests, cost per request is $0.002, then target reductions via DB query tuning, caching, and rightsizing to lower CPU/RAM/I/O, and validate improvements against latency/availability SLOs before rollout.

What’s the real Graviton migration ROI and how do I validate it?

Graviton typically delivers ~20–40% better price-performance, but validate: audit ARM64 support, build multi-arch images, performance-test hot paths, check tooling/observability compatibility, then migrate gradually (non-critical → canary → production) with clear rollback.

When does EKS cost optimization Karpenter beat Cluster Autoscaler?

Karpenter improves bin-packing and scales nodes in seconds with optimal instance choices; pair it with right-sized requests/limits, multiple node groups (mix Spot/On-Demand), and (optionally) VPA. This lifts utilization while keeping p95 latency within SLO budgets.

Savings Plans vs Reserved Instances 2025: which should I pick?

Use Savings Plans for 60–80% of steady baseline (broad flexibility across services/regions) and RIs for highly predictable, pinned workloads. Leave 20–40% On-Demand for growth/experiments; layer Spot for fault-tolerant jobs. Validate coverage monthly to avoid over-commit.

%20(1).jpg)

%20(1)%20(1)%20(1).jpg)