The integration of generative AI models, encompassing large language models, deep learning frameworks, and sophisticated neural architectures, has created a double-edged transformation in cybersecurity. These technologies enable unprecedented automation in content creation, decision-making, and pattern recognition, fundamentally altering both offensive and defensive capabilities across the threat landscape.

Organizations worldwide now confront a security environment where traditional perimeter-based defenses prove increasingly inadequate against AI-powered attacks that can adapt, evolve, and scale at machine speed. Simultaneously, these same AI technologies offer defenders revolutionary capabilities in threat detection, incident response, and risk mitigation that were previously unimaginable.


The sophistication of modern AI-driven threats demands a comprehensive understanding of both technical vulnerabilities and strategic implications. From deepfake-enabled social engineering campaigns to adversarial machine learning attacks that can poison enterprise AI systems, threat actors are leveraging generative AI to create attack vectors that bypass conventional security controls and exploit human psychological vulnerabilities with unprecedented precision.

How Are AI Attacks Reshaping Cybersecurity?

The global average cost of a data breach reached $4.88 million in 2024, a 10% increase over the previous year and the highest figure in the history of IBM's survey (IBM). This growth reflects not merely an increase in attack volume but a fundamental shift in attack sophistication and effectiveness.

Modern phishing campaigns exemplify this transformation. In research reported by Harvard Business Review, 60% of participants fell victim to AI-generated phishing emails, a success rate comparable to that of phishing emails crafted by human experts (Harvard Business Review). These emails use natural language processing to create personalized, contextually relevant lures that can bypass traditional email security filters.

Financial Impact and Geographic Distribution

The financial implications of AI-enabled cybercrime are staggering. In Southeast Asia alone, AI-powered criminal operations extracted an estimated $18 billion to $37 billion in 2023 (Fortune Asia and UNODC Report), reflecting a mature criminal ecosystem that uses AI for everything from synthetic identity creation to automated money laundering.

These criminal enterprises demonstrate sophisticated operational structures, employing AI for victim profiling, campaign optimization, and even automated negotiation in ransomware attacks. The scale and sophistication of these operations indicate that AI-enabled cybercrime has evolved beyond opportunistic attacks to become a systematic, industrialized threat landscape.

Adversarial AI and Model Manipulation

Perhaps the most concerning development is the emergence of adversarial AI attacks specifically targeting enterprise machine learning systems. 47% of organizations cite adversarial AI as a top security concern, reflecting growing awareness of threats like data poisoning, model inversion attacks, and adversarial example generation.

Data poisoning attacks, which manipulate training datasets to corrupt AI model behavior, can reduce classification accuracy by up to 27% in image recognition tasks and 22% in fraud detection models (arXiv Research). These attacks represent a fundamental threat to the integrity of enterprise AI systems, potentially compromising decision-making processes across critical business functions.
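The mechanism is easy to see in miniature. The sketch below flips the labels of a few training samples for a toy one-dimensional nearest-centroid classifier; the data, the classifier, and the choice of which labels to flip are illustrative assumptions and do not reproduce the cited research.

```python
from statistics import mean


def train_centroids(samples):
    """Toy 'model': the mean feature value of each class label."""
    by_label = {}
    for x, y in samples:
        by_label.setdefault(y, []).append(x)
    return {label: mean(xs) for label, xs in by_label.items()}


def predict(centroids, x):
    """Assign x to the label whose centroid is nearest."""
    return min(centroids, key=lambda label: abs(x - centroids[label]))


def accuracy(centroids, samples):
    return sum(predict(centroids, x) == y for x, y in samples) / len(samples)


# Illustrative 1-D feature: benign (0) samples sit low, fraudulent (1) samples sit high.
data = [(x, 0) for x in range(0, 10)] + [(x, 1) for x in range(10, 20)]
train, test = list(data), list(data)

# Poisoning: the attacker relabels the six fraud samples closest to the benign range.
poisoned = [(x, 0) if y == 1 and x < 16 else (x, y) for x, y in train]

clean_acc = accuracy(train_centroids(train), test)
poisoned_acc = accuracy(train_centroids(poisoned), test)
print(f"clean={clean_acc:.2f} poisoned={poisoned_acc:.2f}")  # → clean=1.00 poisoned=0.85
```

Flipping six of twenty training labels shifts the decision boundary far enough that three fraudulent test samples are classified as benign, the kind of silent degradation that is hard to notice without held-out validation data.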

How Are AI Security Companies Responding to New Threats?

The AI cybersecurity market is experiencing unprecedented growth, projected to expand from $22.4 billion in 2023 to $60.6 billion by 2028 at a compound annual growth rate of 21.9% (MarketsandMarkets).

Enterprise adoption of AI-powered security solutions has accelerated dramatically. 82% of organizations in the U.S. have already integrated AI into their cybersecurity posture (Arctic Wolf), while 95% of cybersecurity professionals believe that AI-powered solutions will strengthen their defenses (Darktrace).


Leading Vendor Solutions and Capabilities

Major cybersecurity vendors have developed sophisticated AI-powered platforms that address various aspects of the threat landscape:

Darktrace's Enterprise Immune System employs unsupervised machine learning to model normal behavior baselines and identify subtle deviations that may indicate compromise. The platform's ability to operate without predefined rules makes it particularly effective against novel AI-generated attacks that would bypass signature-based detection systems.

CrowdStrike's Falcon Platform integrates endpoint detection with cloud-native threat intelligence, utilizing machine learning algorithms to identify and block unknown malware variants in real time. The platform's predictive analytics capabilities enable proactive threat hunting that anticipates attack patterns before they fully manifest.

Splunk's AI-powered SIEM solutions leverage reinforcement learning to optimize alert triage processes, reducing mean time to remediation by up to 30% while dramatically decreasing false positive rates. This capability is crucial for managing the overwhelming volume of security alerts generated by modern enterprise environments.

How is machine learning transforming the technical implementation of cybersecurity?

The evolution toward multi-agent AI architectures represents a fundamental shift in cybersecurity system design. Gartner predicts that by 2028, multi-agent AI systems will power 70% of threat detection workflows, up from just 5% today. These systems rely on collaborative intelligence: specialized AI agents focus on specific security domains while sharing intelligence and coordinating responses.

Multi-agent architectures offer several advantages over traditional single-agent systems. They reduce alert fatigue through intelligent correlation and prioritization, enable faster incident response through automated workflows, and provide specialized expertise for different threat types. However, implementing these systems securely requires careful attention to inter-agent communication protocols, access controls, and verification mechanisms.
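A minimal sketch of two of those requirements, under stated assumptions: each specialized agent signs its findings with a per-agent HMAC key so a coordinator can reject forged or tampered messages, and the coordinator correlates verified findings by host so that hosts flagged by several agents rise to the top. The agent names, keys, and ranking rule are hypothetical, not taken from any vendor platform.

```python
import hashlib
import hmac
import json
from collections import defaultdict

# Hypothetical per-agent keys; a real deployment would load these from a secrets manager.
AGENT_KEYS = {"network-agent": b"net-demo-key", "endpoint-agent": b"edr-demo-key"}


def _digest(key, finding):
    body = json.dumps(finding, sort_keys=True).encode()
    return hmac.new(key, body, hashlib.sha256).hexdigest()


def sign(agent, finding):
    """Wrap an agent's finding with an HMAC-SHA256 tag over its canonical JSON."""
    return {"agent": agent, "finding": finding, "sig": _digest(AGENT_KEYS[agent], finding)}


def verify(msg):
    key = AGENT_KEYS.get(msg["agent"])
    return key is not None and hmac.compare_digest(_digest(key, msg["finding"]), msg["sig"])


def correlate(messages):
    """Drop unverifiable findings, group the rest by host, and rank hosts
    by number of distinct agents first, then by summed severity."""
    agents = defaultdict(set)
    severity = defaultdict(float)
    for msg in messages:
        if not verify(msg):
            continue
        host = msg["finding"]["host"]
        agents[host].add(msg["agent"])
        severity[host] += msg["finding"]["severity"]
    rows = [(host, len(agents[host]), severity[host]) for host in agents]
    return sorted(rows, key=lambda row: (row[1], row[2]), reverse=True)


msgs = [
    sign("network-agent", {"host": "db-01", "severity": 0.3, "signal": "beaconing"}),
    sign("endpoint-agent", {"host": "db-01", "severity": 0.4, "signal": "new service"}),
    sign("network-agent", {"host": "web-02", "severity": 0.9, "signal": "port scan"}),
]
# A tampered copy: severity altered, original signature reused.
forged = dict(msgs[2], finding={"host": "web-02", "severity": 0.1, "signal": "port scan"})
print(correlate(msgs + [forged]))
```

Ranking by the number of independent agents before severity is one simple way to reduce alert fatigue: corroborated moderate signals on `db-01` outrank a single loud signal on `web-02`.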

Real-Time Analytics and Behavioral Detection

Modern AI-powered security platforms process millions of security events per second, applying machine learning algorithms to identify patterns that would be impossible for human analysts to detect. These systems employ behavioral analytics that establish baseline normal activity for users, devices, and applications, then flag deviations that may indicate compromise or malicious activity.

The effectiveness of these systems depends on sophisticated feature engineering and model selection. Ensemble methods, which combine multiple machine learning models, provide enhanced resilience against adversarial attacks while improving overall detection accuracy. These approaches are particularly effective against zero-day threats, where traditional signature-based detection fails entirely.
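A per-entity behavioral baseline of the kind described above can be sketched in a few lines. This minimal version assumes a single numeric feature (hypothetically, megabytes downloaded per day) and flags values more than three standard deviations from each user's own history; production systems model many features and typically combine several detectors.

```python
from statistics import mean, pstdev


def build_baseline(history):
    """history: {user: [daily MB downloaded, ...]} -> {user: (mean, std dev)}."""
    return {user: (mean(values), pstdev(values)) for user, values in history.items()}


def is_anomalous(baseline, user, value, threshold=3.0):
    """Flag a value more than `threshold` standard deviations from the user's own mean."""
    if user not in baseline:
        return True  # no history yet: treat as worth a look (a policy assumption)
    mu, sigma = baseline[user]
    if sigma == 0:
        return value != mu
    return abs(value - mu) / sigma > threshold


history = {"alice": [100, 110, 90, 105, 95], "bob": [10, 12, 8, 11, 9]}
baseline = build_baseline(history)
print(is_anomalous(baseline, "alice", 120))  # → False
print(is_anomalous(baseline, "bob", 120))    # → True
```

The same 120 MB day is routine for one user and highly unusual for another, which is why baselines are kept per user, device, or application rather than globally.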

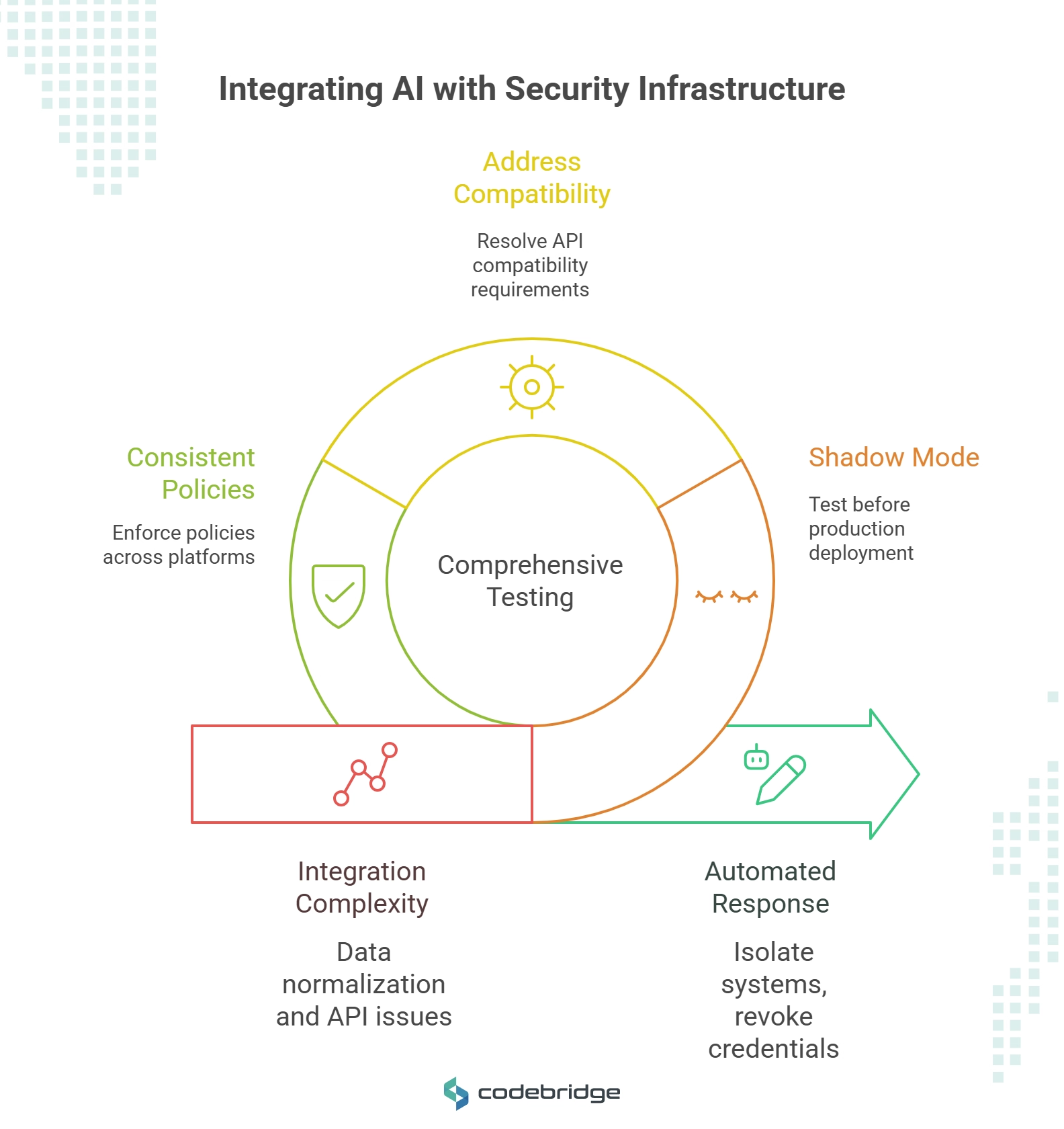

Integration with Existing Security Infrastructure

Successful deployment of AI-powered security tools requires seamless integration with existing Security Information and Event Management (SIEM) systems, Security Orchestration, Automation, and Response (SOAR) platforms, and Identity and Access Management (IAM) solutions. This integration enables automated response workflows that can isolate compromised systems, revoke access credentials, and initiate incident response procedures without human intervention.

However, integration complexity presents significant challenges. Organizations must address data normalization issues, API compatibility requirements, and the need for consistent policy enforcement across multiple security platforms. Best practices emphasize the importance of comprehensive testing in shadow mode before deploying AI systems in production environments.

What should you know about artificial intelligence security tools?

AI security tools generally fall into three primary categories, each addressing different aspects of the threat landscape:

Detection and Analysis Tools utilize supervised and unsupervised machine learning to identify threats across multiple data sources. These tools analyze network traffic, endpoint behavior, user activity patterns, and application logs to identify indicators of compromise. Advanced systems employ deep learning architectures that can identify subtle patterns in data that traditional rule-based systems would miss entirely.

Automated Response and Orchestration Platforms leverage AI to coordinate security responses across multiple systems and tools. These platforms can automatically contain threats, gather forensic evidence, and initiate remediation procedures based on predefined playbooks that are continuously refined through machine learning. The most sophisticated platforms employ decision engines that can adapt response strategies based on the specific characteristics of each incident.

Proactive Defense and Testing Solutions generate synthetic attack scenarios to test and harden defensive systems. These tools employ adversarial AI techniques to discover vulnerabilities in security controls before attackers can exploit them. Red teaming platforms use AI to simulate sophisticated multi-stage attacks that test the entire security ecosystem rather than individual components.

Implementation Methodology and Best Practices

Successful AI security implementation follows a structured approach that minimizes risk while maximizing effectiveness:

Phase 1: Comprehensive Assessment involves detailed analysis of existing security capabilities, identification of data quality issues, and evaluation of infrastructure requirements. Organizations must assess their readiness for AI implementation, including data governance capabilities, technical expertise, and integration requirements.

Phase 2: Controlled Pilot Deployment focuses on implementing AI systems in shadow mode, where they operate alongside existing security tools without taking automated actions. This approach allows organizations to validate model performance against real threats while building confidence in AI-generated recommendations.

Phase 3: Graduated Production Deployment involves carefully expanding AI system authority and scope. Initial deployments typically focus on low-risk use cases with high human oversight, gradually expanding to more critical applications as confidence and expertise develop.

Phase 4: Continuous Optimization and Maintenance emphasizes the importance of ongoing model retraining, performance monitoring, and threat intelligence integration. AI systems require continuous refinement to maintain effectiveness against evolving threats.
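Phases 2 and 3 amount to a gate between what a model concludes and what the platform is allowed to do about it. The sketch below assumes three hypothetical modes (shadow, recommend, and automatic containment for low-risk cases only) and logs every verdict so that shadow-mode agreement with analysts can be measured before authority is expanded.

```python
from dataclasses import dataclass, field

# Hypothetical deployment modes mirroring Phases 2 and 3.
SHADOW, RECOMMEND, AUTO_LOW_RISK = "shadow", "recommend", "auto_low_risk"


@dataclass
class ResponseGate:
    mode: str = SHADOW
    log: list = field(default_factory=list)

    def handle(self, alert):
        """alert: {'id': ..., 'verdict': 'malicious' | 'benign', 'risk': 'low' | 'high'}."""
        if self.mode == SHADOW or alert["verdict"] != "malicious":
            action = "log_only"            # model observes, never acts
        elif self.mode == AUTO_LOW_RISK and alert["risk"] == "low":
            action = "auto_contain"        # authority granted only for low-risk cases
        else:
            action = "queue_for_analyst"   # a human decides
        self.log.append((alert["id"], alert["verdict"], action))
        return action


def shadow_agreement(log, analyst_labels):
    """Share of logged model verdicts that match analysts' final labels."""
    if not log:
        return 0.0
    return sum(analyst_labels.get(alert_id) == verdict for alert_id, verdict, _ in log) / len(log)
```

Promotion from one mode to the next would be a deliberate configuration change, made only after shadow-mode agreement and false-positive rates meet thresholds the organization sets in advance.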

Evaluation Frameworks and Success Metrics

Evaluating AI security tool effectiveness requires comprehensive metrics that go beyond traditional security measures. Key performance indicators include detection accuracy and precision, false positive and false negative rates, mean time to detection and response, operational impact on security teams, and integration effectiveness with existing tools.

Organizations should also measure qualitative factors such as analyst satisfaction, decision confidence, and the ability to handle novel threat types. Regular red teaming exercises can validate AI system effectiveness against sophisticated attack scenarios that haven't been encountered in production environments.
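The quantitative indicators above reduce to a few ratios over a labeled evaluation set plus time deltas from incident records. A minimal sketch, assuming the confusion-matrix counts and incident timestamps are already available:

```python
from datetime import datetime, timedelta
from statistics import mean


def detection_metrics(tp, fp, tn, fn):
    """Core detection KPIs from confusion-matrix counts on a labeled evaluation set."""
    def ratio(num, den):
        return num / den if den else 0.0
    return {
        "precision": ratio(tp, tp + fp),            # flagged items that were real threats
        "recall": ratio(tp, tp + fn),               # real threats that were flagged
        "false_positive_rate": ratio(fp, fp + tn),  # benign items wrongly flagged
        "false_negative_rate": ratio(fn, fn + tp),  # real threats missed
    }


def mean_hours(intervals):
    """Mean gap in hours over (start, end) pairs, e.g. compromise -> detection for MTTD."""
    return mean((end - start).total_seconds() / 3600 for start, end in intervals)


m = detection_metrics(tp=90, fp=10, tn=880, fn=20)
print({k: round(v, 3) for k, v in m.items()})
# → {'precision': 0.9, 'recall': 0.818, 'false_positive_rate': 0.011, 'false_negative_rate': 0.182}
t0 = datetime(2024, 1, 1)
print(mean_hours([(t0, t0 + timedelta(hours=6)), (t0, t0 + timedelta(hours=2))]))  # → 4.0
```

Reporting precision and recall together matters: a detector can drive its false-positive rate toward zero simply by flagging almost nothing, which shows up immediately as poor recall.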

Which real-world case studies illustrate AI in cybersecurity?

Financial Sector Deployments

A major European bank implemented graph analytics powered by machine learning to map transaction flows and detect money laundering operations. The system analyzes millions of transactions in real time, identifying suspicious patterns that would be impossible for human analysts to detect. Implementation reduced investigation time by 60% while improving detection accuracy for complex financial crimes.

The bank's approach involved creating dynamic risk scores for customers based on transaction patterns, relationship networks, and external intelligence sources. Machine learning models continuously adapt to new money laundering techniques, maintaining effectiveness against evolving financial crimes.
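A heavily simplified sketch of that kind of scoring, assuming each customer already has a base score from their own transaction patterns and that some counterparties are flagged by external intelligence: the score rises in proportion to how much of a customer's money flow touches flagged accounts. The one-hop rule and the `hop_weight` parameter are hypothetical; production graph analytics typically look several hops out and learn their weights.

```python
from collections import defaultdict


def risk_scores(base_risk, transfers, flagged, hop_weight=0.5):
    """
    base_risk:  {customer: 0..1 score from the customer's own transaction patterns}
    transfers:  [(sender, receiver, amount), ...] edges of the transaction graph
    flagged:    customers linked to confirmed laundering by external intelligence
    hop_weight: hypothetical weight for one-hop exposure to flagged counterparties
    """
    total = defaultdict(float)
    tainted = defaultdict(float)
    for sender, receiver, amount in transfers:
        # Count each transfer for both endpoints; note when the other side is flagged.
        for party, counterparty in ((sender, receiver), (receiver, sender)):
            total[party] += amount
            if counterparty in flagged:
                tainted[party] += amount
    scores = {}
    for customer, base in base_risk.items():
        if customer in flagged:
            scores[customer] = 1.0
            continue
        exposure = tainted[customer] / total[customer] if total[customer] else 0.0
        scores[customer] = min(1.0, base + hop_weight * exposure)
    return scores


transfers = [("A", "X", 900), ("A", "B", 100), ("B", "C", 500)]
print(risk_scores({"A": 0.1, "B": 0.1, "C": 0.2, "X": 0.3}, transfers, flagged={"X"}))
```

Customer A sends 90% of its volume to a flagged account and its score rises accordingly, while B and C keep their base scores because their flows never touch X directly.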

Healthcare AI Security Implementations

A large healthcare provider deployed AI-powered correlation engines to protect electronic health records from unauthorized access. The system monitors user behavior patterns and access requests, immediately flagging anomalous activities that may indicate insider threats or compromised credentials.

The implementation correlates EHR access logs with network telemetry, identifying compromised credentials in under two minutes and preventing unauthorized data exfiltration. This approach proved particularly effective against sophisticated attacks that attempt to blend in with normal healthcare workflows.
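One way such a correlation might work, sketched under assumptions: EHR audit records and authenticated network sessions are available as simple tuples (a hypothetical schema), and any record access without a matching session for the same user and source IP shortly beforehand is flagged.

```python
from datetime import datetime, timedelta


def correlate_ehr_access(ehr_events, net_sessions, window=timedelta(minutes=30)):
    """
    ehr_events:   [(user, time, patient_id, source_ip), ...] from EHR audit logs
    net_sessions: [(user, time, ip), ...] authenticated logins seen in network telemetry
    Flags record access with no matching authenticated session for the same user and IP
    in the preceding `window`; such gaps can point to stolen or shared credentials.
    """
    flagged = []
    for user, t, patient, ip in ehr_events:
        has_session = any(
            s_user == user and s_ip == ip and timedelta(0) <= t - s_time <= window
            for s_user, s_time, s_ip in net_sessions
        )
        if not has_session:
            flagged.append((user, t, patient, ip))
    return flagged


day = datetime(2024, 3, 1)
sessions = [("nurse1", day.replace(hour=9), "10.0.0.5")]
events = [
    ("nurse1", day.replace(hour=9, minute=10), "P1", "10.0.0.5"),     # matches the login
    ("nurse1", day.replace(hour=9, minute=12), "P2", "203.0.113.7"),  # unknown source IP
    ("nurse1", day.replace(hour=10), "P3", "10.0.0.5"),               # login is too old
]
for alert in correlate_ehr_access(events, sessions):
    print(alert)
```

The pairwise scan keeps the sketch readable; at EHR scale the sessions would be indexed by user and time so each lookup stays fast enough for minute-level detection.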

Critical Infrastructure Protection

FTSE 100 companies have deployed convolutional neural networks and audio-spectral analysis systems to identify deepfake audio attacks targeting executives. These systems analyze voice calls in real time, detecting synthetic voice generation attempts that traditional voice authentication systems cannot identify.

The deployment reduced successful CEO fraud attempts by 85%, demonstrating the effectiveness of AI-powered defenses against AI-generated attacks. The system continuously improves its detection capabilities by analyzing new deepfake generation techniques and updating its models accordingly.

Quantified Business Impact and Return on Investment

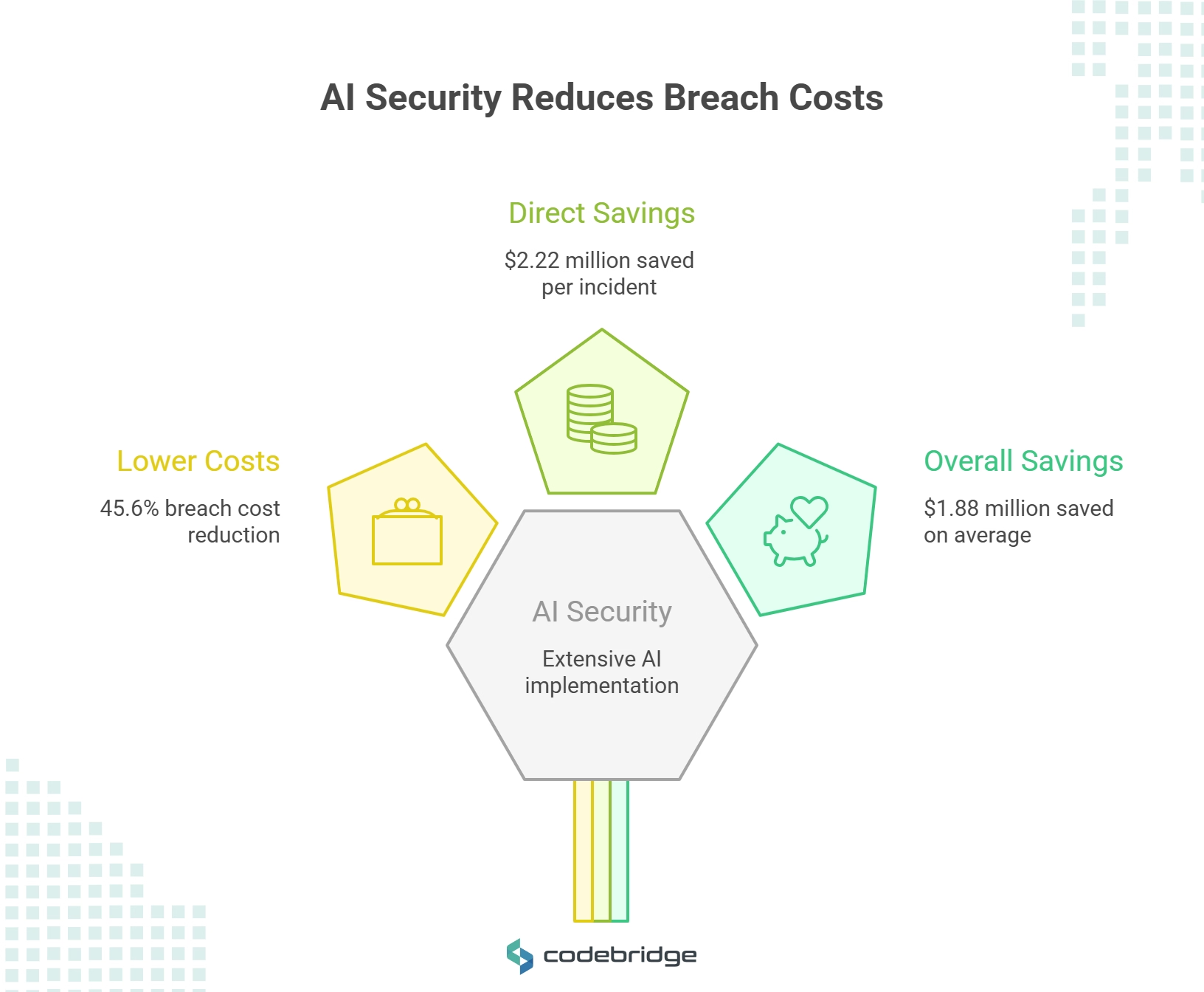

Organizations implementing extensive AI security measures report significantly lower breach costs compared to their peers.

According to IBM's comprehensive analysis, organizations leveraging AI extensively in prevention workflows experience 45.6% lower breach costs, saving an average of $2.22 million per incident through faster detection and containment (IBM Cost of a Data Breach Report 2024).

The financial benefits extend beyond direct cost savings. Organizations using extensive AI and automation across all security functions save $1.88 million compared to those not using AI, with breach costs of $3.84 million versus $5.72 million (IBM Security Analysis). These savings reflect faster incident response, reduced investigation time, and minimized business disruption.

What benefits of AI in cybersecurity drive ROI and business impact?

AI implementation delivers substantial operational benefits beyond cost reduction. Organizations report 50% reduction in manual alert triage, freeing security analysts to focus on high-value investigative work rather than routine alert processing. Advanced systems achieve up to 70% reduction in false positive alerts, dramatically improving team productivity and reducing alert fatigue.

The speed advantages are equally compelling. Organizations using extensive AI identify and contain breaches nearly 100 days faster than others, enabling rapid response that can prevent minor incidents from escalating into major breaches.

Scalability and Performance Benefits

AI-powered security systems demonstrate superior scalability compared to traditional approaches. They can analyze vast amounts of data without linear increases in staffing requirements, making them particularly valuable for large enterprises with complex security environments. These systems maintain consistent performance levels even as data volumes and threat complexity increase.

How does AI threat detection deliver advanced capabilities and what are its limitations?

Modern AI threat detection systems employ sophisticated algorithms, including deep learning-based anomaly scoring, graph neural networks for relationship analysis, and ensemble methods that combine multiple detection approaches. These systems can identify previously unknown attack patterns by analyzing subtle deviations from established baselines.

Behavioral analysis capabilities enable the detection of insider threats and compromised accounts by monitoring user activity patterns and identifying activities that deviate from established norms. These systems are particularly effective against advanced persistent threats that attempt to maintain long-term presence in target networks through legitimate-appearing activities.

Zero-day discovery represents a critical capability, as AI systems can identify novel attack techniques that haven't been previously documented or analyzed. This capability is essential for protecting against state-sponsored attacks and advanced criminal operations that develop custom tools and techniques.

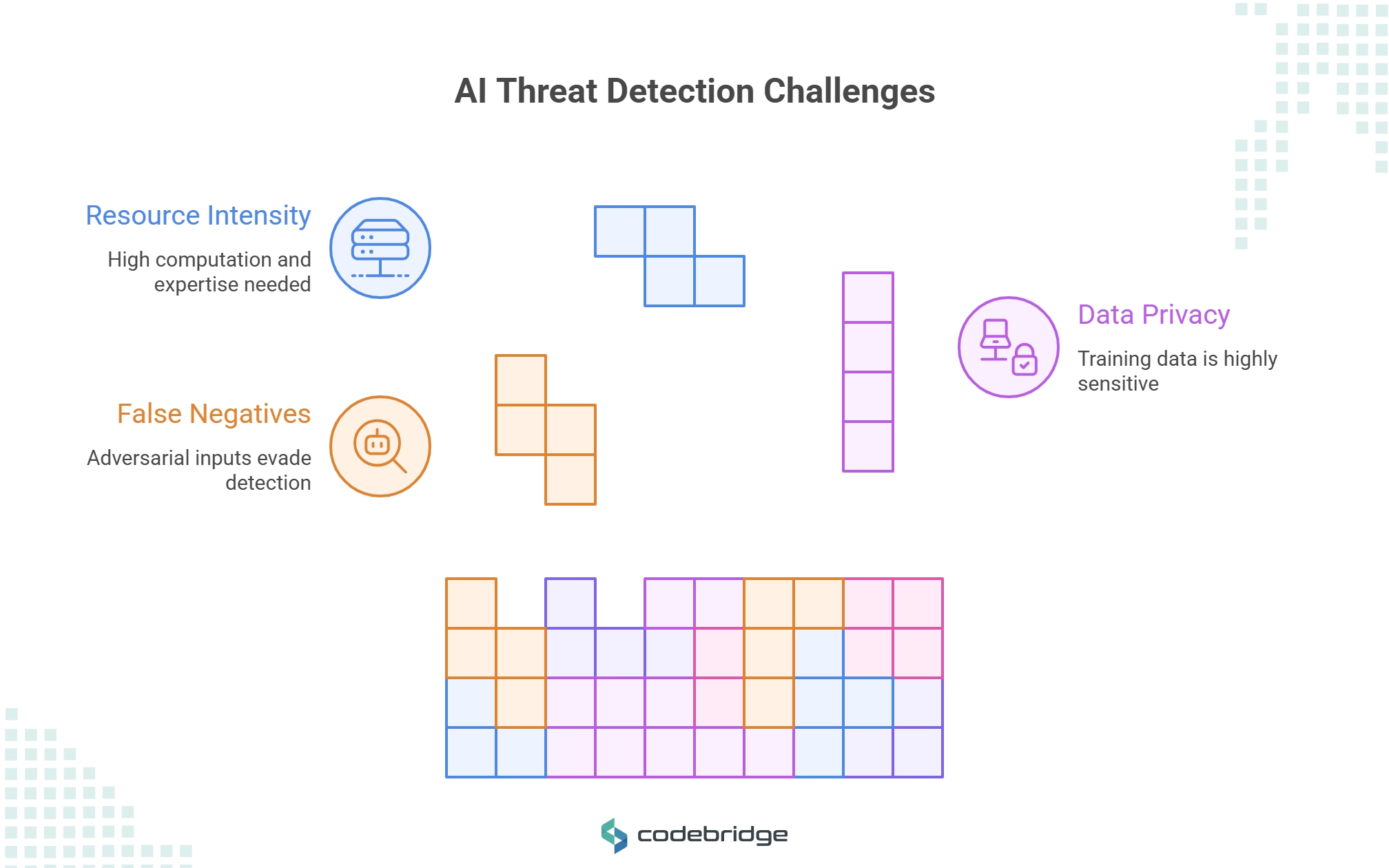

System Limitations and Risk Factors

Despite their capabilities, AI threat detection systems face significant limitations that organizations must understand and address. False negatives remain problematic when models face adversarially crafted inputs specifically designed to evade detection. Attackers increasingly use AI to generate attacks that exploit specific vulnerabilities in detection algorithms.

Data privacy concerns arise from the need to train models on sensitive security logs and user behavior data. Organizations must balance detection effectiveness with privacy requirements, particularly in regulated industries with strict data protection mandates.

Resource intensity represents a significant barrier to implementation, as effective AI systems require substantial computational resources including GPU clusters, high-speed networking, and specialized expertise. These requirements can be prohibitive for smaller organizations or those with limited IT budgets.

Performance Optimization and Benchmarking

Rigorous performance measurement is essential for maintaining AI system effectiveness. Key performance indicators must include precision and recall metrics that accurately reflect detection performance, processing latency measurements that ensure real-time response capabilities, and resource utilization monitoring that validates cost-effectiveness.

Benchmarking should include regular testing against known attack patterns, novel threat scenarios, and adversarial examples designed to test system resilience. Organizations should also conduct regular red teaming exercises that validate AI system performance against sophisticated multi-stage attacks.

What must organizations know about artificial intelligence security risks?

Generative AI introduces novel risk categories that traditional cybersecurity frameworks don't adequately address. Model theft represents a significant concern, where attackers can replicate proprietary AI models through repeated API queries or by extracting model parameters from deployment environments. This threat is particularly serious for organizations that have invested heavily in developing custom AI capabilities.
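A common first line of defense against extraction through repeated API queries is per-client monitoring of query volume. The sketch below keeps a sliding window of timestamps per API key and flags clients that exceed a limit. The window and limit are hypothetical and need tuning, the code assumes each client's timestamps arrive in non-decreasing order, and patient attackers who stay under the limit require additional signals such as analysis of the query distribution.

```python
from collections import defaultdict, deque


class ExtractionMonitor:
    """Flags API clients whose query volume in a sliding time window looks like
    systematic model probing. Window and limit are hypothetical tuning values."""

    def __init__(self, window_seconds=3600, max_queries=1000):
        self.window = window_seconds
        self.max_queries = max_queries
        self.history = defaultdict(deque)  # client_id -> timestamps inside the window

    def record(self, client_id, timestamp):
        """Record one query; return True if the client exceeds the limit."""
        recent = self.history[client_id]
        recent.append(timestamp)
        while recent and timestamp - recent[0] >= self.window:
            recent.popleft()  # expire queries that fell out of the window
        return len(recent) > self.max_queries


monitor = ExtractionMonitor(window_seconds=60, max_queries=3)
print([monitor.record("key-123", t) for t in (0, 1, 2, 3)])  # → [False, False, False, True]
```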

Data poisoning attacks represent another critical risk, where malicious actors inject corrupted data into training datasets to compromise model behavior. Research demonstrates that these attacks can reduce model accuracy by up to 27% in critical applications (arXiv), potentially undermining business processes that depend on AI decision-making.

Adversarial Attack Vectors and Defense Strategies

Input manipulation attacks exploit AI system vulnerabilities by crafting inputs specifically designed to cause misclassification or inappropriate responses. These attacks can be particularly dangerous in security applications, where adversarial inputs might cause legitimate activities to be flagged as threats or, conversely, allow malicious activities to pass undetected.

Defense against adversarial attacks requires multiple complementary strategies. Adversarial training exposes models to manipulated inputs during development, improving their resilience against input-based attacks. Input sanitization and validation can detect and filter potentially malicious inputs before they reach AI models.

Ensemble methods that combine multiple AI models can provide enhanced resilience, as attacks that successfully fool one model may fail against others in the ensemble. Continuous monitoring and anomaly detection can identify unusual patterns in AI system behavior that may indicate ongoing attacks.
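The two defenses can be combined in a short sketch: inputs are first checked against the feature ranges seen in clean training data, then several independently built models vote, and low agreement is routed to review rather than trusted. The feature names, bounds, and toy models are hypothetical.

```python
from collections import Counter

# Hypothetical per-feature ranges observed in clean training data.
FEATURE_BOUNDS = {"bytes_out": (0, 10_000_000), "dest_ports": (1, 1024)}


def validate(features):
    """Input sanitization: reject missing features or values outside trained ranges."""
    for name, (low, high) in FEATURE_BOUNDS.items():
        value = features.get(name)
        if value is None or not low <= value <= high:
            return False
    return True


def ensemble_verdict(models, features, min_agreement=2 / 3):
    """Majority vote across independently built models. Low agreement is routed
    to review, since disagreement can itself signal an evasion attempt."""
    if not validate(features):
        return "rejected", 0.0
    votes = Counter(model(features) for model in models)
    label, count = votes.most_common(1)[0]
    agreement = count / len(models)
    return (label if agreement >= min_agreement else "needs_review"), agreement


# Three toy detectors keyed on different features (illustrative only).
models = [
    lambda f: "malicious" if f["bytes_out"] > 5_000_000 else "benign",
    lambda f: "malicious" if f["dest_ports"] > 100 else "benign",
    lambda f: "malicious" if f["bytes_out"] > 1_000_000 and f["dest_ports"] > 20 else "benign",
]
print(ensemble_verdict(models, {"bytes_out": 6_000_000, "dest_ports": 200}))  # → ('malicious', 1.0)
print(ensemble_verdict(models, {"bytes_out": -5, "dest_ports": 200}))         # → ('rejected', 0.0)
```

An attacker who shapes traffic to slip under one model's threshold still has to fool the others, and a split vote is itself a useful monitoring signal.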

Governance Failures and Shadow AI Risks

One of the most significant enterprise risks comes from ungoverned AI deployment. 97% of organizations experiencing AI-related security incidents lacked proper AI access controls, while 63% had no governance policies for managing AI systems (IBM 2025 Data Breach Report).

Shadow AI, meaning unauthorized AI tool usage by employees, adds an average of $670,000 to breach costs, reflecting the risks of unmanaged AI deployments that lack proper security controls and monitoring.
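Discovering shadow AI often starts with egress data the organization already has. The sketch below assumes proxy logs can be exported as (user, team, URL) records and compares requested hosts against a list of generative-AI API endpoints; the host list is illustrative and non-exhaustive, and the sanctioned (team, host) pairs are hypothetical.

```python
from collections import Counter
from urllib.parse import urlparse

# Illustrative, non-exhaustive set of generative-AI API hosts; maintain your own list.
AI_SERVICE_HOSTS = {"api.openai.com", "api.anthropic.com", "generativelanguage.googleapis.com"}
# Hypothetical (team, host) pairs approved through the AI governance process.
SANCTIONED = {("data-science", "api.openai.com")}


def find_shadow_ai(proxy_log):
    """proxy_log: iterable of (user, team, url). Counts unsanctioned AI-service requests."""
    hits = Counter()
    for user, team, url in proxy_log:
        host = urlparse(url).hostname
        if host in AI_SERVICE_HOSTS and (team, host) not in SANCTIONED:
            hits[(user, team, host)] += 1
    return hits


log = [
    ("ana", "data-science", "https://api.openai.com/v1/chat/completions"),
    ("ben", "finance", "https://api.openai.com/v1/chat/completions"),
    ("ben", "finance", "https://api.openai.com/v1/files"),
    ("cy", "hr", "https://intranet.example.com/"),
]
print(find_shadow_ai(log))
```

The same service can be sanctioned for one team and unsanctioned for another, which is why the allow-list is keyed on (team, host) rather than host alone.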

Comprehensive Regulatory Compliance Framework for AI Security

The regulatory landscape for AI security is rapidly evolving, with multiple frameworks providing guidance for organizations implementing AI systems. The NIST AI Risk Management Framework (AI RMF 1.0) provides voluntary guidance for managing AI risks across the entire system lifecycle, emphasizing trustworthiness, safety, security, resilience, and explainability (NIST AI RMF).

The framework is organized around four core functions: Govern (establishing a risk management culture and policies), Map (identifying AI risks within specific contexts), Measure (assessing and tracking identified risks), and Manage (prioritizing and mitigating risks through appropriate controls).

ISO/IEC 27001 requirements for Information Security Management Systems must be adapted for AI-specific risks, including data minimization principles, user consent mechanisms for AI processing, and specialized audit procedures for AI decision-making systems.

Zero Trust Architecture for AI Systems

NIST SP 800-207 defines Zero Trust Architecture principles that are particularly relevant for AI system security (NIST Zero Trust). The framework's core tenets require continuous verification of all entities, including AI systems and their data sources, strict access controls based on dynamic risk assessment, and comprehensive monitoring of all AI system interactions.

Zero Trust implementation for AI systems involves several critical components: Policy engines that evaluate AI system access requests based on current threat intelligence and behavioral baselines, Policy administrators that manage AI system permissions and access controls dynamically, and Policy enforcement points that control AI system access to data and computational resources.

The framework emphasizes the importance of per-session access control for AI systems, ensuring that each AI operation is individually authorized rather than granting broad, persistent access rights. This approach is particularly important for AI systems that process sensitive data or make high-stakes decisions.
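The three logical components named above can be sketched as small classes. The class names echo the terminology of NIST SP 800-207, but the code itself is a hypothetical illustration, not an API defined by the standard or any product: the policy engine decides each request from an allow-list and a current risk score, the administrator issues a short-lived token scoped to one resource and action, and the enforcement point checks every call against that token.

```python
import secrets
import time


class PolicyEngine:
    """Decides each request from an allow-list plus a current risk score (hypothetical inputs)."""

    def __init__(self, allowed, max_risk=0.5):
        self.allowed = allowed  # {(subject, resource): {"read", "write", ...}}
        self.max_risk = max_risk

    def decide(self, subject, resource, action, risk_score):
        permitted = action in self.allowed.get((subject, resource), set())
        return permitted and risk_score <= self.max_risk


class PolicyAdministrator:
    """Turns an allow decision into a short-lived token scoped to one resource and action."""

    def __init__(self, engine, ttl_seconds=60):
        self.engine = engine
        self.ttl = ttl_seconds
        self.sessions = {}

    def open_session(self, subject, resource, action, risk_score, now=None):
        now = time.time() if now is None else now
        if not self.engine.decide(subject, resource, action, risk_score):
            return None
        token = secrets.token_urlsafe(16)
        self.sessions[token] = (subject, resource, action, now + self.ttl)
        return token


class PolicyEnforcementPoint:
    """Guards the data source and checks every call against its session."""

    def __init__(self, admin):
        self.admin = admin

    def allow(self, token, subject, resource, action, now=None):
        now = time.time() if now is None else now
        session = self.admin.sessions.get(token)
        if session is None:
            return False
        *scope, expires = session
        if now > expires:
            del self.admin.sessions[token]  # expired sessions are revoked, never extended
            return False
        return tuple(scope) == (subject, resource, action)


engine = PolicyEngine({("triage-model", "alerts-db"): {"read"}})
admin = PolicyAdministrator(engine, ttl_seconds=60)
pep = PolicyEnforcementPoint(admin)
token = admin.open_session("triage-model", "alerts-db", "read", risk_score=0.2, now=0)
print(pep.allow(token, "triage-model", "alerts-db", "read", now=30))   # → True
print(pep.allow(token, "triage-model", "alerts-db", "write", now=30))  # → False
print(pep.allow(token, "triage-model", "alerts-db", "read", now=61))   # → False
```

Because each token covers a single resource and action and expires quickly, a compromised AI component cannot quietly reuse a broad, persistent grant.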

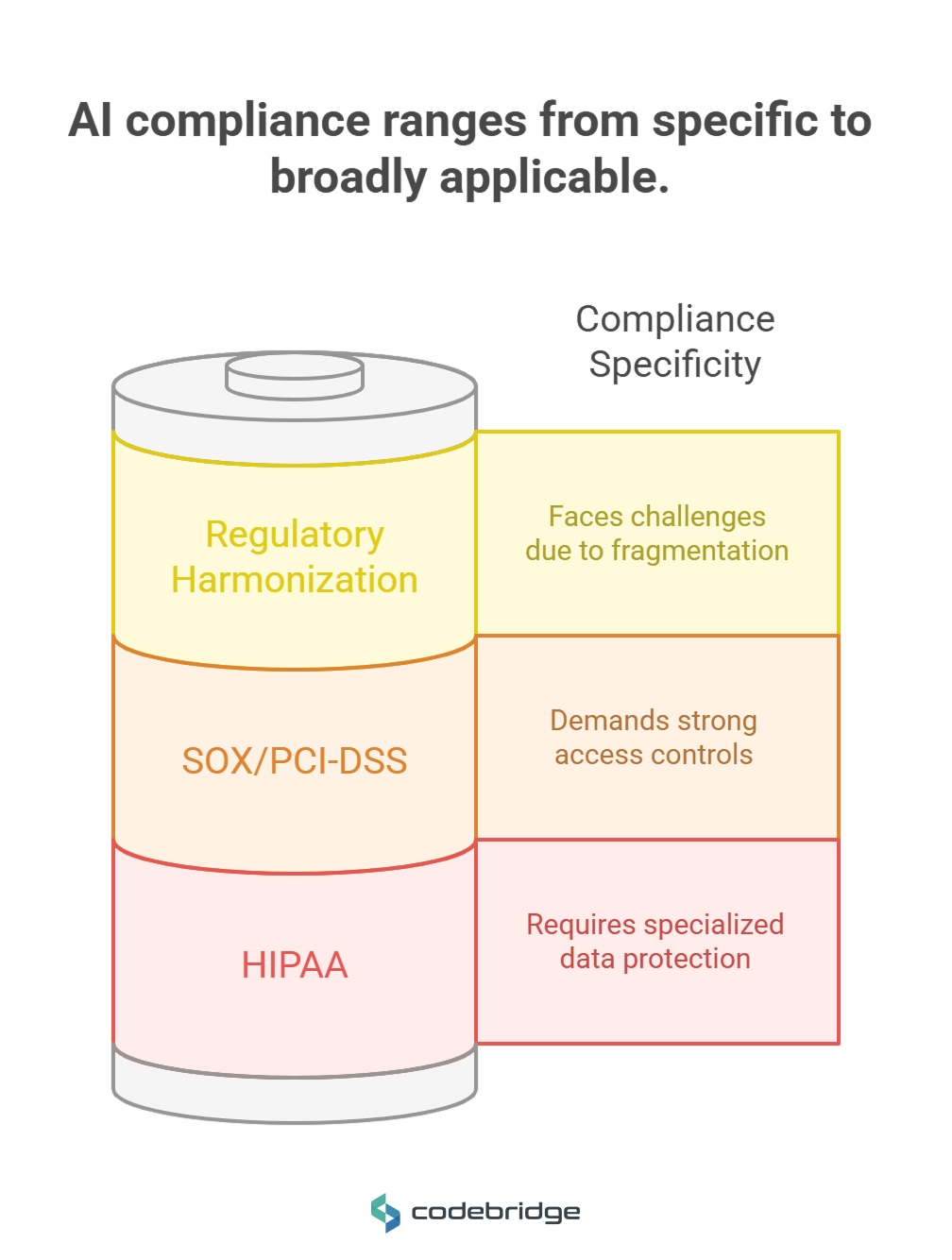

Sector-Specific Compliance Requirements

Healthcare (HIPAA) Compliance for AI systems requires specialized controls including encryption of Protected Health Information (PHI) during AI processing, audit trails for all AI access to patient data, and risk assessments that specifically address AI-related privacy risks. Healthcare organizations must ensure that AI systems maintain the confidentiality, integrity, and availability of PHI while providing the clinical decision support capabilities that improve patient outcomes.

Financial Services Regulation under frameworks like SOX and PCI-DSS requires AI systems to maintain detailed audit trails, implement strong access controls for financial data processing, and provide explainable decision-making for regulatory reporting. Financial institutions must demonstrate that AI systems don't introduce bias or errors that could result in discriminatory lending practices or financial reporting inaccuracies.

International Regulatory Harmonization remains challenging, with 76% of CISOs reporting regulatory fragmentation as a major obstacle to implementing consistent AI security controls across global operations. Organizations must navigate different requirements across jurisdictions while maintaining consistent security standards.
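Audit trails recur across the HIPAA and financial-services obligations above. One common technique for making such a trail tamper-evident is hash chaining, sketched below: each entry commits to the hash of the previous one, so editing or deleting history breaks verification. The field names and actor labels are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64


def _entry_hash(body):
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditTrail:
    """Append-only log in which each entry commits to the hash of the previous one,
    so editing or deleting any earlier entry breaks verification."""

    def __init__(self):
        self.entries = []

    def append(self, actor, action, record_id, timestamp):
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"actor": actor, "action": action, "record": record_id, "ts": timestamp, "prev": prev}
        self.entries.append({**body, "hash": _entry_hash(body)})

    def verify(self):
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev"] != prev or _entry_hash(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


trail = AuditTrail()
trail.append("discharge-summary-model", "read", "patient-123", "2024-05-01T10:00:00Z")
trail.append("discharge-summary-model", "read", "patient-456", "2024-05-01T10:00:05Z")
print(trail.verify())                       # → True
trail.entries[0]["record"] = "patient-999"  # tamper with history
print(trail.verify())                       # → False
```

Hash chaining makes tampering detectable, not impossible: anyone able to rewrite the whole log can recompute every hash, so the latest hash is usually anchored in a store the log's writers cannot modify. The sketch illustrates the mechanism only and is not by itself evidence of regulatory compliance.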

How can executive teams address AI security concerns effectively?

Executive teams require structured approaches to AI security decision-making that balance innovation opportunities with risk management imperatives. 72% of organizations report increased AI cyber-risks, necessitating board-level oversight and strategic planning for AI security investments.

Effective risk assessment methodologies must quantify potential AI-driven threat scenarios and their financial impact, evaluate current AI security posture against industry benchmarks, assess regulatory compliance requirements and potential penalties, and determine resource requirements for implementing adequate AI security controls.

Budget allocation strategies should consider both direct costs (AI security tools, infrastructure, personnel) and indirect costs (training, process changes, regulatory compliance). Organizations typically allocate 10-15% of their overall cybersecurity budget to AI-specific security measures, though this percentage is increasing as AI adoption accelerates.

Governance Structure and Organizational Alignment

Successful AI security programs require clear governance structures that span technical and business functions. AI Ethics and Security Committees should include representatives from IT security, legal and compliance, business units using AI, and executive leadership. These committees must establish policies for AI system development, deployment, and ongoing monitoring while ensuring alignment with overall business objectives.

Cross-functional coordination is essential for addressing AI security risks that span traditional organizational boundaries. Security teams must work closely with data science groups, software development teams, and business units to ensure that security considerations are integrated throughout the AI lifecycle.

Communication and Board Reporting

Executive communication about AI security requires clear, actionable metrics that enable informed decision-making. Key performance indicators should include AI system security posture scores, number and severity of AI-related security incidents, compliance status with relevant AI security frameworks, and return on investment for AI security initiatives.

Regular board reporting should emphasize business impact rather than technical details, focusing on how AI security investments support business objectives, protect company reputation, and ensure regulatory compliance. Reports should include trend analysis that demonstrates improving or degrading security posture over time.

What is the strategic balance between traditional cybersecurity and AI-enhanced security?

Organizations face complex decisions about balancing investments in traditional cybersecurity controls with emerging AI-enhanced security capabilities. While legacy security tools remain foundational for protecting against established threat types, AI-enhanced solutions provide adaptive defenses that evolve alongside emerging threats.

Cost-benefit analysis must consider total cost of ownership including not only initial acquisition costs but also ongoing operational expenses, training requirements, and integration complexity. Return on investment calculations should account for quantifiable benefits like reduced breach costs and operational efficiency improvements, as well as intangible benefits like enhanced threat visibility and improved security team effectiveness.

The global average cost of data breaches reached $4.88 million in 2024, representing a 10% increase from the previous year (IBM Security). This escalating cost trend underscores the importance of investing in advanced security technologies that can prevent or minimize breach impact.

Risk Assessment Methodologies and Decision Frameworks

Effective technology selection requires sophisticated risk assessment methodologies that account for both current threat landscapes and future security challenges. Organizations must evaluate their specific risk profiles, considering factors like industry sector, geographic presence, regulatory requirements, and existing security maturity levels.

Quantitative risk models should incorporate probability distributions for different threat scenarios, potential impact ranges for various attack types, effectiveness ratings for different security technologies, and sensitivity analysis for key assumptions about threat evolution and technology effectiveness.
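A minimal Monte Carlo sketch of such a model for a single threat scenario, assuming a fixed annual breach probability, a uniform loss range, and a control that scales both by hypothetical multipliers. Both runs consume identical random draws, so differences reflect the control rather than sampling noise, and looping over the probability assumption gives a basic sensitivity analysis. None of the inputs are benchmark figures.

```python
import random
from statistics import mean, quantiles


def simulate_annual_loss(p_breach, impact_low, impact_high, control=(1.0, 1.0),
                         trials=10_000, seed=7):
    """
    Monte Carlo estimate of one year's breach loss for a single threat scenario.
    p_breach:        assumed annual breach probability
    impact_low/high: assumed loss range in USD (uniform, for simplicity)
    control:         (probability multiplier, impact multiplier) for a security control
    Returns (expected annual loss, 95th-percentile annual loss).
    """
    rng = random.Random(seed)
    p_mult, impact_mult = control
    losses = []
    for _ in range(trials):
        breached = rng.random() < p_breach * p_mult
        # Draw the impact every trial so runs with and without a control use identical numbers.
        impact = rng.uniform(impact_low, impact_high) * impact_mult
        losses.append(impact if breached else 0.0)
    return mean(losses), quantiles(losses, n=20)[-1]


# Sensitivity analysis over the breach-probability assumption.
# The (0.7, 0.6) control effect is a hypothetical input, not a measured figure.
for p in (0.1, 0.2, 0.3):
    base, _ = simulate_annual_loss(p, 1e6, 8e6)
    with_control, _ = simulate_annual_loss(p, 1e6, 8e6, control=(0.7, 0.6))
    print(f"p={p}: expected loss ${base:,.0f} -> ${with_control:,.0f}")
```

Reporting the 95th percentile alongside the mean captures tail risk, which often matters more to a board than the average year.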

Qualitative assessments must address factors that are difficult to quantify, including organizational readiness for AI adoption, cultural alignment with new security approaches, and strategic importance of maintaining security technology leadership.

Future Outlook: AI Security Evolution Through 2025 and Beyond

The AI security landscape will continue evolving rapidly through 2025 and beyond, driven by advances in both offensive and defensive AI capabilities. Explainable AI (XAI) technologies will become increasingly important for security applications, as organizations need to understand and validate AI-driven security decisions (DARPA XAI Initiative).

XAI techniques like SHAP (Shapley Additive Explanations) and LIME (Local Interpretable Model-Agnostic Explanations) are being integrated into cybersecurity platforms to provide transparent threat detection and incident analysis (Cybersecurity XAI Research). These technologies enable security analysts to understand why AI systems flagged specific activities as threats, improving decision confidence and reducing false positive rates.
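SHAP builds on Shapley values from cooperative game theory. To show what those attributions mean, the sketch below computes exact Shapley values by brute force for a hypothetical three-feature login-risk score, with absent features set to a "normal" baseline. It is not the `shap` library's API; real tooling approximates this computation because the exact form is exponential in the number of features.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """
    Exact Shapley values for model(x) relative to model(baseline).
    Features absent from a coalition take their baseline value. Brute force is
    exponential in the number of features; SHAP tooling approximates this at scale.
    """
    names = list(x)
    n = len(names)

    def value(coalition):
        return model({f: (x[f] if f in coalition else baseline[f]) for f in names})

    phi = {}
    for f in names:
        others = [g for g in names if g != f]
        total = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {f}) - value(s))
        phi[f] = total
    return phi


def login_risk(f):
    """Hypothetical detector score with an interaction between two features."""
    return 0.4 * f["new_device"] + 0.3 * f["odd_hour"] + 0.3 * f["new_device"] * f["foreign_ip"]


event = {"new_device": 1, "odd_hour": 1, "foreign_ip": 1}
normal = {"new_device": 0, "odd_hour": 0, "foreign_ip": 0}
print({k: round(v, 2) for k, v in shapley_values(login_risk, event, normal).items()})
# → {'new_device': 0.55, 'odd_hour': 0.3, 'foreign_ip': 0.15}
```

The interaction term is split evenly between `new_device` and `foreign_ip`, and the attributions sum to the gap between the event's score and the baseline score, which is what lets an analyst read them as a complete breakdown of why an alert fired.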

Automated threat intelligence sharing will become more sophisticated, with AI systems collaborating across organizations to identify and respond to emerging threats. This collaboration will be essential for staying ahead of AI-powered attacks that can evolve and adapt faster than human analysts can track.

Regulatory Evolution and Compliance Requirements

The regulatory environment will continue evolving to address AI-specific risks and requirements. The White House Executive Order on AI mandates red teaming for powerful AI systems before public deployment, establishing precedent for broader AI testing requirements.

AI Red Teaming will become a standard practice for enterprise AI deployment, with specialized services and tools emerging to support systematic adversarial testing (Mindgard AI and Practical DevSecOps). These testing approaches simulate sophisticated attack scenarios to identify vulnerabilities before they can be exploited by real adversaries.

The EU AI Act and similar regulations will drive standardization of AI risk assessment and mitigation practices, creating compliance requirements that extend beyond traditional cybersecurity frameworks to address AI-specific ethical and safety concerns.

Industry Transformation and Competitive Implications

Organizations that successfully integrate AI security capabilities stand to gain significant competitive advantages through reduced security overhead, faster incident response, and enhanced threat visibility. The pressure to act is already visible: 74% of IT security professionals report that AI-powered threats are significantly affecting their organizations, and 97% of cybersecurity professionals fear their organizations will face AI-generated security incidents.

The organizations that proactively address these challenges through comprehensive AI security programs will be better positioned to leverage AI technologies safely and effectively, while those that fail to adapt may find themselves increasingly vulnerable to sophisticated AI-powered attacks.

Continuous adaptation and learning will become essential organizational capabilities, because the AI security landscape is evolving at unprecedented speed. Organizations must build internal expertise, establish strong vendor partnerships, and maintain flexible architectures that can adapt to emerging threats and technologies.

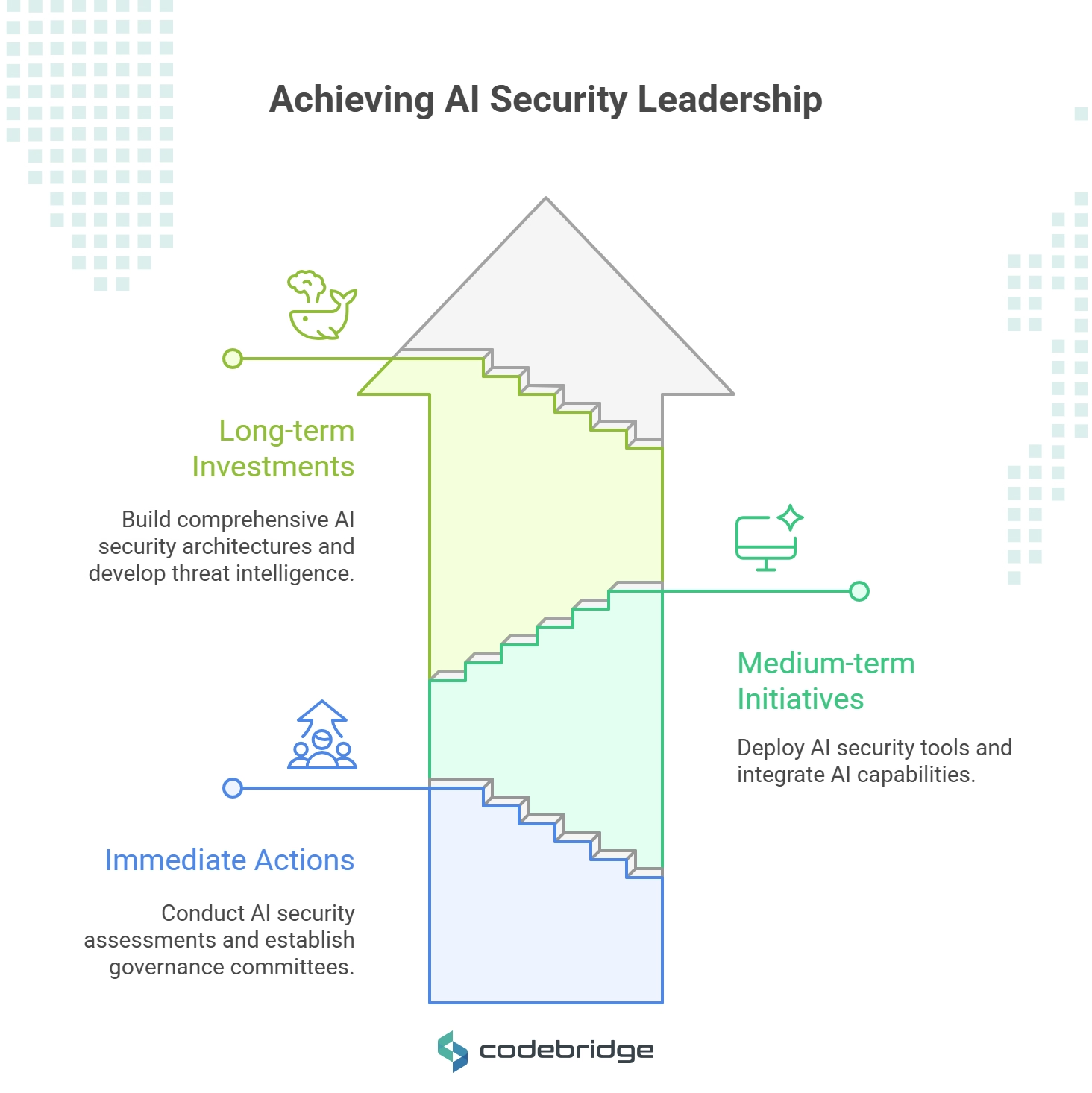

Key Strategic Priorities for Enterprise Leaders:

Immediate Actions (0-6 months): Conduct comprehensive AI security assessments, establish AI governance committees, implement shadow AI discovery and controls, and begin AI security tool pilot programs in controlled environments.

Medium-term Initiatives (6-18 months): Deploy AI-powered security tools in production environments, integrate AI capabilities with existing security infrastructure, develop internal AI security expertise through training and hiring, and establish regular AI red teaming programs.

Long-term Strategic Investments (18+ months): Build comprehensive AI security architectures that span the entire enterprise, develop advanced AI threat intelligence capabilities, establish leadership positions in AI security innovation, and create organizational cultures that embrace continuous adaptation to emerging threats.
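Shadow AI discovery, one of the immediate actions above, often starts with egress or proxy logs. The sketch below counts requests to public generative-AI API hosts from users who are not on an approved list. The log format (`<timestamp> <user> <host>`), the user names, and the short domain list are assumptions for illustration. A real deployment would parse its proxy's actual log schema and maintain a much broader, regularly updated domain inventory.

```python
from collections import Counter
from typing import Iterable, Set

# Illustrative, non-exhaustive set of public generative-AI API hostnames.
AI_SERVICE_DOMAINS = {
    "api.openai.com",
    "api.anthropic.com",
    "generativelanguage.googleapis.com",
}


def find_shadow_ai(log_lines: Iterable[str], approved_users: Set[str]) -> Counter:
    """Count (user, host) pairs for AI-service requests made by unapproved users.

    Assumes a hypothetical space-separated log format: "<timestamp> <user> <host>".
    """
    hits: Counter = Counter()
    for line in log_lines:
        parts = line.split()
        if len(parts) < 3:
            continue  # skip malformed lines
        _, user, host = parts[:3]
        host = host.lower()
        if host in AI_SERVICE_DOMAINS and user not in approved_users:
            hits[(user, host)] += 1
    return hits


sample_log = [
    "2025-05-01T09:00:00Z alice api.openai.com",
    "2025-05-01T09:01:00Z bob api.anthropic.com",
    "2025-05-01T09:02:00Z bob api.anthropic.com",
    "2025-05-01T09:03:00Z carol intranet.example.com",
]
report = find_shadow_ai(sample_log, approved_users={"alice"})
print(report)
```

Here `bob` is reported with two unapproved requests. `alice` is on the approved list and `carol` only reached an internal host, so neither appears.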

The organizations that begin this transformation today will be best positioned to thrive in an AI-driven future, while those that delay risk becoming increasingly vulnerable to sophisticated threats that traditional security approaches cannot address.

Conclusion and Strategic Recommendations

The transformation of cybersecurity through generative AI represents both the greatest opportunity and the most significant challenge facing enterprise security organizations today. The statistical evidence is compelling: organizations implementing extensive AI security measures achieve 45.6% lower breach costs, identify threats nearly 100 days faster, and demonstrate superior resilience against sophisticated attacks.

However, success requires more than simply deploying AI-powered tools. Organizations must fundamentally rethink their approach to cybersecurity, embracing continuous verification principles, implementing comprehensive governance frameworks, and building organizational capabilities that can adapt to rapidly evolving threat landscapes.

The strategic imperative is clear: organizations that master AI security integration will gain sustainable competitive advantages through enhanced threat protection, operational efficiency, and innovation capability. Those that fail to adapt will find themselves increasingly vulnerable to AI-powered attacks that exploit traditional security blind spots.

Schedule a consultation with Codebridge's AI Security Experts for a comprehensive assessment of your current capabilities and a customized roadmap for implementing cutting-edge AI security solutions. Our team of specialists can help you navigate the complex landscape of AI threats and defenses, ensuring your organization stays ahead of emerging risks while maximizing the business value of AI technologies.

FAQ

What are the most effective AI security solutions for enterprises in 2026?

AI security solutions use machine learning and anomaly detection to identify threats in real time, and they integrate automated incident response to contain attacks quickly. Enterprise platforms now offer unified dashboards that combine behavioral analysis, predictive modeling, and zero-trust controls. These capabilities streamline security operations and reduce manual workloads.
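Anomaly detection can be illustrated in its simplest statistical form. First learn a baseline, then flag observations that fall too many standard deviations from it. The sketch below applies a z-score test to hourly failed-login counts. The counts and the threshold of 3 are invented for illustration, and commercial platforms use considerably richer behavioral models.

```python
import statistics
from typing import List


def detect_anomalies(baseline: List[float], observations: List[float], threshold: float = 3.0) -> List[float]:
    """Flag observations more than `threshold` standard deviations from the baseline mean."""
    mean = statistics.mean(baseline)
    stdev = statistics.stdev(baseline)  # sample standard deviation; needs at least 2 points
    if stdev == 0:
        # A perfectly flat baseline: treat any deviation as anomalous.
        return [x for x in observations if x != mean]
    return [x for x in observations if abs(x - mean) / stdev > threshold]


# Hypothetical hourly counts of failed logins for one account.
baseline_hours = [4, 6, 5, 7, 5, 6, 4, 5]
new_hours = [6, 5, 48, 7]
flagged = detect_anomalies(baseline_hours, new_hours)
print(flagged)  # [48]
```

The burst of 48 failed logins is flagged, while the normal hours pass. Scoring new data against a fixed historical baseline keeps the outlier out of the statistics. If the new hours were scored against themselves, a single large outlier would inflate the standard deviation and partly hide itself.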

What is AI security and why is it critical for modern businesses?

AI security refers to measures that protect AI models from adversarial inputs, data poisoning, and unauthorized access. It safeguards the integrity and reliability of AI-driven systems used in decision-making. With AI now embedded in core business functions, robust AI security prevents costly breaches and maintains compliance. It’s essential for ensuring trustworthy AI outputs.

What are the top AI security risks organizations face today?

AI security risks include adversarial attacks that manipulate model inputs and data poisoning that corrupts training datasets. Model extraction attacks can steal intellectual property, while prompt injections target language models. These threats exploit AI’s reliance on data and algorithms, undermining system reliability. Awareness of these risks is key to securing AI deployments.
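The Fast Gradient Sign Method (FGSM) is a classic adversarial-example technique that shows why manipulated inputs work. The sketch below applies it to a toy logistic-regression "maliciousness" model. Its weights are hand-picked for illustration, whereas a real detector would be trained and far larger. FGSM nudges every input feature by a small amount `eps`, each in whichever direction increases the model's loss.

```python
import math
from typing import List


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(w: List[float], b: float, x: List[float]) -> float:
    """Probability that input x is malicious under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(g: float) -> int:
    return (g > 0) - (g < 0)


def fgsm(w: List[float], b: float, x: List[float], y: int, eps: float) -> List[float]:
    """Fast Gradient Sign Method: move each feature by eps in the direction that increases the loss."""
    p = predict(w, b, x)
    # For logistic regression with cross-entropy loss, dLoss/dx = (p - y) * w.
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]


# Hand-picked toy weights standing in for a trained detector.
w, b = [2.0, -1.0, 0.5], -0.5
x = [1.0, 0.5, 0.2]  # a sample the model correctly scores as malicious (y = 1)
x_adv = fgsm(w, b, x, y=1, eps=0.4)

print(f"original score:    {predict(w, b, x):.3f}")
print(f"adversarial score: {predict(w, b, x_adv):.3f}")
```

With these numbers the score falls from about 0.75 to about 0.43. That flips the verdict at a 0.5 threshold, even though no feature changed by more than 0.4. Adversarial training, described in the next answer, folds perturbed examples like `x_adv` back into the training data so the model learns to resist them.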

How to secure AI models and prevent adversarial attacks?

Securing AI models involves adversarial training, in which models learn to resist manipulated inputs, and robust input validation pipelines that filter out malicious data. Differential privacy techniques protect sensitive training data from inference attacks. Real-time monitoring flags anomalous model behavior, while regular security audits test model resilience. Together, these practices strengthen AI defenses.
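Differential privacy works by adding calibrated noise. For training data in practice, this usually means DP-SGD (per-example gradient clipping plus Gaussian noise), which requires an ML framework. The sketch below instead shows the underlying principle with the simpler Laplace mechanism. It answers a counting query with noise drawn from Laplace(0, Δf/ε), where Δf is the query's sensitivity and ε is the privacy budget. The patient records and `epsilon = 0.5` are illustrative assumptions.

```python
import random
from typing import Callable, Iterable


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw from Laplace(0, scale) as the difference of two i.i.d. exponentials with mean `scale`."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records: Iterable[dict], predicate: Callable[[dict], bool],
                  epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy via the Laplace mechanism.

    Adding or removing one record changes a count by at most 1, so the
    sensitivity is 1 and the noise scale is 1 / epsilon.
    """
    true_count = sum(1 for r in records if predicate(r))
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)


rng = random.Random(42)  # seeded only to make the example reproducible
patients = [{"age": a} for a in (34, 61, 47, 72, 29, 55)]
noisy = private_count(patients, lambda r: r["age"] > 50, epsilon=0.5, rng=rng)
print(f"noisy count of patients over 50: {noisy:.2f}")
```

A smaller `epsilon` adds more noise and gives stronger privacy. Any single released value is deliberately imprecise, and that property limits what an attacker can infer about any one record.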

Which AI cybersecurity software provides the best ROI for businesses?

AI cybersecurity software combines automated threat detection, predictive analytics, and orchestration of incident response. Platforms with integrated machine learning modules deliver rapid threat identification and streamlined workflows. ROI is maximized when solutions scale with AI infrastructure and offer compliance automation. Key features include unified visibility, rule-less detection, and easy integration with existing security stacks.

How does generative AI cybersecurity differ from traditional security approaches?

Generative AI cybersecurity uses AI models to simulate attack scenarios and anticipate emerging threats. Signature-based systems recognize only known attack patterns. Generative AI security instead uses synthetic data generation and continuous learning to adapt defenses dynamically. This proactive approach can help detect zero-day exploits and novel attack vectors that have no existing signature, which makes protection faster and more adaptive.