The race to implement AI in startup environments has never been more critical. With 83% of companies reporting AI as a top business priority and venture funding increasingly favoring AI-enabled startups, founders who delay risk falling behind competitors who are already capturing market share through intelligent automation and enhanced user experiences. Our custom software development expertise helps you navigate complex technical decisions while delivering measurable business impact.

This comprehensive guide walks you through how to implement AI strategically, from initial assessment through production deployment. You'll discover proven frameworks for identifying high-impact use cases, building robust data foundations, and delivering measurable ROI within 90-180 days, all while avoiding common pitfalls that drain resources without moving business metrics.


Why Now? Market Dynamics & Founder Imperatives

Nearly all companies are investing in AI, and 92% plan to increase their investment over the next three years, according to a McKinsey report. Users now expect personalized experiences, instant support, and intelligent features as table stakes. Meanwhile, investors scrutinize burn rates more closely, demanding startups achieve more with less. AI offers a unique opportunity to satisfy both pressures simultaneously, enhancing product capabilities while reducing operational costs.

Consider the numbers that demonstrate AI's immediate impact:


- Customer support AI achieves 40-60% ticket deflection rates

- AI-powered code generation accelerates development cycles by 25-35%

- Operational cost reductions of 15-30% through intelligent automation

- Revenue growth of 10-25% from personalized user experiences

For cash-conscious startups, these efficiency gains translate directly to extended runway and competitive advantage.

Where AI Actually Moves the Needle in Software Businesses

Not all AI implementations create equal value. The highest-impact applications typically fall into four strategic categories:

Product Intelligence:

- Recommendation engines for content and features

- Personalization algorithms for user experiences

- Intelligent search and discovery systems

Operational Efficiency:

- Automated testing and quality assurance

- Code review and security scanning

- Deployment optimization and monitoring

Growth Acceleration:

- Churn prediction and retention modeling

- Pricing optimization and dynamic pricing

- Lead scoring and sales forecasting

Customer Experience:

- Intelligent support chatbots and routing

- Automated onboarding and user guidance

- Proactive issue detection and resolution

The key is identifying where AI can compress time-to-value cycles or unlock revenue opportunities that were previously impossible. A B2B SaaS platform might use AI to automatically categorize feature requests and predict implementation effort, while an e-commerce startup could deploy dynamic pricing algorithms that respond to market conditions in real time.

Callout: Avoid Vanity Pilots. Every AI initiative should tie directly to revenue growth, margin improvement, or cycle time reduction. Resist the temptation to build "AI for AI's sake"; focus instead on use cases where intelligent automation solves genuine business problems with measurable outcomes.

AI Strategy for Startups: A CEO/CTO Framework

Successful AI implementation begins with systematic opportunity identification across your entire value chain:

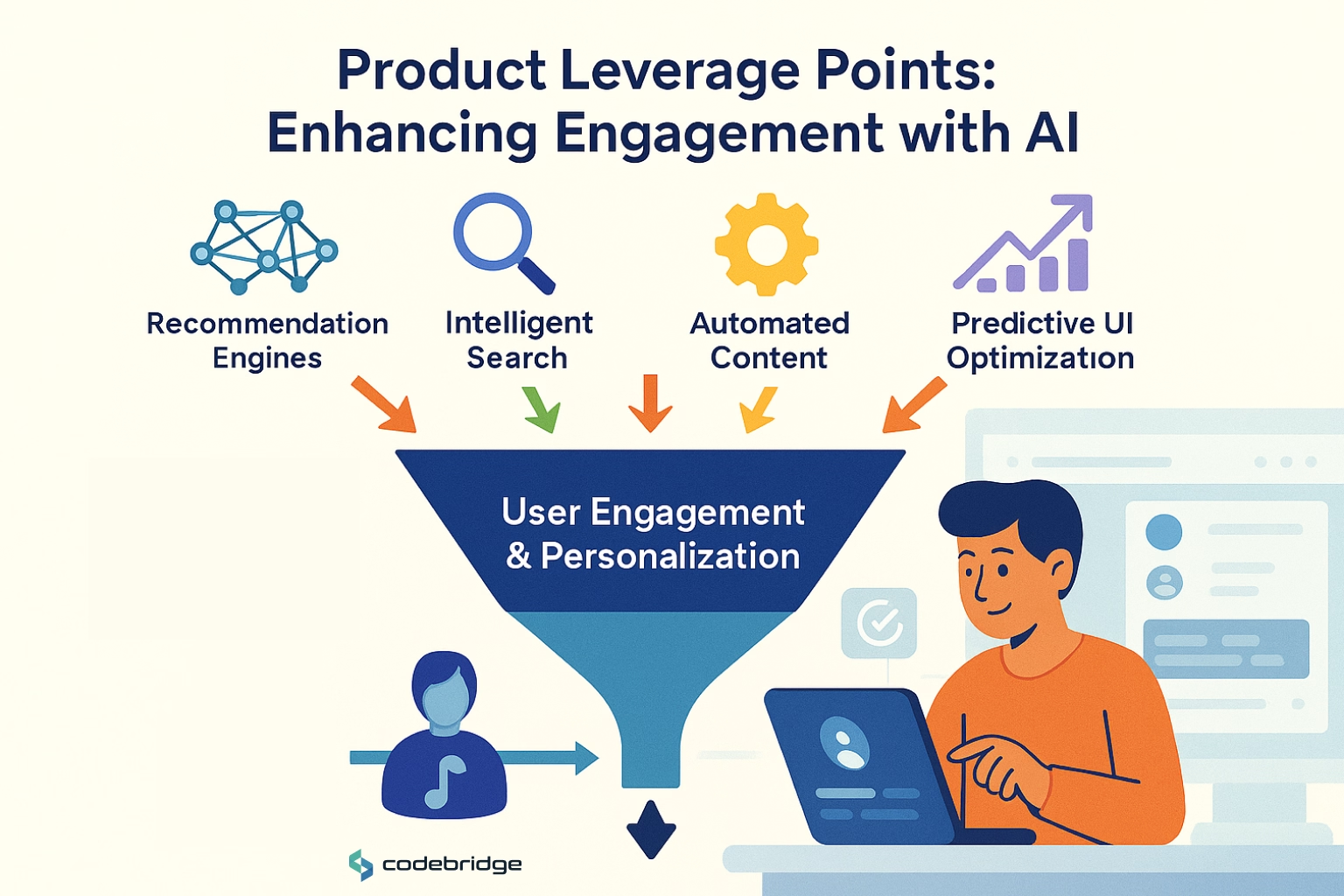

Product Leverage Points:

- Recommendation engines for enhanced user engagement

- Intelligent search and content discovery

- Automated content generation and curation

- Predictive user interface optimization

Engineering Opportunities:

- AI-powered code generation and completion

- Automated test creation and maintenance

- Deployment optimization and rollback intelligence

- Performance monitoring and anomaly detection

Go-to-Market Applications:

- Lead scoring and qualification automation

- Sales forecasting and pipeline management

- Marketing personalization and campaign optimization

- Customer segmentation and targeting

Support Functions:

- Intelligent chatbots and ticket routing

- Knowledge management and documentation

- Automated escalation and priority assignment

- Customer health scoring and intervention

The framework for evaluation remains consistent: assess each opportunity based on technical feasibility, business impact, and implementation complexity. High-impact, low-complexity initiatives become your starting point, while complex transformational projects require longer-term planning and dedicated resources.
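To make the prioritization step concrete, here is a minimal Python sketch for scoring a backlog. Several details are illustrative assumptions, not a standard method:

- the 1-5 scales;
- the `impact * feasibility / complexity` formula;
- the `quick_wins` cutoffs.

Tune all of them to your own portfolio.

```python
from dataclasses import dataclass

@dataclass
class Opportunity:
    name: str
    impact: int       # 1 (low) to 5 (high) expected business impact
    feasibility: int  # 1 (hard) to 5 (easy) technical feasibility
    complexity: int   # 1 (simple) to 5 (complex) implementation effort

def priority_score(opp: Opportunity) -> float:
    # Illustrative formula: reward impact and feasibility, penalize complexity.
    return opp.impact * opp.feasibility / opp.complexity

def quick_wins(opps, min_impact=4, max_complexity=2):
    """Return high-impact, low-complexity opportunities, best first."""
    picks = [o for o in opps if o.impact >= min_impact and o.complexity <= max_complexity]
    return sorted(picks, key=priority_score, reverse=True)

backlog = [
    Opportunity("Support ticket routing", impact=4, feasibility=5, complexity=2),
    Opportunity("Dynamic pricing engine", impact=5, feasibility=2, complexity=5),
    Opportunity("Churn early warning", impact=5, feasibility=3, complexity=2),
]
print([o.name for o in quick_wins(backlog)])
```

The dynamic pricing engine drops out as a longer-term, complex project. The two remaining items become the starting shortlist.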

Objective Tree → Initiative Shortlist → Success Metrics

Begin with your top-level business objectives, whether that's reducing churn, accelerating sales cycles, or improving product engagement. Break these down into specific, measurable outcomes that AI could influence. For example, "reduce churn" might decompose into "identify at-risk accounts 60 days earlier" and "automate retention campaigns based on usage patterns."

Transform each outcome into a concrete AI initiative with defined success criteria. Your churn reduction objective might yield initiatives like "implement behavioral scoring model" (success metric: 20% improvement in early warning accuracy) and "deploy automated email campaigns" (success metric: 15% increase in retention for flagged accounts).

Operating Model: Ownership, Budget, Cadence, Risk Gates

Establish clear ownership across three layers: business owners who define requirements and measure outcomes, technical owners who architect and implement solutions, and operational owners who monitor performance and handle escalations. Budget allocation should follow a 70-20-10 pattern: 70% for proven use cases, 20% for promising experiments, and 10% for exploratory research.

Implement monthly review cycles with quarterly planning horizons. Define explicit risk gates: points where initiatives must demonstrate progress against success metrics or face termination. This disciplined approach prevents AI projects from becoming resource sinks without clear value delivery.
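The 70-20-10 split and a risk gate can both be written as simple rules. In this sketch, the rule that a gate passes once an initiative has closed at least half the gap between its baseline and target metric is a hypothetical choice. The function names are also illustrative.

```python
def split_budget(total: int) -> dict:
    """Apply the 70-20-10 allocation: proven, experimental, exploratory."""
    return {
        "proven": total * 70 / 100,
        "experiments": total * 20 / 100,
        "research": total * 10 / 100,
    }

def passes_risk_gate(baseline: float, current: float, target: float,
                     min_progress: float = 0.5) -> bool:
    """Continue only if the initiative has closed at least `min_progress`
    of the gap between baseline and target. Works whether the metric
    should go up (retention) or down (churn)."""
    gap = target - baseline
    if gap == 0:
        return True
    return (current - baseline) / gap >= min_progress

print(split_budget(100_000))
print(passes_risk_gate(baseline=0.60, current=0.72, target=0.80))  # 60% of the gap closed
```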

Data Foundations You Need Before Shipping AI

AI implementations succeed or fail based on data quality and accessibility. Your comprehensive readiness checklist should verify:

Schema Consistency:

- Standardized naming conventions across all systems

- Unified data types and formats

- Version control for schema changes and migrations

- Documentation of data transformation logic

Data Quality Standards:

- Accuracy above a defined threshold (for example, 95%)

- Completeness validation for critical fields

- Consistency checks across data sources

- Freshness monitoring and staleness alerts

Lineage and Governance:

- Complete tracking for model training datasets

- Audit trails for compliance requirements

- Data source documentation and ownership

- Change impact analysis capabilities

Privacy and Consent:

- Explicit user consent for AI processing where required

- Regional compliance with regulations such as GDPR and CCPA

- Data retention and deletion policies

- Privacy impact assessments for AI use cases

Data schema standardization proves particularly critical for startups with rapid product evolution. Poor data quality multiplies throughout AI pipelines, making upfront investment in data cleanliness essential for reliable model performance.
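Several checklist items (completeness for critical fields, freshness monitoring) can start as a small automated check. This is a sketch under stated assumptions:

- rows arrive as Python dicts;
- timestamps are timezone-aware and live in an `updated_at` field;
- the 95% completeness and 24-hour staleness thresholds are placeholders.

```python
from datetime import datetime, timedelta, timezone

def completeness(rows, field):
    """Share of rows where `field` is present and non-empty."""
    if not rows:
        return 0.0
    filled = sum(1 for r in rows if r.get(field) not in (None, ""))
    return filled / len(rows)

def quality_report(rows, critical_fields, updated_at_field="updated_at",
                   min_completeness=0.95, max_staleness=timedelta(hours=24), now=None):
    """Return a list of human-readable issues; an empty list means the checks passed."""
    now = now or datetime.now(timezone.utc)
    issues = []
    for f in critical_fields:
        score = completeness(rows, f)
        if score < min_completeness:
            issues.append(f"{f}: completeness {score:.0%} below {min_completeness:.0%}")
    # Freshness: the newest record must be within the staleness window.
    timestamps = [r[updated_at_field] for r in rows if r.get(updated_at_field)]
    if not timestamps or now - max(timestamps) > max_staleness:
        issues.append("data is stale")
    return issues
```

In practice, a check like this runs on a schedule and alerts the data owner named in your lineage documentation.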

Feature Store & Event Pipelines; PII Handling and Masking

Modern AI architectures depend on feature stores for consistent data serving across training and inference environments. Implement automated pipelines that compute, store, and serve features while maintaining strict access controls and audit capabilities. Event-driven architectures enable near-real-time feature updates without depending on complex batch processing jobs.

PII handling demands special attention in AI systems. Implement automated detection and masking for sensitive data, maintain separate consent databases, and establish clear data retention policies. Consider tokenization strategies that preserve utility for model training while protecting individual privacy.
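Below is a minimal Python sketch of masking plus tokenization. It comes with stated assumptions:

- The regexes cover only simple email and phone formats. Production systems typically use dedicated PII detection tooling.
- The HMAC key is passed in directly here. In practice it would come from a secrets manager.

Tokenization with a keyed hash is deterministic, so the same user maps to the same token. Per-user features and joins keep working without exposing raw identifiers.

```python
import hashlib
import hmac
import re

# Simplified patterns (assumption): they will miss many real-world formats.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

def mask_pii(text: str) -> str:
    """Replace detected emails and phone numbers with placeholder labels."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)

def tokenize(value: str, key: bytes) -> str:
    """Deterministic pseudonym: same value and key always give the same token."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return "tok_" + digest[:16]

print(mask_pii("Contact jane.doe@example.com or +1 415-555-0100"))
```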

Choosing a Vector Database and Embedding Strategy

Vector database selection significantly impacts AI system performance and scalability. Evaluate options based on these critical factors:

Performance Requirements:

- Query latency targets (typically <100ms for real-time applications)

- Throughput capacity for concurrent requests

- Memory usage and storage efficiency

- Index building and update speeds

Popular Vector Database Options:

- Pinecone: Managed service for simplicity and reliability

- Milvus: Open-source solution for flexibility and customization

- pgvector: PostgreSQL extension for existing database integration

- Weaviate: GraphQL-based with built-in ML capabilities

- Qdrant: High-performance with payload filtering

Embedding Strategy Considerations:

- Text applications: Sentence transformers or LLM embeddings

- Image/audio: Specialized embedding models (CLIP, wav2vec)

- Multimodal: Cross-modal embedding architectures

- Domain-specific: Fine-tuned embeddings for industry terminology

Consider embedding dimensionality trade-offs: higher dimensions can capture more semantic information but increase storage and computation costs. Most applications perform well with 384-768 dimensional embeddings, while specialized use cases may require 1,536 dimensions or more.
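The storage side of the trade-off is simple arithmetic: vectors × dimensions × bytes per value. This sketch assumes float32 values (4 bytes). It counts raw vectors only, so real indexes such as HNSW add overhead on top. The cosine helper shows the similarity measure most vector databases support.

```python
import math

def index_size_gb(num_vectors: int, dims: int, bytes_per_value: int = 4) -> float:
    """Raw storage for the vectors alone, excluding index structures."""
    return num_vectors * dims * bytes_per_value / 1e9

def cosine_similarity(a, b) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

for dims in (384, 768, 1536):
    print(dims, round(index_size_gb(10_000_000, dims), 2), "GB")
```

For 10 million vectors, this prints roughly 15.36, 30.72, and 61.44 GB. Doubling dimensionality doubles raw storage and memory.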

MLOps vs LLMOps: What Changes with Generative AI?

Traditional MLOps focuses on structured data pipelines and deterministic model outputs. LLMOps introduces new complexities around prompt management, non-deterministic responses, and evaluation methodologies for generated content. Your infrastructure must accommodate both paradigms as most startups will deploy hybrid AI systems.

Environment management becomes more complex with LLMOps due to model size and computational requirements. Implement tiered environments with different model configurations: lightweight models for development, full-scale models for staging, and optimized production deployments. Version control must track prompts, model configurations, and fine-tuning datasets alongside traditional code changes.

Evaluation sets for generative AI require human feedback loops and subjective quality assessments. Establish golden datasets with expert annotations, implement automated evaluation metrics where possible, and plan for ongoing human evaluation as model outputs evolve.

Latency/Cost SLOs, Prompt/Version Control, Secret Management

LLM applications demand strict service level objectives around response latency and computational costs. Define p95 latency targets (typically 1-3 seconds for interactive applications) and cost-per-request budgets that align with your unit economics. Implement circuit breakers and fallback mechanisms to maintain service availability during high-demand periods.
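A circuit breaker with fallback can be sketched in a few lines. Assumptions to note:

- The primary and fallback functions are stand-ins for whatever you use. A typical pairing is a large-model API call as primary and a smaller model or cached answer as fallback.
- The failure count and cool-down values are placeholders.
- The `clock` parameter exists so the breaker can be tested without waiting.

```python
import time

class CircuitBreaker:
    """Open after `max_failures` consecutive errors; while open, serve the
    fallback until `reset_after` seconds pass, then allow a trial call."""

    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at = None

    def call(self, primary, fallback, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                return fallback(*args, **kwargs)  # open: skip the primary entirely
            self.opened_at = None  # half-open: let one trial call through
        try:
            result = primary(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()  # (re)open the circuit
            return fallback(*args, **kwargs)
        self.failures = 0  # success closes the circuit
        return result
```

A failed trial call reopens the circuit immediately, because the failure count is only reset on success.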

Prompt engineering introduces new version control challenges. Treat prompts as code with proper branching, review processes, and deployment pipelines. Maintain prompt libraries with A/B testing capabilities and rollback mechanisms for poorly performing variations.
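The rollback behavior described above can be illustrated with a small in-memory registry. This is a hypothetical stand-in, not a specific tool's API. In a real setup, the templates would live in version control and go through review like any other code. This sketch only shows publish, render, and rollback semantics.

```python
from dataclasses import dataclass, field

@dataclass
class PromptRegistry:
    """Hypothetical in-memory prompt store with versioning and rollback."""
    versions: dict = field(default_factory=dict)  # prompt name -> list of templates
    active: dict = field(default_factory=dict)    # prompt name -> active version index

    def publish(self, name: str, template: str) -> int:
        """Add a new version and make it active; return its index."""
        self.versions.setdefault(name, []).append(template)
        self.active[name] = len(self.versions[name]) - 1
        return self.active[name]

    def rollback(self, name: str) -> int:
        """Reactivate the previous version of a poorly performing prompt."""
        if self.active.get(name, 0) == 0:
            raise ValueError(f"no earlier version of {name!r} to roll back to")
        self.active[name] -= 1
        return self.active[name]

    def render(self, name: str, **values) -> str:
        return self.versions[name][self.active[name]].format(**values)

reg = PromptRegistry()
reg.publish("summarize", "Summarize: {text}")
reg.publish("summarize", "Summarize in one sentence: {text}")
reg.rollback("summarize")
print(reg.render("summarize", text="Quarterly churn fell 2 points."))
```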

Secret management becomes critical when integrating multiple AI services and APIs. Implement automated key rotation, separate secrets for different environments, and monitoring for unusual API usage patterns that might indicate security breaches.

Retrieval Augmented Generation (RAG) and Grounding Patterns

Retrieval Augmented Generation (RAG) patterns enable AI systems to access current information and domain-specific knowledge without expensive model retraining. Implement RAG architectures with document chunking strategies, semantic search capabilities, and relevance filtering to ensure generated responses remain grounded in factual information.

RAG system performance depends heavily on retrieval quality and context assembly logic. Optimize chunk sizes for your content types (typically 200-500 tokens for text), implement hybrid search combining semantic and keyword approaches, and establish relevance thresholds to filter low-quality context.
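The sketch below shows overlapping chunking, hybrid scoring, and a relevance threshold together. Assumptions:

- Whitespace-separated words stand in for tokens. A real pipeline would use the embedding model's tokenizer.
- Embeddings are precomputed by whatever model you choose.
- Keyword relevance is a crude term-overlap score rather than BM25.
- The blend weight `alpha` and `min_score` are placeholders to tune.

```python
import math

def chunk_words(text: str, size: int = 300, overlap: int = 50) -> list:
    """Split text into windows of `size` words, each overlapping the previous by `overlap`."""
    if overlap >= size:
        raise ValueError("overlap must be smaller than size")
    words = text.split()
    if not words:
        return []
    step = size - overlap
    return [" ".join(words[i:i + size]) for i in range(0, max(len(words) - overlap, 1), step)]

def keyword_score(query: str, chunk: str) -> float:
    """Fraction of query terms that appear verbatim in the chunk."""
    terms = set(query.lower().split())
    words = set(chunk.lower().split())
    return len(terms & words) / len(terms) if terms else 0.0

def cosine(a, b) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na, nb = math.sqrt(sum(x * x for x in a)), math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

def hybrid_search(query, query_vec, chunks, alpha=0.5, min_score=0.3, top_k=3):
    """chunks: list of (text, embedding) pairs. Blend semantic and keyword
    relevance, drop anything below `min_score`, return the best `top_k` texts."""
    scored = []
    for text, vec in chunks:
        score = alpha * cosine(query_vec, vec) + (1 - alpha) * keyword_score(query, text)
        if score >= min_score:
            scored.append((score, text))
    scored.sort(reverse=True)
    return [text for _, text in scored[:top_k]]

docs = [
    ("refund policy for annual plans", [1.0, 0.0]),
    ("office holiday schedule", [0.0, 1.0]),
    ("how refunds are processed", [0.8, 0.2]),
]
print(hybrid_search("refund policy", [1.0, 0.0], docs))
```

The third document has no exact keyword match ("refunds" vs. "refund"). It still passes the threshold on semantic similarity, which is the point of combining both signals.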

AI in Software Development: Engineering Use Cases

AI code generation transforms development velocity when implemented with appropriate guardrails. Modern tools offer significant productivity improvements:

Leading Code Generation Tools:

- GitHub Copilot: Integrated IDE experience with broad language support

- Amazon CodeWhisperer (now part of Amazon Q Developer): AWS-optimized with security scanning

- Tabnine: Privacy-focused with on-premises deployment options

- Replit Ghostwriter: Web-based collaborative coding assistance

- OpenAI Codex: API-based integration for custom workflows

Implementation Best Practices:

- Establish code review policies for AI-generated content

- Integrate automated security scanning in CI/CD pipelines

- Provide developer training on AI-assisted workflows

- Monitor code quality metrics and developer satisfaction

- Maintain intellectual property and licensing compliance

AI test generation offers similar productivity gains with a lower risk profile, since generated tests check production code rather than ship as part of it.

Test Generation Capabilities:

- Unit test creation based on function signatures and logic

- Integration test scenarios from API specifications

- Edge case identification and boundary condition testing

- Regression test maintenance for code changes

- Performance test script generation for load scenarios

Focus on unit test generation initially, then expand to integration and end-to-end testing as confidence grows. Establish clear policies around AI-generated code ownership and maintain human oversight for critical system components.

AI Code Review & Security Scanning with Guardrails

AI code review systems can identify potential bugs, security vulnerabilities, and code quality issues faster than traditional manual reviews. Tools like DeepCode (now Snyk Code), Amazon CodeGuru, and open-source alternatives provide automated feedback on pull requests while fitting into existing developer workflows.

Security scanning becomes more sophisticated with AI-powered static analysis tools that understand code context and identify subtle vulnerability patterns. Implement these tools as mandatory gates in your CI/CD pipeline, but maintain human security review for high-risk changes.

Establish feedback loops between AI recommendations and developer acceptance rates. This data improves AI system accuracy over time and helps refine organizational coding standards based on actual developer preferences and practices.

DevEx: Flaky Test Triage, Incident Summarization, PR Bots

Developer experience improvements through AI can significantly impact team productivity and satisfaction:

Flaky Test Management:

- Automated detection of inconsistent test failures

- Historical pattern analysis for root cause identification

- Smart retry mechanisms with exponential backoff

- Priority ranking based on impact and frequency

- Integration with CI/CD systems for automatic handling
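Flaky test detection and smart retries can be sketched from CI history. The sketch uses one common heuristic, which is an assumption here rather than the only definition: a test is flagged as flaky when it both passed and failed on the same commit, since the outcome changed without any code change. The input shape `(test_name, commit_sha, passed)` is hypothetical. The `sleep` parameter is injectable so the backoff can be tested.

```python
import time
from collections import defaultdict

def find_flaky_tests(runs):
    """runs: iterable of (test_name, commit_sha, passed) tuples.
    Returns flaky tests ranked by how many commits showed a pass/fail flip."""
    outcomes = defaultdict(set)
    for test, commit, passed in runs:
        outcomes[(test, commit)].add(passed)
    flips = defaultdict(int)
    for (test, _commit), seen in outcomes.items():
        if seen == {True, False}:
            flips[test] += 1
    # Most frequently flipping tests first; ties broken alphabetically.
    return sorted(flips, key=lambda t: (-flips[t], t))

def run_with_retries(test_fn, attempts=3, base_delay=0.5, sleep=time.sleep):
    """Retry a test callable, doubling the wait after each failed attempt."""
    for attempt in range(attempts):
        if test_fn():
            return True
        if attempt < attempts - 1:
            sleep(base_delay * 2 ** attempt)
    return False
```

A consistently failing test is not flagged, because that signals a real bug rather than flakiness.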

Incident Response Automation:

- Real-time log aggregation and pattern recognition

- Automatic severity classification and escalation

- Stakeholder notification based on impact assessment

- Post-incident report generation with timeline reconstruction

- Knowledge base updates from resolution patterns

Pull Request Optimization:

- Automated description generation from code changes

- Intelligent reviewer suggestion based on expertise mapping

- Review complexity estimation and timeline prediction

- Status tracking and completion monitoring

- Code quality metrics and improvement recommendations

These systems learn from team patterns over time, becoming more accurate at estimating review complexity and identifying appropriate reviewers while reducing administrative overhead for development teams.
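Reviewer suggestion based on an expertise map can start very simply. This sketch ranks people by how often they have reviewed the files a pull request touches. The `(reviewer, file_path)` history format is a hypothetical export from your Git hosting tool. A learned system would add signals such as recency and current review load.

```python
from collections import Counter

def suggest_reviewers(changed_files, history, author, k=2):
    """history: list of (reviewer, file_path) pairs from past merged PRs.
    Rank reviewers (excluding the PR author) by familiarity with the changed files."""
    changed = set(changed_files)
    counts = Counter(r for r, path in history if path in changed and r != author)
    return [r for r, _ in counts.most_common(k)]

history = [
    ("ana", "api/auth.py"), ("ben", "api/auth.py"), ("ana", "api/users.py"),
    ("cy", "web/app.ts"), ("ben", "api/users.py"), ("ana", "api/auth.py"),
    ("dan", "api/users.py"),
]
print(suggest_reviewers(["api/auth.py", "api/users.py"], history, author="ben"))
```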

Product & Growth Use Cases with Fast Payback

AI personalization drives user engagement and revenue growth through tailored content, feature recommendations, and user experience optimization. Implement recommendation systems that learn from user behavior, preferences, and contextual signals to deliver increasingly relevant experiences over time.

Start with collaborative filtering for content recommendations, then expand to hybrid approaches combining collaborative, content-based, and contextual signals. Measure success through engagement metrics like session duration, feature adoption rates, and conversion improvements.
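Item-to-item collaborative filtering, one common starting point, fits in a short sketch. It assumes implicit feedback: each user maps to the set of features or items they used. Item similarity is cosine over co-occurrence. A library or your data warehouse would replace this at scale. The example data is made up.

```python
import math
from collections import defaultdict

def item_similarities(interactions):
    """interactions: dict of user -> set of item ids.
    Returns sims[a][b] = cosine similarity of the user sets for items a and b."""
    item_users = defaultdict(set)
    for user, items in interactions.items():
        for item in items:
            item_users[item].add(user)
    sims = defaultdict(dict)
    items = list(item_users)
    for i, a in enumerate(items):
        for b in items[i + 1:]:
            overlap = len(item_users[a] & item_users[b])
            if overlap:
                s = overlap / math.sqrt(len(item_users[a]) * len(item_users[b]))
                sims[a][b] = sims[b][a] = s
    return sims

def recommend(user, interactions, sims, k=3):
    """Score unseen items by summed similarity to what the user already uses."""
    seen = interactions[user]
    scores = defaultdict(float)
    for item in seen:
        for other, s in sims.get(item, {}).items():
            if other not in seen:
                scores[other] += s
    return sorted(scores, key=lambda i: (-scores[i], i))[:k]

usage = {
    "u1": {"dashboards", "alerts"},
    "u2": {"dashboards", "alerts", "exports"},
    "u3": {"dashboards", "exports"},
    "u4": {"alerts", "sso"},
}
print(recommend("u1", usage, item_similarities(usage)))
```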

Churn Prediction & Retention Playbooks

Churn prediction models identify at-risk customers weeks or months before they actually leave, providing time for proactive retention efforts. Build models using behavioral signals, usage patterns, support interactions, and billing history to generate risk scores with actionable insights.

Implement automated retention playbooks triggered by churn risk scores. These might include personalized email campaigns, product recommendations, support outreach, or special offers tailored to specific churn risk factors. Track effectiveness through cohort retention analysis and lifetime value improvements.
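A minimal sketch of risk scoring feeding a playbook trigger follows. The feature names, coefficients, and thresholds are all hypothetical. In practice, the weights come from a model trained on your own historical churn labels, and the thresholds come from your team's outreach capacity.

```python
import math

# Hypothetical logistic-regression coefficients (assumption, not trained values).
WEIGHTS = {"days_since_login": 0.08, "seats_used_ratio": -2.5, "open_tickets": 0.4}
BIAS = -1.0

def churn_risk(account: dict) -> float:
    """Logistic score in [0, 1] from behavioral and support signals."""
    z = BIAS + sum(w * account[f] for f, w in WEIGHTS.items())
    return 1 / (1 + math.exp(-z))

def pick_playbook(account: dict) -> str:
    """Map risk to an action: human outreach for the riskiest accounts."""
    risk = churn_risk(account)
    if risk >= 0.7:
        return "csm_outreach"
    if risk >= 0.4:
        return "usage_tips_email"
    return "none"

print(pick_playbook({"days_since_login": 30, "seats_used_ratio": 0.2, "open_tickets": 3}))
```

Logging which playbook each account received makes the cohort retention analysis described above straightforward.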

AI Customer Support Chatbot & Deflection Metrics

AI customer support chatbots reduce support costs while improving response times for common inquiries. Modern LLM-powered chatbots handle complex queries with natural language understanding and can escalate to human agents when necessary.

Measure chatbot effectiveness through deflection rates, resolution accuracy, and customer satisfaction scores. Start with FAQ automation and knowledge base queries, then expand to more complex support scenarios as accuracy improves. Maintain clear escalation paths and human oversight for sensitive issues.
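These metrics are straightforward to compute from conversation logs. The field names below are assumptions about your log schema. The sketch reports deflection and bot resolution separately because a conversation that was never escalated is not necessarily resolved. A user may simply have given up.

```python
def support_metrics(conversations):
    """conversations: list of dicts with 'escalated' (bool), 'resolved' (bool),
    and an optional 'csat' score (1-5)."""
    total = len(conversations)
    bot_only = [c for c in conversations if not c["escalated"]]
    deflection = len(bot_only) / total if total else 0.0
    # Of the conversations the bot kept, how many were actually resolved?
    resolution = sum(c["resolved"] for c in bot_only) / len(bot_only) if bot_only else 0.0
    scores = [c["csat"] for c in conversations if c.get("csat") is not None]
    avg_csat = sum(scores) / len(scores) if scores else None
    return {"deflection_rate": deflection, "bot_resolution_rate": resolution, "avg_csat": avg_csat}
```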

Pricing Optimization AI

Pricing optimization AI enables dynamic pricing strategies that respond to market conditions, customer segments, and competitive pressures in real time. These systems can increase revenue by 5-15% through better price elasticity understanding and personalized pricing strategies.

Implement A/B testing frameworks for pricing experiments and establish clear boundaries around pricing automation. Consider customer perception and competitive positioning when designing pricing algorithms, and maintain human oversight for significant price changes.
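One way to set "clear boundaries around pricing automation" is a guardrail between the model and the price list. In this sketch, the 5% auto-apply limit is a hypothetical policy. Larger proposed moves are clamped to the limit and flagged for human review instead of being applied as-is.

```python
def guarded_price(current: float, proposed: float, max_auto_change: float = 0.05):
    """Return (price_to_apply, needs_human_review)."""
    change = (proposed - current) / current
    if abs(change) <= max_auto_change:
        return round(proposed, 2), False  # small move: apply automatically
    # Large move: cap it at the allowed band and escalate to a person.
    factor = (1 + max_auto_change) if change > 0 else (1 - max_auto_change)
    return round(current * factor, 2), True

print(guarded_price(100.0, 103.0))
print(guarded_price(100.0, 120.0))
```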

How to Implement AI: 90/180-Day Roadmap

0–30 Days: Discovery, Data Audit, Evaluation Baselines

Your first 30 days should focus on comprehensive discovery and baseline establishment:

Stakeholder Discovery Activities:

- Cross-functional interviews with product, engineering, and business teams

- Pain point identification and opportunity mapping sessions

- Current workflow documentation and inefficiency analysis

- Resource assessment and capacity planning discussions

- Success criteria definition and measurement framework

Data Infrastructure Assessment:

- Quality audits assessing accuracy, completeness, and consistency

- Accessibility evaluation for AI processing requirements

- Compliance review for regulatory requirements (GDPR, CCPA)

- Integration complexity analysis for existing systems

- Storage and computational capacity evaluation

Baseline Metric Establishment:

- Customer support response time and resolution rates

- Code review cycle lengths and bottleneck identification

- Current churn rates and customer lifetime values

- Development velocity and deployment frequency metrics

- User engagement and product adoption baselines

These baselines become essential for measuring AI impact and calculating ROI throughout your implementation journey. Create detailed technical assessments of your current infrastructure's AI readiness, including computational capacity, data pipeline maturity, and integration complexity for planned AI services.

31–90 Days: Pilot(s), RAG POC, Policy & Guardrails

Days 31-90 focus on practical implementation and policy establishment:

Pilot Project Launch:

- 1-2 focused initiatives with clearly defined success criteria

- Limited scope to demonstrate technical feasibility quickly

- Measurable business value delivery within 90-day timeframe

- Risk mitigation through controlled user exposure

- Regular checkpoint reviews and course correction capabilities

RAG Implementation Foundation:

- Document processing pipeline development and testing

- Embedding strategy validation with sample content

- Retrieval quality assessment and optimization

- Integration testing with existing knowledge systems

- Performance benchmarking for latency and accuracy

Governance Framework Development:

- AI usage policies covering appropriate applications

- Data handling procedures and privacy protection measures

- Model deployment approval processes and checkpoints

- Risk management procedures and escalation protocols

- Training programs for teams using AI systems

Essential Guardrail Systems:

- Content filtering for inappropriate or harmful outputs

- PII detection and automatic redaction capabilities

- Output validation and quality assurance processes

- User feedback collection and continuous improvement loops

- Security monitoring and threat detection protocols

These foundational elements support multiple AI use cases including customer support, internal knowledge management, and content generation while maintaining security and compliance standards.

91–180 Days: Productionization, SLAs, Monitoring, Scaling

The final 90 days focus on production deployment and scaling successful pilots. Implement comprehensive monitoring systems tracking both business metrics and technical performance indicators. Establish service level agreements for AI system availability, latency, and accuracy.

Build automated deployment pipelines for AI models and establish version control processes for model updates. Implement feedback loops capturing user interactions and model performance data to support continuous improvement efforts.

Scale successful AI implementations across additional use cases and user segments while maintaining quality and performance standards established during pilot phases.

Risk, Security & Compliance

AI systems introduce new attack vectors requiring specific security measures:

Data Leakage Prevention:

- Protection against training-data extraction through techniques such as differential privacy

- Prompt engineering safeguards against information disclosure

- Inference-time data exposure monitoring and prevention

- Data minimization principles in model training and deployment

- Output filtering to prevent sensitive information leakage

Prompt Injection Attack Mitigation:

- Input validation and sanitization for user prompts

- Template restrictions limiting system prompt modification

- Output monitoring for policy violations and unexpected responses

- Rate limiting and anomaly detection for suspicious patterns

- Sandboxing for AI model execution environments

Supply-Chain Security:

- Vendor security assessments and due diligence processes

- Pre-trained model provenance verification and validation

- Third-party AI tool security reviews and approval workflows

- Network segmentation for AI service connections

- Continuous monitoring for unusual system behavior patterns

Implement these security layers as foundational elements rather than afterthoughts, ensuring protection throughout your AI system lifecycle from development through production deployment.
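The rate-limiting item under prompt injection mitigation is commonly implemented as a per-user token bucket. This is a minimal sketch. Assumptions:

- The capacity and refill rate are placeholders.
- Buckets live in process memory. A multi-instance deployment would typically keep them in a shared store.
- The `clock` parameter allows deterministic testing.

```python
import time
from collections import defaultdict

class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, capacity: int, rate: float, clock=time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = float(capacity)
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill based on elapsed time, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False

# One bucket per user (hypothetical limits: bursts of 20, then one request every 2 seconds).
buckets = defaultdict(lambda: TokenBucket(capacity=20, rate=0.5))

def allow_request(user_id: str) -> bool:
    return buckets[user_id].allow()
```

Rejected requests are also a useful anomaly signal to feed into the monitoring described above.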

PII Redaction, RBAC, Audit Trails, Model Isolation

PII redaction systems must operate reliably across all AI processing stages. Implement automated detection for various PII types, including names, addresses, financial information, and identifiers. Consider tokenization strategies that preserve data utility while protecting individual privacy.

Role-based access control (RBAC) for AI systems requires granular permissions covering model access, training data, and inference capabilities. Implement least-privilege principles and regular access reviews to maintain appropriate security boundaries.

Comprehensive audit trails should track all AI system interactions, including model training events, inference requests, and administrative actions. These logs support compliance requirements and incident investigation while enabling usage pattern analysis.
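Least-privilege RBAC and audit logging can share one choke point. Every authorization decision, including denials, is written to the trail. The role names, permission strings, and the in-memory list standing in for an append-only audit store are hypothetical.

```python
import json
from datetime import datetime, timezone

# Hypothetical role -> permission mapping: each role gets only what it needs.
ROLE_PERMISSIONS = {
    "analyst": {"model:infer"},
    "ml_engineer": {"model:infer", "model:deploy", "data:read_training"},
    "admin": {"model:infer", "model:deploy", "data:read_training", "rbac:manage"},
}

AUDIT_LOG = []  # stand-in for an append-only, tamper-evident log store

def authorize(user: str, role: str, action: str, resource: str) -> bool:
    """Check the permission and record the decision, allowed or not."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    AUDIT_LOG.append(json.dumps({
        "ts": datetime.now(timezone.utc).isoformat(),
        "user": user, "role": role, "action": action,
        "resource": resource, "allowed": allowed,
    }))
    return allowed

authorize("dana", "analyst", "model:deploy", "churn-model-v3")    # denied, still logged
authorize("li", "ml_engineer", "model:deploy", "churn-model-v3")  # allowed
```

Logging denials as well as grants is what makes these records useful for incident investigation.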

Policy & Governance: Acceptable Use, Human-in-the-Loop

Establish clear acceptable use policies for AI systems covering appropriate applications, prohibited use cases, and escalation procedures for edge cases. These policies should address both technical capabilities and ethical considerations around AI deployment.

Human-in-the-loop processes provide safety nets for critical AI decisions while supporting continuous improvement through human feedback. Design these processes to minimize workflow disruption while maintaining quality oversight for high-risk scenarios.
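A common human-in-the-loop pattern routes by confidence and risk, and captures reviewer corrections as feedback. The 0.9 threshold and the `high_risk` flag are assumptions. Each team would define what counts as high risk for its own use case.

```python
def route_output(confidence: float, high_risk: bool, auto_threshold: float = 0.9) -> str:
    """Auto-apply confident, low-risk outputs; send everything else to a person."""
    if high_risk or confidence < auto_threshold:
        return "human_review"
    return "auto_apply"

def record_review(feedback: list, model_output: str, reviewer_output: str) -> None:
    """Store the reviewer's final answer next to the model's, for later evaluation or retraining."""
    feedback.append({"model": model_output, "human": reviewer_output,
                     "agreed": model_output == reviewer_output})

def agreement_rate(feedback: list) -> float:
    return sum(f["agreed"] for f in feedback) / len(feedback) if feedback else 0.0
```

A rising agreement rate is one signal that the auto-apply threshold could be relaxed for that workflow.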

ROI, Metrics & Model Evaluation

AI ROI measurement requires tracking both business impact and technical performance metrics. Primary business KPIs typically include revenue growth from personalization, margin improvement through automation, cycle time reduction in development workflows, and cost savings from support deflection.

Establish clear attribution methods linking AI capabilities to business outcomes. This might involve cohort analysis comparing AI-enabled versus traditional workflows, A/B testing of AI features against baseline experiences, or time-series analysis showing performance changes following AI deployment.
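For the A/B route, a two-sided two-proportion z-test is one standard way to check whether an AI variant's conversion lift is larger than noise. This sketch uses only the standard library. It assumes independent users and large enough samples for the normal approximation.

```python
import math

def ab_lift(conv_a: int, n_a: int, conv_b: int, n_b: int) -> dict:
    """Compare control (A) with the AI variant (B) using a two-sided two-proportion z-test."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return {"relative_lift": (p_b - p_a) / p_a, "z": z, "p_value": p_value}

print(ab_lift(200, 2000, 260, 2000))  # 10% vs 13% conversion
```

Here the 30% relative lift comes with z near 2.97 and p near 0.003, which would usually count as significant at the 5% level.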

Calculate lifetime value improvements from AI-enhanced customer experiences, operational cost reductions from automated workflows, and productivity gains from AI-assisted development processes.

Model Metrics: Accuracy, Hallucination Rate, Latency, Cost

Technical metrics provide operational insights essential for maintaining AI system performance. Track the accuracy metrics appropriate to each use case, such as classification accuracy, retrieval precision/recall, or generation quality scores, depending on the application type.

Hallucination monitoring becomes critical for generative AI applications. Implement automated factuality checking where possible and maintain human evaluation processes for outputs requiring high accuracy. Track hallucination rates over time to identify model drift or degradation issues.

Monitor latency and cost metrics to ensure AI systems remain within operational budgets and performance requirements. These metrics inform scaling decisions and optimization priorities as AI usage grows.
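These three operational metrics can be rolled up from per-request logs. The log fields are assumptions. The `hallucinated` flag would come from automated factuality checks or human review. The percentile uses the nearest-rank method.

```python
import math

def p95(values):
    """Nearest-rank 95th percentile."""
    ordered = sorted(values)
    rank = math.ceil(0.95 * len(ordered))
    return ordered[rank - 1]

def model_health(requests):
    """requests: list of dicts with 'latency_ms', 'cost_usd', and 'hallucinated' (bool)."""
    n = len(requests)
    return {
        "p95_latency_ms": p95([r["latency_ms"] for r in requests]),
        "cost_per_request_usd": sum(r["cost_usd"] for r in requests) / n,
        "hallucination_rate": sum(r["hallucinated"] for r in requests) / n,
    }
```

Comparing these numbers against your SLO targets each week (for example, a p95 of 1-3 seconds) gives an early signal of drift or cost creep.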

Continuous Evaluation: Golden Sets, A/B, Offline + Online

Implement multi-layered evaluation systems combining offline testing with online performance monitoring. Maintain golden datasets with expert annotations for consistent quality assessment across model updates and configuration changes.

A/B testing frameworks enable safe deployment of AI improvements while measuring real-world impact on user behavior and business metrics. Design tests with appropriate statistical power and monitor for both intended improvements and unintended consequences.
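"Appropriate statistical power" can be estimated before launch with the standard sample-size formula for comparing two proportions:

n = (z₁₋α/₂ + z₁₋β)² × [p₁(1 − p₁) + p₂(1 − p₂)] / (p₁ − p₂)²

This sketch computes it with Python's standard library. The defaults (α = 0.05, power = 0.8) are common conventions, not requirements.

```python
import math
from statistics import NormalDist

def sample_size_per_arm(baseline: float, expected: float,
                        alpha: float = 0.05, power: float = 0.8) -> int:
    """Users needed in each arm to detect baseline -> expected with the given power."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)
    variance = baseline * (1 - baseline) + expected * (1 - expected)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (baseline - expected) ** 2)

print(sample_size_per_arm(0.10, 0.12))  # roughly 3,800+ users per arm
```

Small expected effects need large samples. That is a practical reason to test AI features where the expected lift is meaningful.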

Combine offline evaluation using historical data with online monitoring of live system performance. This hybrid approach catches issues that might not appear in offline testing while maintaining confidence in model quality before deployment.

Cost, Timeline & Team

AI implementation requires diverse expertise across multiple disciplines. Product managers define requirements and success metrics while coordinating between technical and business stakeholders. Data engineers build and maintain pipelines supporting AI workflows with appropriate quality and governance controls.

ML/LLM engineers develop and deploy models while platform engineers provide infrastructure, monitoring, and operational support. Security specialists ensure AI systems meet compliance requirements and protect against emerging AI-specific threats.

Plan for skill development across existing team members rather than hiring specialists for every role. Many AI capabilities can be implemented by existing developers with appropriate training and tooling support.

Budget Ranges for POC → Production (Compute, Tooling, People)

AI project budgets typically span three phases with different cost profiles. Proof-of-concept phases require $10-50K investments covering initial tooling, limited compute resources, and consultant or contractor support for specialized expertise.

Pilot implementations range from $50-200K including production-ready infrastructure, monitoring systems, and dedicated team capacity. Full production deployments can cost $200K-1M+ annually, depending on scale, computational requirements, and team size.

Consider ongoing operational costs, including compute resources, API fees for third-party AI services, tooling subscriptions, and team expansion as AI capabilities mature and scale across your organization.
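API fees are the easiest ongoing cost to model up front. The traffic, token counts, and per-million-token prices in this example are placeholders, not any provider's actual rates. Substitute your provider's current price sheet and your own measured token usage.

```python
def monthly_llm_cost(requests_per_day: int, input_tokens: int, output_tokens: int,
                     usd_per_1m_input: float, usd_per_1m_output: float, days: int = 30) -> float:
    """Estimate monthly API spend from average tokens per request and per-token prices."""
    per_request = (input_tokens * usd_per_1m_input + output_tokens * usd_per_1m_output) / 1_000_000
    return requests_per_day * days * per_request

# Hypothetical: 5,000 requests/day, 1,500 input + 300 output tokens, $0.50 / $1.50 per 1M tokens.
print(round(monthly_llm_cost(5_000, 1_500, 300, 0.50, 1.50), 2))
```

Dividing the result by active users gives a per-user AI cost that can be checked against your unit economics.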

Should startups build custom AI models or use existing APIs?

Most startups should start with existing APIs and services to minimize complexity and accelerate time-to-value. Consider custom models only when existing solutions cannot meet specific requirements around performance, cost, security, or competitive differentiation. Custom development makes sense as a second-phase initiative after proving value with commercial solutions.

Transform your startup's growth trajectory through strategic AI implementation. Book a free consultation to discuss your AI roadmap and accelerate your competitive advantage.

FAQ

Why should startups implement AI in their software strategy?

Startups should implement AI to accelerate growth by automating repetitive tasks, improving decision-making, and delivering personalized user experiences. AI technologies like machine learning and predictive analytics help startups innovate faster, optimize development cycles, and gain a competitive edge in their markets.

How can startups start implementing AI in their products?

To implement AI in startup software, begin with these steps:

- Identify key pain points that AI can solve.

- Collect and clean data relevant to your product.

- Choose an AI model or API suited to your use case (e.g., NLP, recommendation systems).

- Test with small prototypes, gather user feedback, and iterate.

This structured approach ensures that AI adoption supports measurable growth.

What are the main benefits of implementing AI for startup growth?

The biggest benefits of implementing AI in startups include faster development, smarter automation, better customer insights, and cost savings. AI helps startups scale operations without adding headcount, reduce errors in manual workflows, and personalize experiences that drive user retention and revenue growth.

What challenges do startups face when implementing AI?

Startups often face challenges such as limited data quality, lack of in-house expertise, and integration complexity when they try to implement AI. Overcoming these requires clear goals, access to the right talent or AI partners, and using scalable AI tools that fit the startup’s technical maturity and budget.

Which AI tools are best for startups aiming to boost software growth?

The best AI tools for startups depend on your use case. For product analytics, tools like Mixpanel AI or Amplitude are effective. For automation and code generation, OpenAI’s APIs, GitHub Copilot, or Hugging Face models are popular. Cloud AI services from AWS, Google Cloud, or Azure AI offer scalable and cost-efficient infrastructure for startups.

How does implementing AI impact a startup’s ROI and long-term growth?

When startups strategically implement AI, they often see a strong return on investment through higher productivity, improved customer engagement, and faster innovation. Over time, AI-driven insights help refine product roadmaps, reduce churn, and scale growth more sustainably compared to traditional development approaches.
