Choosing the right best technology stack for startups determines whether your company scales smoothly or struggles with technical debt, hiring challenges, and architectural limitations. The technology landscape offers more powerful tools than ever, but the abundance of choices makes strategic selection more critical than ever.

For expert guidance tailored to your specific requirements, explore our mobile app development services.

This comprehensive guide cuts through the hype to help you build a startup tech stack that grows with your business.

How to Think About Your Startup Tech Stack (First Principles)

The foundation of a smart technology stack for startup decisions starts with reverse engineering from business outcomes. Are you racing to market with an MVP to secure funding? Building a regulated fintech product? Creating a global consumer app? Each scenario demands different architectural trade-offs.

%20(2).avif)

- Time constraints often favor familiar technologies over optimal ones. A team fluent in React and Node.js will ship faster than one learning Go and Vue, regardless of theoretical performance advantages. Budget realities compound this; startups can't afford the luxury of extended learning curves when the runway is limited.

- Hiring market dynamics significantly influence stack viability. React developers are abundant and affordable; specialized Rust engineers cost 40-60% more and require longer searches. Factor in your location, remote work policies, and competition for talent when making technology choices. Compliance requirements can eliminate entire categories of solutions. HIPAA-regulated startups may need specific cloud configurations, data residency controls, or audit-friendly architectures that rule out certain managed services.

MVP vs Scale Requirements; Avoid Premature Complexity

The biggest startup tech stack mistake is optimizing for problems you don't yet have. Instagram famously ran on Django and PostgreSQL until they had millions of users; a premature microservices architecture would have killed their velocity.

- MVP-first principles. Suggest choosing boring, well-documented technologies with large communities. Boring doesn't mean outdated; it means proven, stable, and predictable. Your first technical priority is validating product-market fit, not showcasing architectural sophistication.

- Scale transition planning. Matters more than initial scale readiness. Design modular systems that can evolve from monoliths to microservices, from single databases to sharded architectures, from manual deployments to sophisticated CI/CD pipelines. The key is choosing technologies that support this evolution rather than forcing expensive rewrites.

Architecture Choices: Monolith vs Microservices, Serverless vs Containers

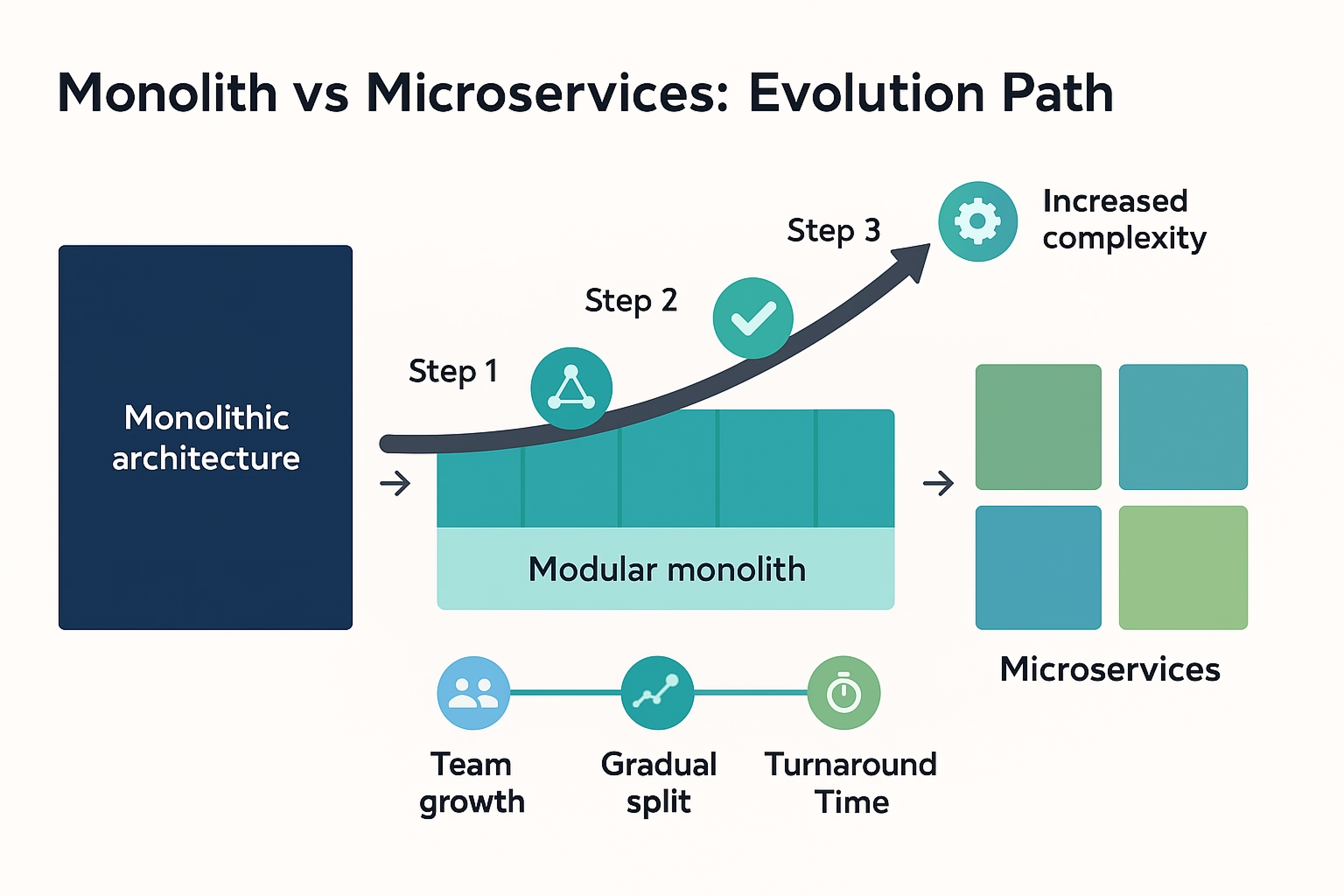

Monolith vs microservices isn't a permanent choice; it's an evolution path. Start monolithic for speed and simplicity, then extract services as team size and complexity demand it. Netflix, Amazon, and Shopify all followed this pattern rather than beginning with distributed architectures.

Modern monoliths using modular design patterns can support substantial scale. Shopify's core still runs as a Rails monolith handling Black Friday traffic while selectively extracting performance-critical services.

Serverless vs containers represents a build-versus-control trade-off. Serverless platforms like Vercel, AWS Lambda, or Google Cloud Functions excel for event-driven workloads, eliminate infrastructure management, and provide automatic scaling. However, they introduce vendor lock-in, cold start latency, and cost unpredictability at high scale.

Containers offer more control, predictable costs, and portability across providers, but require orchestration expertise and infrastructure management. For most startups, serverless provides faster initial progress while containers become attractive as requirements become more specific and teams gain operational maturity.

Frontend Frameworks: React, Vue, Angular

React. dominates startup adoption for compelling reasons: the largest talent pool, extensive ecosystem, and mature tooling. Its component-based architecture and virtual DOM provide excellent performance for interactive applications. The learning curve is moderate, and most developers can contribute productively within weeks. React's flexibility is both a strength and a weakness. The ecosystem provides solutions for everything, but teams must make integration decisions that can introduce complexity. State management options range from built-in hooks to Redux to Zustand, each with different trade-offs.

Vue. offers an attractive middle ground with gentler learning curves and more opinionated conventions than React. Its reactive system provides excellent update performance, especially for smaller to medium applications. Vue's smaller ecosystem means fewer choices but better integration between official packages. The hiring challenge with Vue is real, while growing rapidly, the talent pool remains smaller than React. However, Vue's approachability means React developers can transition relatively quickly.

Angular. provides the most comprehensive framework with built-in solutions for routing, state management, testing, and form handling. It's powerful for complex applications but requires TypeScript knowledge and has the steepest learning curve. Angular works well for enterprise scenarios where teams appreciate comprehensive tooling and strong conventions.

API Style: REST vs GraphQL; BFF (Backend-for-Frontend) Patterns

REST APIs remain the default choice for most startup scenarios due to simplicity, caching benefits, and universal client support. REST's stateless nature scales well, and standard HTTP status codes provide clear error-handling patterns. Tooling for REST API development, testing, and documentation is mature and widely understood.

GraphQL shines when frontend teams need flexible data fetching, especially in scenarios with multiple client applications (web, mobile, admin panels) requiring different data shapes. GraphQL eliminates over-fetching and provides strongly typed schemas that improve development velocity once implemented.

The GraphQL complexity tax is significant: query optimization, N+1 problems, caching complexity, and learning curve for both frontend and backend teams. Most startups should start with REST and evaluate GraphQL once API proliferation becomes a bottleneck.

Backend-for-Frontend (BFF) patterns provide an architectural middle ground, where dedicated backend services optimize data delivery for specific client needs. BFF layers can aggregate multiple microservices, reshape data for client consumption, and handle client-specific authentication or caching logic.

Backend Choices, Languages & Frameworks

Node.js. Excels in scenarios requiring real-time features, heavy API usage, or teams wanting JavaScript uniformity across the stack. Its event-driven architecture handles concurrent I/O efficiently, making it ideal for chat applications, collaborative tools, or API-heavy workflows. The npm ecosystem provides solutions for virtually any requirement. Node.js performance limitations emerge with CPU-intensive tasks, where the single-threaded event loop becomes a bottleneck. Memory usage can be unpredictable, especially with poor garbage collection patterns.

Python. Provides the fastest development velocity for most scenarios, especially those involving data processing, machine learning, or rapid prototyping. Its syntax reduces bug rates and onboarding time. Python's ecosystem includes mature frameworks like Django for full-featured applications or FastAPI for high-performance APIs. Python's main limitation is execution speed, typically 3-5x slower than compiled languages for CPU-bound tasks. However, for I/O-bound applications (most web services), this difference is often negligible compared to database or network latency.

Go. delivers excellent performance with simpler deployment and scaling characteristics than Java or C#. Its compiled nature eliminates runtime dependencies, and built-in concurrency primitives handle high-throughput scenarios elegantly. Go's opinionated design reduces bike-shedding and improves code consistency. The Go trade-off is ecosystem maturity; while growing rapidly, it has fewer third-party libraries than Node.js or Python. Go's syntax and paradigms require learning investment from developers familiar with object-oriented languages.

Sync vs Async I/O, Background Jobs, Message Queues

Synchronous I/O models simplify development and debugging but limit concurrency. Traditional frameworks like Django, Rails, or Express with blocking I/O can handle modest loads (hundreds of concurrent connections) with simpler code patterns.

Asynchronous I/O becomes essential for high-concurrency scenarios. Node.js provides async by default, Python offers asyncio/FastAPI, and Go's goroutines handle concurrency elegantly. The programming complexity increases, but throughput improvements can be dramatic, 10x or more for I/O-bound workloads.

Background job processing separates long-running tasks from web request cycles. Popular solutions include Celery (Python), Sidekiq (Ruby), Bull (Node.js), or cloud-native options like AWS SQS with Lambda. Choose based on durability requirements, throughput needs, and operational complexity tolerance.

Message queues enable reliable communication between services and handle traffic spikes by buffering work. Redis provides lightweight pub/sub and job queues, while Apache Kafka handles high-throughput streaming scenarios. Managed services like AWS SQS/SNS reduce operational overhead.

Databases & Caching

PostgreSQL provides the most comprehensive feature set with excellent support for complex queries, JSON documents, full-text search, and custom extensions. Its MVCC (Multi-Version Concurrency Control) handles concurrent transactions elegantly, and the pgvector extension adds vector search capabilities for AI applications.

PostgreSQL's complexity can overwhelm simple use cases, and its resource requirements are higher than MySQL. However, its advanced features often eliminate the need for additional specialized databases.

MySQL optimizes for read-heavy workloads with simpler administration and broader hosting support. It provides excellent performance for straightforward relational data patterns and integrates easily with content management systems and e-commerce platforms.

MySQL's limitations include weaker support for complex queries, limited JSON functionality compared to PostgreSQL, and historically weaker consistency guarantees. However, recent versions have addressed many traditional concerns.

MongoDB excels when schema flexibility is paramount, content management, product catalogs, or applications with evolving data structures. Its horizontal scaling capabilities and native JSON storage reduce impedance mismatch between application objects and database storage.

MongoDB's trade-offs include eventual consistency challenges, complex transaction handling across documents, and higher memory usage. Query optimization requires different expertise than SQL databases.

Caching (Redis), Search (OpenSearch/Elastic), Analytics Warehouse

- Redis. Serves multiple roles in modern applications: session storage, caching layer, pub/sub messaging, and lightweight data structures. Its in-memory architecture provides microsecond latency, and persistence options balance durability with performance.

- Redis scaling requires partitioning strategies (sharding) or managed services like AWS ElastiCache. Memory costs can be significant for large datasets, but the performance benefits often justify the expense.

- Search engines. Like Elasticsearch or OpenSearch handle full-text search, log aggregation, and complex analytical queries that relational databases handle poorly. They provide fuzzy matching, relevance scoring, and faceted search capabilities essential for user-facing search features.

- Search infrastructure requires careful resource planning; Elasticsearch clusters can be memory and storage intensive. Managed services like AWS OpenSearch or Elastic Cloud reduce operational complexity but increase costs.

- Analytics warehouses. Separate reporting workloads from transactional systems. Cloud data warehouses like BigQuery, Redshift, or Snowflake provide SQL interfaces with massive parallel processing capabilities. For startups, these typically become relevant after reaching significant data volumes or analytical complexity.

AWS vs Azure vs GCP (Managed Services, Regional Coverage, Pricing Posture)

AWS provides the broadest service catalog and most mature ecosystem, making it the default choice for startups needing diverse capabilities. Its global infrastructure supports worldwide expansion, and the extensive third-party tooling ecosystem reduces vendor dependency risks. AWS's documentation and community knowledge base are unmatched.

AWS complexity can be overwhelming; the service catalog includes hundreds of options with overlapping capabilities. Pricing models are intricate, and costs can spiral without careful monitoring. However, this complexity often reflects real-world requirements rather than artificial limitations.

Microsoft Azure integrates naturally with existing Microsoft investments and provides strong hybrid cloud capabilities for organizations with on-premises infrastructure. Azure's enterprise features, compliance certifications, and support for .NET workloads make it attractive for business-focused applications.

Azure's pricing can be expensive for teams not already committed to Microsoft ecosystems. The learning curve is steep for teams without Windows Server or Microsoft development experience.

Google Cloud Platform (GCP) offers competitive pricing, cutting-edge AI/ML services, and developer-friendly interfaces. GCP's strength in data analytics, container orchestration (Kubernetes), and artificial intelligence make it compelling for technically sophisticated startups.

GCP's smaller enterprise adoption means fewer third-party integrations and community resources. Some services lag AWS in maturity or feature completeness, though the gap is narrowing rapidly.

Managed Services vs DIY (RDS, Firebase, App Engine, Cloud Run, ECS/EKS)

Managed databases like AWS RDS, Google Cloud SQL, or Azure Database eliminate server management, backup complexity, and scaling challenges. They cost 2-3x more than self-hosted alternatives but include monitoring, security patches, and professional support.

The DIY path requires significant DevOps expertise and ongoing maintenance but provides maximum control and cost optimization. For most startups, managed services are worth the premium until reaching substantial scale or specific customization requirements.

Platform-as-a-Service options like Google App Engine, AWS Elastic Beanstalk, or Azure App Service provide application hosting with minimal infrastructure management. They excel for web applications with standard requirements but can become restrictive as applications grow complex.

Container services like AWS ECS, Google Cloud Run, or Azure Container Instances bridge PaaS convenience with infrastructure control. They handle container orchestration while providing more flexibility than traditional PaaS platforms.

Best Tech Stack for Mobile App: Native vs Cross-Platform

Native development with Swift (iOS) and Kotlin (Android) provides the best user experience, complete API access, and optimal performance. Native apps integrate seamlessly with platform conventions and can leverage the latest OS features immediately upon release. The native approach requires separate teams with platform-specific expertise, doubling development and maintenance costs. Feature parity between platforms requires careful coordination, and development velocity is inherently slower.

React Native enables JavaScript developers to build mobile applications with 80-90% code reuse across platforms. The large React ecosystem provides familiar patterns and extensive third-party libraries. Hot reloading accelerates development cycles, and the Meta backing ensures long-term platform stability. React Native's bridge architecture can introduce performance bottlenecks, especially for animation-heavy or computationally intensive applications. Platform-specific functionality often requires native module development, reducing the cross-platform benefits.

Flutter compiles to native code and renders using its own graphics engine, providing consistent performance and visual fidelity across platforms. Dart's design enables high-performance applications with smooth animations and excellent development tooling. Flutter's main adoption barrier is Dart language learning requirements. While Google backs Flutter strongly, the ecosystem is newer than React Native's, and finding experienced Flutter developers can be challenging.

Offline-First, Push, Deep Links, App Store Policies

Offline-first architecture becomes crucial for mobile applications where network connectivity is unreliable. Both React Native and Flutter provide local storage solutions, but implementation patterns vary significantly. Native development offers the most sophisticated offline capabilities through platform-specific APIs.

Push notification implementation requires platform-specific setup regardless of development approach. Cross-platform frameworks provide abstraction layers, but underlying configuration complexity remains. Consider notification volumes, targeting sophistication, and analytics requirements when choosing providers.

Deep linking enables seamless navigation from external sources into specific application screens. Universal links (iOS) and app links (Android) require different implementation approaches that cross-platform frameworks attempt to unify.

App store policies increasingly influence technical architecture decisions. Apple's App Tracking Transparency, Google's billing policy changes, and both platforms' performance requirements affect framework choice and implementation approaches.

DevOps, Security & Observability (SLOs Without the Drama)

Continuous Integration starts with automated testing on every pull request and standardized development environments using Docker or similar containerization. GitHub Actions, GitLab CI, or CircleCI provide adequate functionality for most startups without complex enterprise requirements.

Infrastructure-as-Code using Terraform, AWS CDK, or cloud-native solutions like Pulumi enables reproducible deployments and environment management. Start simple with basic resource provisioning before adding complex orchestration or multi-environment management.

Observability foundations include structured logging, basic metrics collection, and error tracking. Tools like DataDog, New Relic, or open-source solutions like Grafana provide visualization and alerting capabilities. Avoid observability complexity until you have traffic that justifies the operational overhead.

Logging strategies should use structured formats (JSON), include correlation IDs for request tracking, and implement log levels appropriately. Centralized logging with services like AWS CloudWatch, Google Cloud Logging, or self-hosted ELK stacks enables debugging across distributed services.

Identity: Authentication & Authorization (OIDC/SAML), Secrets, Vaults

Authentication providers like Auth0, Firebase Auth, or AWS Cognito handle the complexity of secure user management, social login integration, and compliance requirements. Custom authentication systems are rarely justified for startups unless serving highly regulated industries.

Authorization patterns should separate authentication (who you are) from authorization (what you can do). Implement role-based or attribute-based access control systems that can evolve with business requirements.

Secrets management using AWS Secrets Manager, HashiCorp Vault, or cloud-native solutions prevents credentials from appearing in code repositories. Rotate secrets regularly and use different credentials for different environments.

OIDC and SAML support becomes important for enterprise customers requiring single sign-on capabilities. Plan for these requirements early if targeting business customers, as retrofitting SSO support can be architecturally challenging.

Cost Optimization & Error Budgets; Staging/Perf Envs

- Cloud cost optimization requires monitoring tools like AWS Cost Explorer, Google Cloud Billing, or third-party services like CloudHealth. Implement automated resource cleanup, right-size instances based on utilization, and use spot instances for non-critical workloads.

- Error budgets provide quantitative approaches to reliability decisions. Define service level objectives (SLOs) based on user impact rather than arbitrary uptime percentages. Use error budgets to balance feature velocity with reliability investments.

- Staging environments should mirror production architecture while using smaller resource allocations. Automate data seeding and use feature flags to test changes safely. Consider using blue-green or canary deployments for zero-downtime production updates.

- Performance testing environments help identify bottlenecks before they affect users. Tools like k6, Artillery, or cloud-based load testing services can simulate realistic traffic patterns and identify scaling limitations.

AI-Ready Stack (Data Pipelines, Feature Store, PII Masking)

Data pipeline architecture becomes critical for AI applications that require clean, structured data for training and inference. Modern data stacks using tools like Airbyte, Fivetran, or custom ETL processes ensure data quality and availability for AI workloads.

Feature stores like Feast, Tecton, or cloud-native solutions help manage the lifecycle of machine learning features, ensuring consistency between training and serving environments. For most startups, simple feature storage in existing databases suffices until ML complexity justifies specialized tooling.

PII masking and data privacy require careful architectural planning, especially for AI applications processing user data. Implement data anonymization, pseudonymization, or synthetic data generation to enable AI development while maintaining privacy compliance.

LLM Integration, RAG Patterns, Vector Database Options

Large Language Model integration can use API-based services like OpenAI, Anthropic Claude, or self-hosted models like Llama for more control. API services provide faster implementation and better reliability, while self-hosted options offer cost optimization and data privacy benefits.

Retrieval-Augmented Generation (RAG) patterns combine LLMs with external knowledge bases to provide accurate, up-to-date responses. RAG architectures require vector databases, embedding generation, and retrieval systems that add complexity but significantly improve AI application accuracy.

Vector database options include managed services like Pinecone, Weaviate Cloud, or self-hosted solutions like Milvus, Chroma, or PostgreSQL with pgvector. Choose based on scale requirements, budget constraints, and integration complexity with existing infrastructure.

Popular vector database choices for different scenarios:

- Pinecone: Managed, scalable, enterprise-ready

- Weaviate: Open-source with GraphQL API, hybrid search

- Chroma: Simple, lightweight, great for prototypes

- pgvector: PostgreSQL extension, familiar SQL interface

Eval/Monitoring for AI Features (Latency/Cost/Quality)

AI model evaluation requires different metrics than traditional software: response quality, factual accuracy, bias detection, and prompt injection resistance. Implement automated testing for common failure modes and human evaluation for quality assessment.

Cost monitoring for AI features tracks token usage, API calls, and compute costs that can scale unpredictably with user behavior. Implement usage caps, caching strategies, and efficient prompt design to control costs while maintaining functionality.

Latency optimization for AI applications includes prompt caching, model response streaming, and efficient vector search indexing. User experience suffers significantly when AI features introduce multi-second delays, so performance monitoring is critical.

Quality assurance for AI outputs requires ongoing evaluation as models and data evolve. Implement feedback loops, A/B testing for different prompts or models, and monitoring for output degradation over time.

Cost & TCO Considerations

Managed service premiums typically range from 200-400% of DIY alternatives but include security patches, backup management, monitoring, and professional support. For most startups, this premium is justified by reduced operational overhead and faster development velocity.

Serverless cost models work well for variable workloads but can become expensive at high, consistent utilization. Monitor cost per invocation and consider container alternatives when monthly costs exceed equivalent dedicated server pricing.

Data egress charges accumulate quickly for bandwidth-intensive applications. CDN services, regional data placement, and caching strategies can reduce egress costs significantly. Plan for these costs when designing data-heavy architectures or serving media content.

Support tier investments become valuable as applications gain business criticality. Enterprise support contracts provide faster response times, technical account management, and architectural guidance that can prevent expensive outages or performance issues.

Weighted Criteria: Team Skills, Time-to-Market, Performance, Cost, Compliance

Decision matrix methodology provides structured approaches to technology evaluation by weighting factors according to business priorities. Assign percentage weights to criteria like team skills (25%), time-to-market (25%), performance (20%), cost (15%), and compliance (15%) based on your startup's specific constraints.

Scoring consistency requires defined evaluation criteria for each technology option. Use scales like 1-10 or 1-100 with specific anchors (e.g., 90+ = "team has expert-level experience," 70-89 = "team has working knowledge," 50-69 = "team needs moderate training").

Bias mitigation includes involving multiple stakeholders in evaluation and questioning assumptions about familiar technologies. Teams often overweight technologies they know well and underestimate learning curve impacts for unfamiliar options.

Scenario analysis tests decision matrix results across different future conditions, what if hiring becomes more difficult, what if performance requirements increase, what if compliance requirements change. Robust technology choices perform well across multiple scenarios.

Final Conclusion

Strategic technology stack for startup selection balances immediate execution needs with long-term scalability requirements. The frameworks and methodologies outlined here provide systematic approaches to these critical decisions, but successful implementation requires ongoing adaptation as your business evolves.

Key principles for stack selection:

- Start with business constraints, not technology preferences

- Choose boring, proven technologies for core components

- Design for evolution rather than initial perfection

- Factor in team skills and hiring market realities

- Plan migration paths before you need them

The most successful startups focus on building exceptional products rather than showcasing technological sophistication. Your best technology stack for startups is the one that enables rapid iteration, reliable operation, and sustainable team growth.

Whether you're building web applications, mobile experiences, or AI-powered products, the right technology foundation accelerates every aspect of product development and business growth. Book a free consultation to develop a technology strategy that scales with your success.

FAQ

What is a technology stack for startups?

A technology stack for startups is the combination of programming languages, frameworks, databases, and tools used to build and run a digital product. It typically includes the frontend, backend, database, and DevOps layers. Choosing the best technology stack ensures faster development, scalability, and long-term maintainability.

Why is choosing the best technology stack important for startup growth?

Selecting the best technology stack for startups directly impacts product performance, scalability, and delivery speed. The right stack allows startups to iterate quickly, reduce development costs, and adapt easily to market changes. Poor tech stack choices can slow growth and create technical debt that’s costly to fix later.

What is the best technology stack for startups in 2025?

In 2025, the best technology stack for startups often includes:

- Frontend: React, Vue.js, or Next.js

- Backend: Node.js, Python (Django/FastAPI), or Go

- Database: PostgreSQL or MongoDB

- Cloud & DevOps: AWS, Azure, or Google Cloud

- AI & Analytics: OpenAI APIs, TensorFlow, or Snowflake

This modern stack supports rapid growth, cloud scalability, and AI integration for smarter products.

How can startups choose the right technology stack?

To choose the best technology stack, startups should consider:

- Project goals and complexity

- Team expertise and resources

- Time-to-market requirements

- Scalability and long-term maintenance

It’s best to consult with experienced CTOs or software development partners who can align the tech stack with business goals and future growth.

What are the common mistakes when choosing a startup technology stack?

Common mistakes include selecting trendy frameworks without validation, ignoring scalability, and underestimating integration needs. Startups should avoid overengineering in early stages, instead, focus on a lean and flexible stack that supports rapid MVP development and can evolve as the product grows.

How does the technology stack affect startup success and fundraising?

A well-chosen technology stack can make or break startup success. Investors often assess whether a product is built on scalable, modern, and maintainable technologies. A strong stack helps attract talent, reduce technical risk, and showcase readiness for rapid growth, all key factors in successful fundraising rounds.

%20(1).jpg)

%20(1)%20(1)%20(1).jpg)