The artificial intelligence revolution is reshaping how businesses operate, compete, and scale. For CTOs and technical leaders, the challenge isn't just understanding AI capabilities; it's identifying which AI startup ideas and AI app ideas will deliver measurable business value. This guide explores proven AI concepts, implementation strategies, and real-world case studies to help you navigate the AI landscape with confidence and build solutions that matter.

For organizations ready to transform AI concepts into competitive advantages, custom software development expertise provides the technical foundation and strategic guidance needed for successful implementation.

From GenAI agents to predictive analytics platforms, we'll examine opportunities across industries and functions, with the technical depth and strategic framework needed to turn promising concepts into successful AI ventures.

Why AI: Founder & CTO Imperatives in 2025

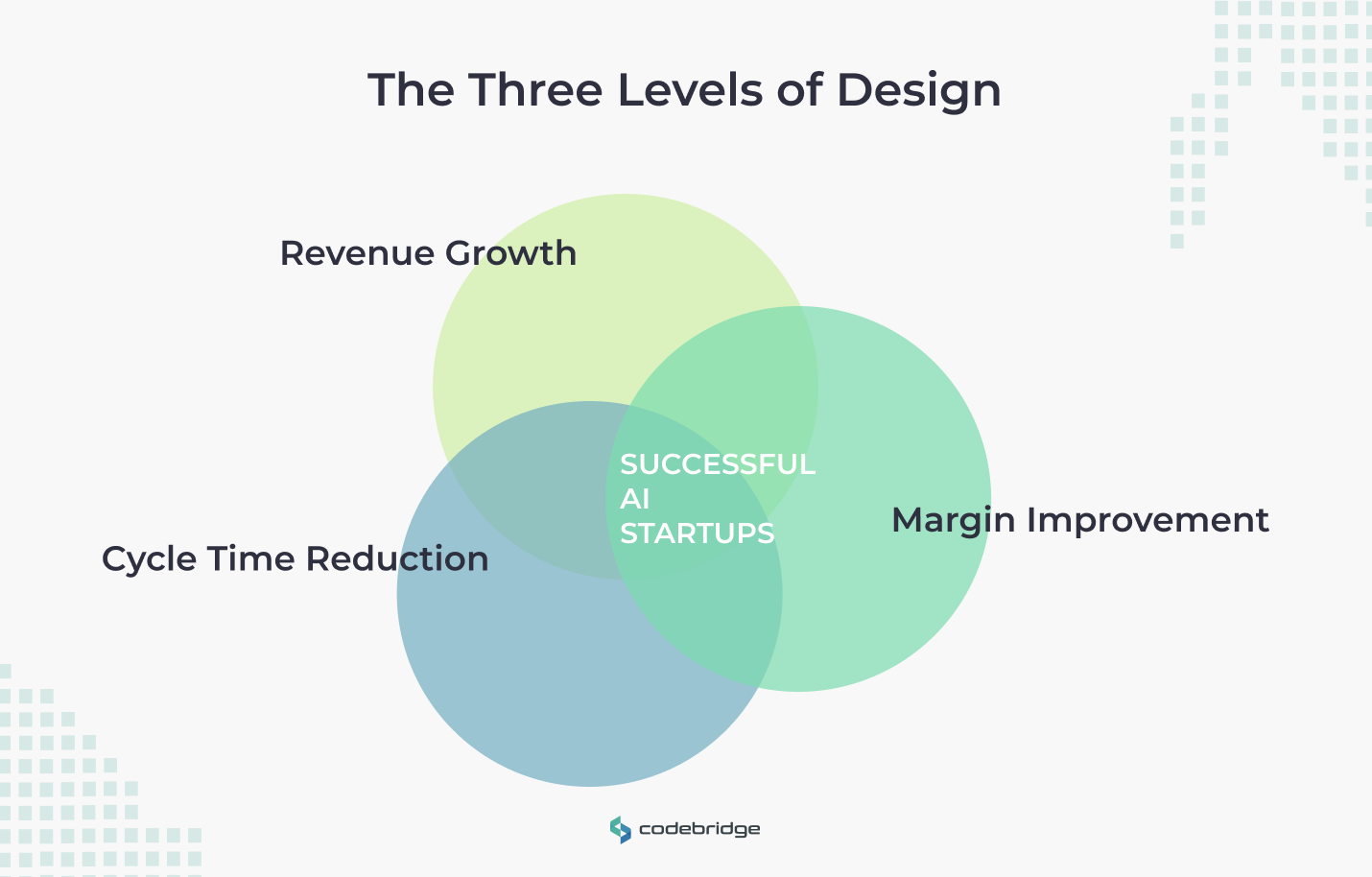

The AI gold rush has produced countless demos and prototypes, but successful AI startups focus relentlessly on three core business drivers:

- revenue growth

- margin improvement

- cycle time reduction

Grand View Research reports that the global AI market was valued at USD 279.22 billion in 2024 and is projected to reach USD 1,811.75 billion by 2030, growing at a CAGR of 35.9% from 2025 to 2030. Understanding where AI actually moves these needles separates viable ventures from expensive experiments.

Where AI Actually Moves the Needle (Revenue, Margin, Cycle Time)

- Revenue growth – AI applications here typically fall into personalization, pricing optimization, and customer acquisition. E-commerce platforms using AI personalization engines can see 10-30% increases in conversion rates, while dynamic pricing algorithms can boost margins by 2-8% across retail and SaaS businesses. Meanwhile, AI-powered lead scoring and content generation tools can shorten sales cycles by 20-40%.

- Margin improvement – comes primarily from operational efficiency. AI customer support chatbots can handle 60-80% of routine inquiries, reducing support costs by $0.50-2.00 per interaction. Predictive maintenance in manufacturing prevents costly downtime, while automated document processing eliminates manual data entry bottlenecks.

- Cycle time reduction – appears in development workflows, financial operations, and compliance processes. AI code generation tools like GitHub Copilot can reduce development time by 15-25%, while automated invoice processing cuts accounts payable cycles from days to hours.

Data Moats vs Feature Parity: When Speed Beats Novelty

The most defensible AI businesses build data moats rather than relying solely on model sophistication. Companies with proprietary datasets, customer behavior patterns, domain-specific annotations, or real-time operational data maintain competitive advantages even as foundation models commoditize.

However, speed often trumps novelty in enterprise adoption. CTOs prioritize AI solutions that integrate seamlessly with existing workflows over cutting-edge research experiments.

The key is identifying where incremental AI improvements compound into significant business advantages.

The Idea Matrix: AI App Ideas by Function & Industry

Successful AI startups align technological capabilities with specific business functions and industry pain points. This matrix approach helps identify high-value opportunities where AI provides clear competitive advantages.

Product/Growth: AI Personalization, Pricing Optimization, Churn Prediction

- Personalization – For product and growth teams, AI personalization engines analyze user behavior, preferences, and contextual signals to deliver tailored content, product recommendations, and interface customizations. Netflix has estimated that its recommendation system is worth about $1 billion a year in retained revenue, while Spotify's personalized playlists reportedly drive 40% of listening time.

- Pricing optimization – platforms use machine learning to analyze market dynamics, competitor pricing, and demand signals in real time. Airlines and hotels have used dynamic pricing for decades, but AI democratizes these capabilities for SaaS companies, e-commerce platforms, and service businesses.

- Churn prediction – models identify at-risk customers before they cancel, enabling proactive retention campaigns. By analyzing usage patterns, support interactions, and engagement metrics, these systems can predict churn with 85-95% accuracy, allowing targeted interventions that improve retention rates by 15-30%.
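The snippet below is a minimal sketch of churn scoring under stated assumptions. It uses three hypothetical usage features, already scaled to 0-1 (weekly logins, recent support tickets, days since last activity), and a tiny made-up training set. It fits a plain logistic regression with batch gradient descent and ranks customers by predicted churn probability. A production model would typically use a library such as scikit-learn and far richer features. Because churners are usually a minority, precision and recall on the churn class say more than raw accuracy.

```python
import math
from typing import List, Sequence, Tuple

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic(X: List[Sequence[float]], y: List[int],
                   lr: float = 0.5, epochs: int = 2000) -> Tuple[List[float], float]:
    """Fit logistic-regression weights and bias with batch gradient descent."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi  # prediction error
            for j in range(d):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

def churn_probability(w: List[float], b: float, x: Sequence[float]) -> float:
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)

# Hypothetical features: [weekly logins, support tickets, days inactive], each scaled to 0-1
X = [[0.9, 0.0, 0.05], [0.8, 0.2, 0.10], [0.7, 0.1, 0.00],   # retained customers
     [0.2, 0.8, 0.90], [0.1, 0.6, 0.70], [0.3, 0.9, 0.80]]   # churned customers
y = [0, 0, 0, 1, 1, 1]
w, b = train_logistic(X, y)

# Rank (hypothetical) current customers by churn risk, highest first
customers = {"acme": [0.15, 0.7, 0.85], "globex": [0.85, 0.1, 0.05]}
at_risk = sorted(customers, key=lambda c: churn_probability(w, b, customers[c]), reverse=True)
```

The top of `at_risk` is where a retention campaign would start.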

Support/Operations: AI Customer Support Chatbot, Agentic Workflows, RPA + LLM

Customer support represents one of the most mature AI application areas, with chatbots handling increasingly complex interactions through natural language understanding and knowledge base integration. Modern AI customer support chatbots can resolve 70-85% of common inquiries without human escalation while maintaining customer satisfaction scores comparable to human agents.

Agentic workflows combine multiple AI capabilities (understanding, planning, tool usage, and execution) to automate complex business processes. These systems can handle multi-step tasks like order processing, compliance checks, and vendor onboarding with minimal human oversight.

The fusion of Robotic Process Automation (RPA) with Large Language Models creates powerful hybrid systems. RPA handles structured data manipulation and system integration, while LLMs process unstructured content and make contextual decisions.

Engineering: AI Code/Test Generation, Incident Summarization

- Code generation – Engineering teams use AI to accelerate development cycles and improve code quality. AI-powered code generation tools assist with boilerplate creation, API integration, and algorithm implementation. These systems reduce development time while helping maintain coding standards and best practices.

- Test Generation – Automated test generation analyzes codebases to create comprehensive test suites, identifying edge cases and potential vulnerabilities that manual testing might miss. AI-driven code review tools flag security issues, performance bottlenecks, and maintainability concerns before they reach production.

- Incident Summarization – Incident response benefits significantly from AI summarization and root cause analysis. When production issues occur, AI systems can quickly parse logs, error messages, and system metrics to provide contextualized summaries and suggested remediation steps.
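A common preprocessing step before asking an LLM to summarize an incident is to collapse thousands of log lines into a handful of distinct error signatures. The model then sees counts and representative patterns rather than raw noise. The sketch below assumes a simple "timestamp LEVEL message" log format and covers only the grouping step. It replaces UUIDs, hex values, and numbers with placeholders and counts ERROR lines by the resulting signature. Feeding the result into a summarization prompt is left out.

```python
import re
from collections import Counter
from typing import Iterable, List, Tuple

# Tokens that vary between otherwise identical errors (illustrative, not exhaustive)
_UUID = re.compile(r"\b[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}\b", re.I)
_HEX = re.compile(r"\b0x[0-9a-f]+\b", re.I)
_NUM = re.compile(r"\b\d+\b")

def signature(line: str) -> str:
    """Replace volatile tokens so repeated errors share one signature (UUIDs first, since they contain digits)."""
    line = _UUID.sub("<uuid>", line)
    line = _HEX.sub("<hex>", line)
    return _NUM.sub("<n>", line)

def top_error_signatures(lines: Iterable[str], limit: int = 5) -> List[Tuple[str, int]]:
    """Count ERROR-level lines by signature, most frequent first."""
    counts = Counter(signature(l.strip()) for l in lines if " ERROR " in l)
    return counts.most_common(limit)

logs = [
    "2025-01-10 12:00:01 ERROR db timeout after 3000 ms (conn 17)",
    "2025-01-10 12:00:02 ERROR db timeout after 3000 ms (conn 42)",
    "2025-01-10 12:00:03 INFO request ok",
    "2025-01-10 12:00:04 ERROR payment failed for order 9f1c2e4a-1b2c-4d3e-8f90-1234567890ab",
]
top = top_error_signatures(logs)
```

The two timeout lines collapse into one signature with a count of 2, and the INFO line is ignored.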

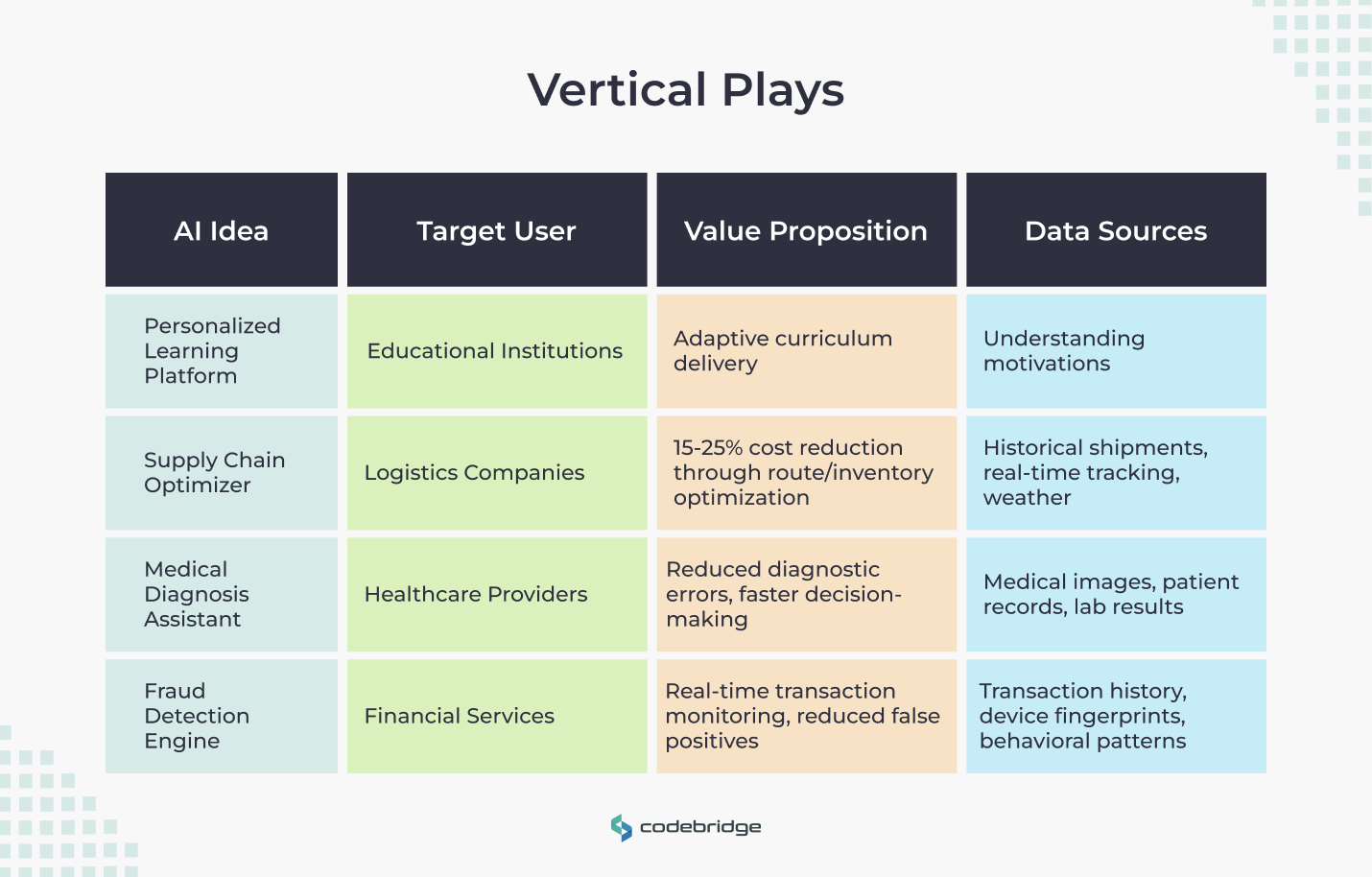

Vertical Plays: Healthcare, Fintech, Logistics, Education, Events/Festivals

Industry-specific AI applications often provide stronger value propositions than horizontal solutions. Healthcare AI focuses on diagnostic assistance, treatment optimization, and administrative automation. Fintech applications include fraud detection, credit scoring, and algorithmic trading. Logistics companies deploy AI for route optimization, demand forecasting, and supply chain visibility.

Education technology leverages AI for personalized learning paths, automated grading, and student engagement analytics. The events and festivals industry presents unique opportunities for crowd management, dynamic scheduling, and personalized attendee experiences.

GenAI Startup Ideas (Agents, RAG, and Workflow Automation)

Generative AI has unlocked new categories of applications that were impossible with traditional machine learning approaches. The combination of large language models, agentic frameworks, and retrieval systems creates powerful platforms for knowledge work automation.

AI Agent Ideas (Task Planning, Tool Use, Guardrails)

AI agents represent the next evolution beyond simple chatbots, capable of multi-step reasoning, tool usage, and autonomous task execution. These systems excel at complex workflows that require planning, decision-making, and integration with external systems.

- Task planning agents – break down high-level objectives into executable subtasks, managing dependencies and resource constraints. A customer onboarding agent might coordinate document collection, compliance verification, account setup, and stakeholder notifications across multiple systems.

- Tool-using agents – integrate with APIs, databases, and third-party services to accomplish tasks that require external information or actions. Research agents can search databases, analyze documents, and synthesize findings into structured reports. Sales agents can update CRM systems, schedule meetings, and generate follow-up communications.

- Guardrails – are essential to prevent unintended actions, data leakage, and hallucinations. They include input validation, output verification, human approval workflows, and comprehensive audit trails.
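Planning, tool use, and guardrails can be sketched without any agent framework. In this minimal example, the onboarding plan is hypothetical and the tools are stubs standing in for real API calls. Tasks run in dependency order via the standard library's `graphlib.TopologicalSorter`. Only allowlisted tools may be called, and tools marked high-risk need an approval callback to return `True` before they execute. In a real agent, an LLM would usually produce the plan; here it is hard-coded.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set

# Hypothetical stub tools standing in for real integrations
def collect_documents(ctx: dict) -> str: return "documents collected"
def verify_compliance(ctx: dict) -> str: return "compliance ok"
def create_account(ctx: dict) -> str: return "account created"
def notify_stakeholders(ctx: dict) -> str: return "stakeholders notified"

TOOLS: Dict[str, Callable[[dict], str]] = {
    "collect_documents": collect_documents,
    "verify_compliance": verify_compliance,
    "create_account": create_account,
    "notify_stakeholders": notify_stakeholders,
}
HIGH_RISK: Set[str] = {"create_account"}  # actions that need human sign-off

def run_plan(plan: Dict[str, Set[str]], approve: Callable[[str], bool]) -> List[str]:
    """Execute tasks in dependency order, enforcing an allowlist and approvals.

    `plan` maps each task (a tool name) to the set of tasks it depends on.
    Returns an audit trail of what happened.
    """
    audit: List[str] = []
    ctx: dict = {}
    for task in TopologicalSorter(plan).static_order():
        if task not in TOOLS:
            raise PermissionError(f"tool not allowlisted: {task}")
        if task in HIGH_RISK and not approve(task):
            audit.append(f"{task}: blocked pending approval")
            break  # halt the plan; in this linear plan every later step depends on this one
        ctx[task] = TOOLS[task](ctx)
        audit.append(f"{task}: {ctx[task]}")
    return audit

onboarding_plan = {
    "collect_documents": set(),
    "verify_compliance": {"collect_documents"},
    "create_account": {"verify_compliance"},
    "notify_stakeholders": {"create_account"},
}
approved_run = run_plan(onboarding_plan, approve=lambda task: True)
blocked_run = run_plan(onboarding_plan, approve=lambda task: False)
```

With approval denied, the trail stops at `create_account: blocked pending approval`, so no account is created and no one is notified.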

Retrieval Augmented Generation (RAG) Patterns & Vector Database Choices

Retrieval Augmented Generation (RAG) combines the reasoning capabilities of large language models with access to external knowledge bases, enabling AI systems to provide accurate, up-to-date information while citing sources and maintaining context.

RAG implementations typically follow a pattern of query processing, relevant document retrieval, context injection, and response generation. The quality of retrieval significantly impacts overall system performance, making vector database selection crucial for success.
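The query, retrieve, and inject steps can be shown end to end with a toy retriever. This sketch assumes a bag-of-words vector as a stand-in for a real embedding model. It stops at building the prompt, because the generation call depends on your model provider. In production, the vectors would come from an embedding API and live in a vector database.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple

def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase term counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, docs: Dict[str, str], k: int = 2) -> List[Tuple[str, float]]:
    """Return (doc id, score) for the k documents most similar to the query."""
    q = embed(query)
    scored = [(doc_id, cosine(q, embed(text))) for doc_id, text in docs.items()]
    return sorted(scored, key=lambda s: s[1], reverse=True)[:k]

def build_prompt(query: str, docs: Dict[str, str], k: int = 2) -> str:
    """Inject retrieved passages, tagged with their ids so the model can cite them."""
    context = "\n".join(f"[{doc_id}] {docs[doc_id]}" for doc_id, _ in retrieve(query, docs, k))
    return ("Answer using only the sources below and cite them by id.\n"
            f"Sources:\n{context}\n\nQuestion: {query}")

# Hypothetical knowledge base
kb = {
    "refunds": "Refunds are issued within 14 days of a return request.",
    "shipping": "Standard shipping takes 3 to 5 business days.",
    "warranty": "Hardware carries a one year limited warranty.",
}
prompt = build_prompt("How many days until refunds are issued?", kb)
```

Tagging each passage with its id is what lets the model cite sources in its answer.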

Vector database options include specialized solutions like Pinecone, Weaviate, and Milvus, as well as traditional databases with vector extensions like PostgreSQL with pgvector. The choice depends on scale requirements, latency constraints, and integration preferences.

Advanced RAG patterns include hierarchical retrieval for complex documents, multi-modal retrieval combining text and images, and hybrid search combining semantic and keyword matching for improved relevance.
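One common way to implement hybrid search is reciprocal rank fusion (RRF). It merges a keyword ranking and a semantic ranking using only each document's rank position, so the two systems' raw scores never need to be calibrated against each other. The sketch assumes the two ranked lists already exist, for example from BM25 and a vector index. The document ids are hypothetical, and `k = 60` is the constant from the original RRF paper rather than a tuned value.

```python
from collections import defaultdict
from typing import Dict, List, Sequence

def reciprocal_rank_fusion(rankings: Sequence[List[str]], k: int = 60) -> List[str]:
    """Fuse ranked lists of document ids: each list adds 1 / (k + rank) to a document's score."""
    scores: Dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)

keyword_hits = ["doc_pricing", "doc_faq", "doc_terms"]       # e.g. from keyword/BM25 search
semantic_hits = ["doc_faq", "doc_onboarding", "doc_pricing"]  # e.g. from vector search
fused = reciprocal_rank_fusion([keyword_hits, semantic_hits])
```

Here `doc_faq` ranks first because it sits near the top of both lists, even though it is first in only one of them.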

Structured Outputs, Eval Sets, and Human-in-the-Loop

Production AI systems require structured, predictable outputs that integrate cleanly with downstream systems. JSON schemas, function calling, and constrained generation ensure AI responses follow expected formats while maintaining flexibility for natural language components.
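A provider's native structured-output or function-calling mode is not always available. In that case, a lightweight fallback is to parse the model's reply as JSON and check it against the expected fields and types before anything downstream sees it, retrying or escalating on failure. The support-ticket triage schema and the hand-rolled checker below are hypothetical illustrations. A real project might use JSON Schema or Pydantic instead.

```python
import json
from typing import Any, Dict, List, Optional, Set, Tuple

# Hypothetical schema: field name -> (expected type, allowed values or None for any)
TRIAGE_SCHEMA: Dict[str, Tuple[type, Optional[Set[Any]]]] = {
    "category": (str, {"billing", "technical", "account"}),
    "priority": (int, {1, 2, 3}),
    "summary": (str, None),
}

def validate_output(raw: str, schema=TRIAGE_SCHEMA) -> Tuple[Dict[str, Any], List[str]]:
    """Parse a model reply and return (data, errors); an empty error list means valid."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return {}, [f"invalid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return {}, ["top-level value must be an object"]
    errors = []
    for name, (ftype, allowed) in schema.items():
        if name not in data:
            errors.append(f"missing field: {name}")
        elif type(data[name]) is not ftype:  # strict check, so True is not accepted as an int
            errors.append(f"{name}: expected {ftype.__name__}")
        elif allowed is not None and data[name] not in allowed:
            errors.append(f"{name}: {data[name]!r} not allowed")
    return data, errors

good, good_errors = validate_output('{"category": "billing", "priority": 2, "summary": "Double charge"}')
_, bad_errors = validate_output('{"category": "sales", "priority": "high"}')
```

A non-empty error list can be fed back to the model in a retry prompt, or used to route the item to a person.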

Evaluation sets provide objective measures of system performance across accuracy, relevance, and safety dimensions. These datasets should represent real-world usage patterns and edge cases, enabling continuous improvement and regression testing.

Human-in-the-loop workflows balance automation efficiency with quality control. Strategic human oversight points, such as final approval for high-stakes decisions or feedback collection for model improvement, maintain system reliability while preserving most automation benefits.
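A simple way to place those oversight points is a routing rule. Outputs that are low-confidence or high-stakes go to a review queue, and everything else is applied automatically. The thresholds, the `amount` field, and the availability of a confidence score are all hypothetical here. In practice, confidence might come from a classifier score, self-consistency checks, or heuristics calibrated on an evaluation set.

```python
from dataclasses import dataclass

@dataclass
class Decision:
    action: str        # e.g. "approve_refund" (hypothetical)
    amount: float      # monetary value at stake
    confidence: float  # 0..1, however your system estimates it

def route(decision: Decision, min_confidence: float = 0.85, max_auto_amount: float = 500.0) -> str:
    """Return 'auto' or 'human_review' based on confidence and stakes."""
    if decision.confidence < min_confidence:
        return "human_review"  # the model is unsure
    if decision.amount > max_auto_amount:
        return "human_review"  # high stakes: require sign-off regardless of confidence
    return "auto"
```

Logging each reviewer's verdict on routed items also yields labeled examples for the evaluation set.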

AI SaaS Ideas with Fast Payback

Software-as-a-Service models provide predictable revenue streams and clear value metrics for AI applications. The most successful AI SaaS products solve specific, measurable problems with fast time-to-value and obvious ROI calculation.

SMB Copilots (CRM, Accounting, HRIS)

Small and medium businesses often lack dedicated data science resources but face the same operational challenges as larger enterprises. AI copilots for standard business software (CRM systems, accounting platforms, and HR management tools) democratize advanced analytics and automation.

CRM copilots analyze sales pipelines to identify at-risk deals, suggest optimal follow-up timing, and generate personalized outreach content. These systems can increase sales team productivity by 20-35% while improving conversion rates through better lead prioritization.

Accounting copilots automate invoice processing, expense categorization, and financial reporting while flagging potential compliance issues. Small businesses using AI-powered bookkeeping tools can reduce manual data entry by 80-90% and improve financial accuracy.

HRIS copilots streamline recruiting, onboarding, and performance management through automated candidate screening, personalized communication, and predictive analytics for employee retention and success.

Analytics Copilots Over BI/Warehouse

Business intelligence and data warehouse platforms contain vast amounts of structured data but require significant expertise to extract actionable insights. Analytics copilots bridge this gap by translating natural language questions into SQL queries, generating visualizations, and explaining statistical findings in business terms.

These systems can reduce time-to-insight from hours to minutes while making advanced analytics accessible to non-technical stakeholders. Integration with existing BI platforms like Tableau, Power BI, or Looker provides familiar interfaces while adding AI-powered query assistance and automated insight generation.
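Generated SQL runs against real data, so analytics copilots usually validate it before execution. This sketch assumes the SQL string has already come back from a model; the text-to-SQL call itself is omitted. It applies two guards. The first is a crude check that only a single statement starting with `SELECT` or `WITH` may pass. The second is SQLite's `PRAGMA query_only`, which makes the engine reject writes that slip past the first check. The in-memory `orders` table is hypothetical. A warehouse would rely on a read-only role with scoped permissions instead.

```python
import re
import sqlite3
from typing import List, Tuple

_READ_ONLY_START = re.compile(r"^\s*(select|with)\b", re.IGNORECASE)

def is_single_select(sql: str) -> bool:
    """Crude allowlist: exactly one statement, starting with SELECT or WITH."""
    body = sql.strip().rstrip(";")
    return ";" not in body and bool(_READ_ONLY_START.match(body))

def run_generated_sql(conn: sqlite3.Connection, sql: str) -> List[Tuple]:
    """Execute model-generated SQL only if it passes the allowlist check."""
    if not is_single_select(sql):
        raise ValueError("rejected: only single read-only queries are allowed")
    return conn.execute(sql).fetchall()

# Hypothetical demo table standing in for a warehouse
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (region TEXT, revenue REAL)")
conn.executemany("INSERT INTO orders VALUES (?, ?)",
                 [("EMEA", 120.0), ("EMEA", 80.0), ("APAC", 50.0)])
conn.commit()
conn.execute("PRAGMA query_only = ON")  # engine-level guard: reject any write

# SQL as a model might return it for "What is revenue by region?"
generated = "SELECT region, SUM(revenue) FROM orders GROUP BY region ORDER BY region"
rows = run_generated_sql(conn, generated)
```

A statement such as `WITH x AS (SELECT 1) DELETE FROM orders` passes the keyword check, which is why the engine-level guard is worth having.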

Document Intelligence (Contracts, Invoices, KYC)

Document processing represents a massive opportunity for AI automation, particularly in industries handling large volumes of structured and semi-structured documents. Legal contract analysis, financial document processing, and Know Your Customer (KYC) verification all benefit from AI-powered information extraction and classification.

Contract intelligence platforms can extract key terms, identify compliance issues, and flag deviations from standard language across thousands of documents. These systems can reduce legal review time by 60-80% while improving consistency and risk management.

Invoice processing automation handles multiple formats, currencies, and languages while integrating with accounting systems and approval workflows. Accuracy rates can exceed 95% for standard invoice formats, with significant cost savings compared to manual processing.
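Whatever extracts the fields (OCR plus a layout model, or an LLM), a cheap post-extraction check catches many errors before they reach the accounting system. Line items should sum to the subtotal, subtotal plus tax should equal the total, and the date should parse. The field names below form a hypothetical extraction schema. Amounts use `Decimal` to avoid floating-point rounding. Invoices that fail these checks are natural candidates for human review.

```python
from datetime import date
from decimal import Decimal
from typing import Dict, List

def check_invoice(inv: Dict) -> List[str]:
    """Return consistency problems in an extracted invoice (an empty list means it passes)."""
    problems = []
    items_total = sum((Decimal(item["amount"]) for item in inv["line_items"]), Decimal("0"))
    subtotal, tax, total = (Decimal(inv[k]) for k in ("subtotal", "tax", "total"))
    if items_total != subtotal:
        problems.append(f"line items sum to {items_total}, subtotal says {subtotal}")
    if subtotal + tax != total:
        problems.append(f"subtotal + tax = {subtotal + tax}, total says {total}")
    try:
        date.fromisoformat(inv["invoice_date"])  # expects YYYY-MM-DD
    except ValueError:
        problems.append(f"unparseable date: {inv['invoice_date']!r}")
    return problems

extracted = {
    "line_items": [{"amount": "100.00"}, {"amount": "25.50"}],
    "subtotal": "125.50", "tax": "25.10", "total": "150.60",
    "invoice_date": "2025-03-31",
}
```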

KYC and compliance automation streamlines customer onboarding by automatically extracting information from identity documents, verifying against watchlists, and generating compliance reports. These systems reduce onboarding time from days to hours while improving regulatory compliance.

Tech Stack & LLMOps for Execution

Successful AI implementations require robust technical foundations that support rapid experimentation, reliable production deployment, and continuous optimization. The choice of models, infrastructure, and operational practices directly impacts both development velocity and ongoing costs.

Model Choices (Open vs Managed), Prompt/Version Control, Observability

Model selection involves trade-offs between cost, performance, customization, and vendor dependence. Managed APIs like OpenAI's GPT or Anthropic's Claude provide excellent out-of-the-box performance with minimal infrastructure requirements, while open-weight models like Meta's Llama or Mistral offer greater control and potentially lower long-term costs.

Hybrid approaches often provide the best balance, using managed APIs for prototyping and specific use cases while developing custom models for high-volume or sensitive applications. The key is maintaining flexibility to switch approaches as requirements evolve.

Prompt engineering and version control become critical as AI applications grow in complexity. Changes to prompts can significantly impact system behavior, making careful testing and rollback capabilities essential. Tools like MLflow, Weights & Biases, or custom versioning systems help track prompt iterations alongside traditional code changes.
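Versioning prompts can be as simple as storing each template under a content hash and keeping an ordered history per prompt name. Any production output can then be traced to the exact prompt that produced it, and a bad change can be rolled back. The in-memory `PromptRegistry` below is a hypothetical illustration, not the API of MLflow or Weights & Biases. A real setup would persist versions alongside the code that uses them.

```python
import hashlib
from typing import Dict, List

class PromptRegistry:
    """In-memory prompt store: content-addressed versions plus an active pointer per name."""

    def __init__(self) -> None:
        self._history: Dict[str, List[str]] = {}  # name -> version ids, oldest first
        self._templates: Dict[str, str] = {}      # version id -> template text
        self._active: Dict[str, str] = {}         # name -> active version id

    def register(self, name: str, template: str) -> str:
        """Store a template, make it active, and return its short content hash."""
        version = hashlib.sha256(template.encode("utf-8")).hexdigest()[:12]
        self._templates[version] = template
        history = self._history.setdefault(name, [])
        if version not in history:
            history.append(version)
        self._active[name] = version
        return version

    def get(self, name: str) -> str:
        return self._templates[self._active[name]]

    def rollback(self, name: str) -> str:
        """Re-activate the version registered before the active one."""
        history = self._history[name]
        idx = history.index(self._active[name])
        if idx == 0:
            raise ValueError(f"no earlier version of {name!r}")
        self._active[name] = history[idx - 1]
        return self._active[name]

registry = PromptRegistry()
v1 = registry.register("summarize", "Summarize:\n{text}")
v2 = registry.register("summarize", "Summarize in 3 bullets:\n{text}")
```

Logging the active version id with every model call is what makes outputs traceable to a prompt.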

Observability for AI systems extends beyond traditional application monitoring to include model performance metrics, data drift detection, and user interaction patterns. Understanding how users interact with AI features helps identify improvement opportunities and potential issues before they impact business outcomes.

Data Pipelines, PII Masking, Caching & Cost Caps

Production AI systems require robust data pipelines that can handle real-time streaming and batch processing while maintaining data quality and privacy compliance. Modern data stack components like Apache Airflow, dbt, and cloud-native solutions provide the foundation for reliable AI data workflows.

- PII masking – Masking and anonymizing personally identifiable information (PII) becomes critical when processing customer data through AI systems. Techniques like differential privacy, k-anonymity, and synthetic data generation help maintain privacy while preserving analytical value.

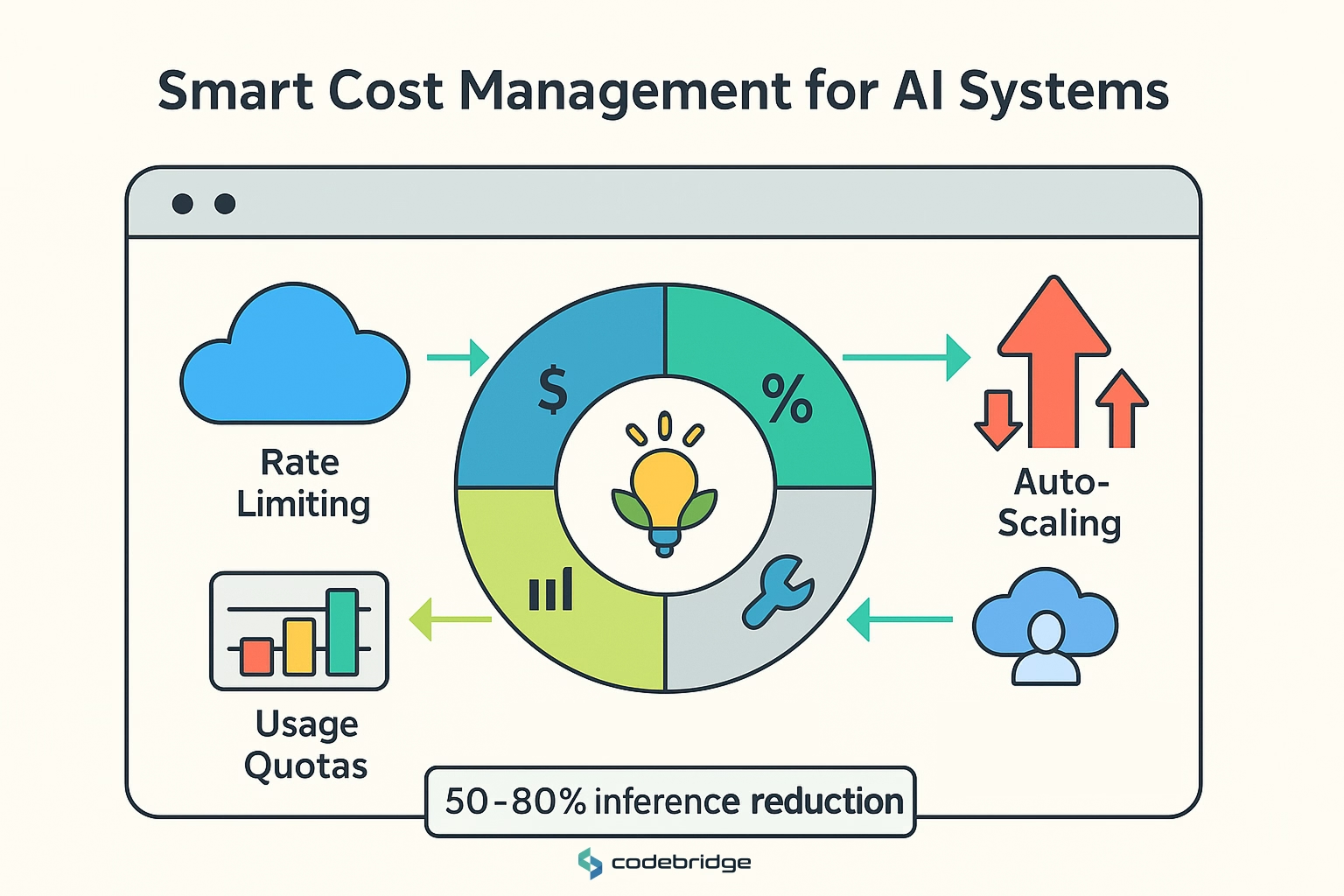

- Caching strategies – impact both performance and costs for AI applications. Semantic caching can identify similar queries and return cached responses, while traditional caching handles exact matches. Combined with intelligent request batching and model optimization, these techniques can reduce inference costs by 50-80%.

- Cost management – requires both technical and operational controls. Rate limiting, usage quotas, and automatic scaling policies prevent runaway costs during traffic spikes or system misuse. Real-time cost monitoring and alerting help maintain budget compliance while supporting business growth.
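PII masking, caching, and cost caps can sit in one thin wrapper around the model call. The sketch rests on several assumptions:

- The model call is passed in as a function.
- The price per 1,000 tokens is illustrative.
- Tokens are crudely estimated as whitespace-separated words of the prompt.

Prompts are masked with simple regexes for emails and North American-style phone numbers; regexes catch only obvious patterns, and dedicated PII detection tools go much further. Identical masked prompts are then served from an exact-match cache. A daily budget cap refuses calls once estimated spend would exceed it. Semantic caching would replace the dictionary lookup with an embedding-similarity search.

```python
import hashlib
import re
from typing import Callable, Dict

EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"(?:\+?\d{1,3}[\s.-]?)?\(?\d{3}\)?[\s.-]?\d{3}[\s.-]?\d{4}")

def mask_pii(text: str) -> str:
    """Replace obvious emails and phone numbers with placeholders (emails first)."""
    return PHONE.sub("<PHONE>", EMAIL.sub("<EMAIL>", text))

class GuardedClient:
    def __init__(self, call_model: Callable[[str], str], daily_budget_usd: float,
                 usd_per_1k_tokens: float = 0.002) -> None:
        self.call_model = call_model          # hypothetical model call
        self.budget = daily_budget_usd
        self.price = usd_per_1k_tokens        # illustrative price
        self.spent = 0.0
        self.cache: Dict[str, str] = {}

    def ask(self, prompt: str) -> str:
        safe = mask_pii(prompt)               # PII never leaves the process unmasked
        key = hashlib.sha256(safe.encode("utf-8")).hexdigest()
        if key in self.cache:
            return self.cache[key]            # cache hit: no model cost
        est_cost = len(safe.split()) / 1000 * self.price  # crude: words as tokens, prompt only
        if self.spent + est_cost > self.budget:
            raise RuntimeError("daily AI budget exceeded")
        answer = self.call_model(safe)
        self.spent += est_cost
        self.cache[key] = answer
        return answer

sent = []
def fake_model(p: str) -> str:
    sent.append(p)
    return "ok"

client = GuardedClient(fake_model, daily_budget_usd=1.0)
client.ask("Email jane.doe@example.com or call +1 (555) 010-2345 about order 42")
client.ask("Email jane.doe@example.com or call +1 (555) 010-2345 about order 42")
```

The second call is answered from the cache, so the model sees only one masked prompt.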

Evaluation (Accuracy, Hallucination Rate, Latency, $/Task)

Comprehensive evaluation frameworks measure multiple dimensions of AI system performance to ensure production readiness and ongoing optimization. Accuracy metrics vary by use case but should align with business objectives and user expectations.

Hallucination detection becomes crucial for applications where factual accuracy matters. Techniques include consistency checking across multiple model runs, external fact verification, and confidence scoring to flag potentially unreliable outputs.
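Consistency checking can be sketched as sampling the same question several times and measuring how often the answers agree. Low agreement is a signal to flag or escalate, not proof of a hallucination. The `sample` callable is hypothetical and stands in for a model call with non-zero temperature. Normalizing answers by trimming and lowercasing is a simplification; free-text answers usually need a semantic comparison.

```python
from collections import Counter
from typing import Callable, Tuple

def consistency_check(sample: Callable[[str], str], question: str, n: int = 5,
                      min_agreement: float = 0.6) -> Tuple[str, float, bool]:
    """Return (majority answer, agreement ratio, flagged_for_review)."""
    answers = [sample(question).strip().lower() for _ in range(n)]
    answer, count = Counter(answers).most_common(1)[0]
    agreement = count / n
    return answer, agreement, agreement < min_agreement

# Deterministic stand-ins for sampled model outputs
stable = iter(["Paris", "paris", "Paris ", "Lyon", "paris"])
unstable = iter(["1912", "1915", "1912", "1920", "1918"])
stable_result = consistency_check(lambda q: next(stable), "Capital of France?")
unstable_result = consistency_check(lambda q: next(unstable), "Year the company was founded?")
```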

Latency measurement encompasses both model inference time and end-to-end system response time. User experience suffers when AI features feel slow, making performance optimization as important as accuracy improvement.

Cost per task provides the economic foundation for business model validation. Understanding the relationship between input complexity, model choice, and processing costs enables accurate pricing and margin calculation for AI-powered services.
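To make these dimensions concrete, the sketch below summarizes a batch of evaluation runs into accuracy, p50/p95 latency, and cost per task. The record fields and per-1,000-token prices are assumptions for illustration; check your provider's current pricing. Percentiles come from the standard library's `statistics.quantiles`.

```python
import statistics
from dataclasses import dataclass
from typing import Dict, List

@dataclass
class EvalRecord:
    correct: bool
    latency_ms: float
    input_tokens: int
    output_tokens: int

def summarize(records: List[EvalRecord], usd_per_1k_in: float, usd_per_1k_out: float) -> Dict[str, float]:
    """Aggregate accuracy, latency percentiles, and average cost per task."""
    cuts = statistics.quantiles([r.latency_ms for r in records], n=100, method="inclusive")
    total_cost = sum(r.input_tokens / 1000 * usd_per_1k_in + r.output_tokens / 1000 * usd_per_1k_out
                     for r in records)
    return {
        "accuracy": sum(r.correct for r in records) / len(records),
        "p50_ms": cuts[49],   # 50th percentile
        "p95_ms": cuts[94],   # 95th percentile
        "usd_per_task": total_cost / len(records),
    }

runs = [EvalRecord(True, 100, 1000, 500), EvalRecord(True, 200, 1000, 500),
        EvalRecord(False, 300, 1000, 500), EvalRecord(True, 400, 1000, 500)]
report = summarize(runs, usd_per_1k_in=0.5, usd_per_1k_out=1.5)
```

Tracking p95 rather than the average keeps slow outliers, which users notice most, visible in the report.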

90-Day Pilot Roadmap (From Hypothesis to Live Users)

Successful AI projects follow structured development phases that balance speed with rigor. This 90-day framework provides a practical path from initial concept to production deployment, with clear milestones and success criteria at each stage.

0–30 Days: Problem Fit, Data Audit, Golden Dataset, Success Metrics

The first month focuses on validating problem-solution fit and establishing the data foundation for AI development. This phase determines whether the proposed AI solution addresses a real business need with measurable impact.

Problem validation involves deep stakeholder interviews, workflow analysis, and competitive research. Understanding how users currently solve the problem, and where existing solutions fall short, provides crucial context for AI system design.

Data audit examines available data sources for quality, completeness, and relevance to the proposed AI solution. This includes technical assessment of data formats, access patterns, and privacy constraints, as well as business evaluation of data representativeness and potential biases.

Golden dataset creation involves curating high-quality examples that represent the full range of use cases and edge conditions the AI system will encounter. These examples serve as evaluation benchmarks and development test cases, and can also seed training data, provided the held-out evaluation examples are never used for training or prompt tuning.

Success metrics definition establishes both business KPIs (revenue impact, cost reduction, user satisfaction) and technical metrics (accuracy, latency, reliability) that will guide development decisions and measure project success.

31–60 Days: Prototype (RAG/Agent), Guardrails, Stakeholder Testing

The second month focuses on building a functional prototype that demonstrates core AI capabilities while implementing essential safety and reliability measures. This phase validates technical feasibility and gathers initial user feedback.

Prototype development typically centers on either RAG systems for knowledge-intensive applications or agent frameworks for workflow automation. The goal is creating a minimal viable product that showcases the AI solution's value proposition with real data and realistic usage patterns.

Guardrail implementation includes input validation, output filtering, error handling, and audit logging. These systems prevent common AI failure modes like prompt injection, inappropriate responses, and system abuse while maintaining comprehensive operational visibility.
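A first layer of input and output guardrails can be sketched with simple pattern checks. The checks reject inputs that contain common instruction-override phrases and cap input length. They also redact strings that resemble credentials before a response leaves the system, logging each redaction. The phrase list and the key format are hypothetical. Pattern checks like these are easy to bypass and only illustrate where such checks sit; they are usually combined with privilege separation and model-based classifiers.

```python
import logging
import re
from typing import Optional

log = logging.getLogger("guardrails")

INJECTION_PATTERNS = [  # illustrative phrases, trivially evaded by rewording
    re.compile(r"ignore (all |any )?(previous|prior) instructions", re.I),
    re.compile(r"reveal (the |your )?system prompt", re.I),
]
SECRET_PATTERN = re.compile(r"\b(?:sk|api|key)[-_][A-Za-z0-9]{16,}\b")  # hypothetical key format
MAX_INPUT_CHARS = 4000

def check_input(user_text: str) -> Optional[str]:
    """Return a rejection reason, or None if the input may proceed."""
    if len(user_text) > MAX_INPUT_CHARS:
        return "input too long"
    for pattern in INJECTION_PATTERNS:
        if pattern.search(user_text):
            return "possible prompt injection"
    return None

def filter_output(model_text: str) -> str:
    """Redact strings that look like credentials before returning a response."""
    redacted, count = SECRET_PATTERN.subn("[REDACTED]", model_text)
    if count:
        log.warning("redacted %d secret-like string(s) from output", count)
    return redacted
```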

Stakeholder testing involves structured evaluation sessions with representative users, focusing on user experience, functional accuracy, and business value validation. Feedback from these sessions drives refinement priorities for the final development phase.

61–90 Days: Production Hardening, SLAs, Roll-out Plan

The final month prepares the AI system for production deployment through performance optimization, reliability engineering, and operational readiness activities.

Production hardening addresses scalability, security, and reliability requirements that weren't critical for prototype validation. This includes load testing, security penetration testing, data backup and recovery procedures, and integration testing with production systems.

This process must also close critical knowledge gaps. For instance, studies show that 63% of users mistake cloud syncing for a proper data backup solution, a misconception that can compromise business continuity, because syncing propagates deletions and corruption instead of preserving recoverable point-in-time copies.

Service Level Agreement (SLA) definition establishes performance commitments for availability, response time, and accuracy that align with business requirements. These SLAs drive infrastructure sizing, monitoring implementation, and incident response procedures.
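Availability commitments translate directly into an error budget, and that budget is what drives infrastructure sizing and on-call decisions. A quick calculation, assuming a 30-day month:

```python
def downtime_budget_minutes(availability: float, days: int = 30) -> float:
    """Minutes of allowed unavailability per period for a given availability target."""
    return (1 - availability) * days * 24 * 60

for target in (0.99, 0.999, 0.9999):
    print(f"{target:.2%} -> {downtime_budget_minutes(target):.1f} min/month")
```

This prints budgets of 432.0, 43.2, and 4.3 minutes. Each extra "nine" cuts the allowed downtime tenfold, which is why tighter SLAs quickly demand redundancy and automated failover.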

Roll-out planning defines the deployment strategy, user training program, and success measurement framework. Phased deployment approaches minimize risk while enabling rapid iteration based on real user feedback.
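Phased deployment is often implemented with deterministic percentage bucketing. Each user ID is hashed into one of 100 buckets, and the AI feature is enabled for buckets below the current rollout percentage. A user's assignment therefore stays stable as the percentage grows, say from 5% to 25% to 100%. The feature name below is hypothetical. Feature-flag services offer the same idea with targeting rules and kill switches.

```python
import hashlib

def bucket(user_id: str, feature: str) -> int:
    """Stable bucket 0-99 for a user; salting with the feature name decorrelates rollouts."""
    digest = hashlib.sha256(f"{feature}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest[:8], 16) % 100

def is_enabled(user_id: str, feature: str, rollout_percent: int) -> bool:
    return bucket(user_id, feature) < rollout_percent

users = [f"user-{i}" for i in range(10000)]
early_adopters = [u for u in users if is_enabled(u, "ai_summaries", 5)]
```

Anyone enabled at 5% remains enabled at 25%, so early users never lose the feature mid-rollout.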

Build vs Buy vs Partner

AI implementation strategy requires careful evaluation of internal capabilities, strategic importance, and total cost of ownership. The right approach depends on competitive positioning, resource constraints, and long-term business objectives.

Decision Matrix (Differentiation, Time-to-Value, TCO)

The build-versus-buy decision should be evaluated across three primary dimensions: competitive differentiation potential, time-to-value requirements, and total cost of ownership over the solution lifecycle.

High-differentiation applications that directly impact competitive positioning often justify custom development, especially when proprietary data or unique domain expertise provides advantages. Core business processes that define customer value propositions typically warrant internal development to maintain strategic control.

Time-to-value considerations favor purchasing solutions when speed to market is critical and acceptable commercial options exist. However, vendor solutions may limit customization and create dependencies that constrain future innovation.

Total cost of ownership extends beyond initial development or licensing costs to include ongoing maintenance, integration expenses, and opportunity costs of alternative approaches. Custom solutions often have higher upfront costs but may provide better long-term economics for high-volume or specialized use cases.

Vendor Diligence: Data Ownership, SLAs, Exit Plan

Vendor evaluation for AI services requires careful attention to data handling, performance guarantees, and relationship termination procedures. These factors significantly impact both operational risk and strategic flexibility.

- Data ownership – determine how vendor AI services can use customer data for model training, improvement, or other purposes. Clear contractual language around data residency, processing restrictions, and deletion procedures protects sensitive business information.

- Service level agreements for AI – vendors should address accuracy, availability, response time, and data security metrics. Unlike traditional software SLAs, AI performance can degrade over time due to data drift or model staleness, making ongoing performance monitoring and vendor accountability crucial.

- Exit planning – ensures business continuity when vendor relationships end, either through contract termination or business failure. This includes data export capabilities, API transition periods, and alternative vendor qualification procedures.

Where Custom Software Development Compounds the Advantage

Organizations with unique data assets, specialized domain expertise, or innovative business models often benefit from custom AI solutions that precisely align with strategic objectives.

Custom development enables rapid iteration and experimentation that may not be possible with vendor solutions. The ability to quickly test new approaches, integrate proprietary datasets, and optimize for specific use cases accelerates innovation cycles and competitive positioning.

Integration advantages of custom solutions include seamless workflow embedding, unified user experiences, and elimination of data silos that plague multi-vendor architectures. These benefits compound over time as AI capabilities expand across more business functions.

Risk, Compliance & Governance

AI systems introduce new categories of risks that require proactive management through technical controls, policy frameworks, and governance processes. Effective risk management enables innovation while protecting business interests and stakeholder trust.

AI Security, Prompt Injection, Data Leakage, RBAC/Audit Trails

AI security encompasses traditional application security concerns plus AI-specific vulnerabilities like prompt injection, model poisoning, and inference attacks. Prompt injection attempts to manipulate AI system behavior through carefully crafted inputs, while data leakage risks exposing training data or sensitive information through model responses.

Role-based access control (RBAC) for AI systems should restrict both input capabilities and output access based on user roles and data sensitivity. Administrative functions like model updates, prompt modifications, and system configuration require elevated privileges and comprehensive audit trails.

Audit trails for AI systems must capture both technical events (model calls, input/output data, system errors) and business events (user actions, decision outcomes, human overrides). These logs support compliance reporting, incident investigation, and system improvement initiatives.
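RBAC and audit trails fit together in a few lines. In this minimal sketch, the roles and permission names are hypothetical. Every action is checked against a role-permission map, and every attempt, allowed or denied, is appended to an audit log with a UTC timestamp. A production system would write these events to tamper-evident storage rather than a Python list.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List, Set

ROLE_PERMISSIONS: Dict[str, Set[str]] = {  # hypothetical roles and permissions
    "analyst": {"query_model"},
    "ml_engineer": {"query_model", "edit_prompt"},
    "admin": {"query_model", "edit_prompt", "deploy_model"},
}

@dataclass
class AuditLog:
    events: List[dict] = field(default_factory=list)

    def record(self, user: str, role: str, action: str, allowed: bool) -> None:
        self.events.append({
            "ts": datetime.now(timezone.utc).isoformat(),
            "user": user, "role": role, "action": action, "allowed": allowed,
        })

def authorize(user: str, role: str, action: str, audit: AuditLog) -> bool:
    """Check the action against the role's permissions and log the attempt either way."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    audit.record(user, role, action, allowed)
    return allowed

audit = AuditLog()
analyst_edit = authorize("ana", "analyst", "edit_prompt", audit)
engineer_edit = authorize("eli", "ml_engineer", "edit_prompt", audit)
```

Logging denied attempts, not just successful ones, is what makes the trail useful for incident investigation.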

AI Governance Policies, Documentation, Human Oversight

AI governance frameworks establish organizational policies and procedures for AI development, deployment, and operation. These frameworks should address risk assessment, approval processes, documentation requirements, and ongoing monitoring responsibilities.

Documentation requirements for AI systems extend beyond traditional software documentation to include model cards, data lineage, bias testing results, and ethical considerations. This documentation supports regulatory compliance, stakeholder communication, and knowledge transfer.

Human oversight mechanisms ensure appropriate human involvement in AI decision-making processes, particularly for high-stakes applications. This includes defining escalation criteria, approval workflows, and feedback collection processes that maintain human agency while preserving automation benefits.

Model Monitoring & Rollback

Production AI systems require continuous monitoring for performance degradation, data drift, and unexpected behavior changes. Model performance can decline over time as real-world conditions diverge from training data, making proactive monitoring essential for maintaining system reliability.

Rollback capabilities enable rapid response to model performance issues or unexpected system behavior. This includes maintaining previous model versions, automated rollback triggers based on performance thresholds, and manual override procedures for emergency situations.
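An automated rollback trigger can be as simple as tracking a rolling quality metric for the new model version and switching traffic back to the previous version when the metric falls below a threshold. Here the metric is the share of responses that pass an automated check. The metric, window size, threshold, and version names below are hypothetical. Waiting for a full window avoids reacting to a few early failures.

```python
from collections import deque
from typing import Deque

class RollbackMonitor:
    """Serve `candidate` until its rolling pass rate drops below `threshold`, then fall back."""

    def __init__(self, candidate: str, fallback: str, threshold: float = 0.9, window: int = 50) -> None:
        self.active = candidate
        self.fallback = fallback
        self.threshold = threshold
        self.results: Deque[bool] = deque(maxlen=window)
        self.rolled_back = False

    def observe(self, passed: bool) -> str:
        """Record one outcome and return the version that should serve the next request."""
        if self.rolled_back:
            return self.active
        self.results.append(passed)
        window_full = len(self.results) == self.results.maxlen
        if window_full and sum(self.results) / len(self.results) < self.threshold:
            self.active = self.fallback  # automated rollback
            self.rolled_back = True      # stay on the fallback until someone intervenes
        return self.active

monitor = RollbackMonitor("model-v2", "model-v1", threshold=0.9, window=10)
served = [monitor.observe(ok) for ok in [True] * 8 + [False, False]]
```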

Data drift detection identifies when input data characteristics change significantly from training distributions, potentially impacting model accuracy and reliability. Early detection enables proactive model retraining or system adjustments before user experience degrades.
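One widely used drift statistic is the population stability index (PSI). It compares the binned distribution of a feature in production against its training distribution:

PSI = Σ (actual_i - expected_i) · ln(actual_i / expected_i)

The sketch uses equal-width bins over the training range and a small epsilon to avoid taking the log of zero. PSI below 0.1 is commonly read as stable and above 0.25 as significant drift, but these thresholds are a rule of thumb, not a standard.

```python
import math
from typing import List, Sequence

def psi(expected: Sequence[float], actual: Sequence[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population stability index between a reference (training) sample and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference feature

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values into edge bins
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

training = [i / 100 for i in range(100)]        # hypothetical feature values seen in training
shifted = [0.5 + i / 200 for i in range(100)]   # live values concentrated in the upper half
```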

ROI & Metrics: Proving Value

Successful AI implementations require clear measurement frameworks that connect technical performance to business outcomes. Effective metrics enable continuous optimization, stakeholder communication, and investment justification.

Business KPIs (Revenue Influence, Deflection, Cycle-Time Cuts)

Revenue influence metrics quantify how AI systems impact sales, customer retention, and market expansion. These measurements require careful attribution modeling to isolate AI contributions from other business factors while accounting for indirect effects and long-term customer value.

Deflection metrics measure how AI systems reduce manual work, support inquiries, or process bottlenecks. Cost avoidance calculations should include both direct labor savings and productivity improvements that enable staff to focus on higher-value activities.

Cycle time reductions demonstrate operational efficiency gains from AI automation. These metrics should capture end-to-end process improvements rather than individual task acceleration, accounting for integration overhead and quality assurance requirements.

Model Metrics (Quality, Hallucination, Latency, Cost per Task)

Technical performance metrics provide the foundation for system optimization and reliability management. Quality metrics vary by application but should align with business success criteria while remaining measurable and actionable.

Hallucination detection and measurement become crucial for applications where factual accuracy directly impacts business outcomes. Detection techniques include consistency checking, external validation, and confidence scoring, with measurement frameworks that track both frequency and severity.

Latency measurement encompasses both model inference time and complete user interaction cycles. Performance benchmarking should reflect realistic usage patterns and system load conditions while identifying optimization opportunities.

Cost per task provides the economic foundation for business model validation and pricing optimization. Understanding cost drivers (input complexity, model choice, and infrastructure utilization) enables accurate financial planning and margin management.

Experiment Design: Offline vs Online A/B, Cohort Analysis

Rigorous experiment design enables confident decision-making about AI system changes and business impact attribution. Offline evaluation using historical data provides rapid feedback during development, while online A/B testing validates real-world performance and user behavior.

Cohort analysis tracks user groups over time to measure AI system impact on retention, engagement, and business outcomes. This longitudinal perspective reveals delayed effects and cumulative benefits that simple before-and-after comparisons might miss.

Statistical significance testing and sample size calculation ensure experiment results are reliable and generalizable. Power analysis helps determine appropriate experiment duration and participant allocation, while running experiments across full business cycles helps control for seasonal effects and external factors.
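For a conversion-style metric, power analysis can use the standard normal approximation for two proportions. The sketch below relies only on the standard library's `statistics.NormalDist`. It computes users per arm for a two-sided test, plus a pooled two-proportion z-test for reading out the result. The baseline rate, lift, and conversion counts are hypothetical inputs.

```python
import math
from statistics import NormalDist

def sample_size_per_arm(p_control: float, p_treatment: float,
                        alpha: float = 0.05, power: float = 0.8) -> int:
    """Users needed in each arm to detect p_control -> p_treatment (two-sided, normal approximation)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p_control + p_treatment) / 2
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p_control * (1 - p_control)
                                      + p_treatment * (1 - p_treatment))) ** 2
    return math.ceil(numerator / (p_control - p_treatment) ** 2)

def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value for a difference in conversion rates (pooled z-test)."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))

needed = sample_size_per_arm(0.10, 0.12)  # detect a lift from 10% to 12% conversion
```

Detecting a two-point lift on a 10% baseline needs roughly 3,800 users per arm at 80% power. Dividing that by daily eligible traffic gives the minimum experiment duration.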

Case Study: The Unicorn App

Practical case studies show how digital platforms face real challenges, demand precise solutions, and drive measurable business outcomes. The Unicorn App project demonstrates how a custom vendor-matching platform can transform the process of finding reliable contractors, overcome technical stagnation, and achieve sustainable growth.

Problem & Solution: Vendor Discovery, Code Stability, Platform Scalability

Software platforms often struggle with performance bottlenecks, poor code quality, and a lack of effective contractor matching features. For Unicorn App, these issues led to limited growth and user dissatisfaction:

- Incomplete development with a previous vendor meant years of delay and no meaningful updates.

- Code quality issues prevented stability, caused bugs, and stalled user adoption.

- Lack of scalability left the platform unable to attract new customers or support further development.

Our team addressed these challenges by redesigning the platform’s architecture, introducing a robust vendor discovery model, and implementing AI-powered project briefs to streamline collaboration.

Vendor Matching & Technical Enhancements

- Vendor discovery engine – Users are automatically connected with five vetted software companies matching their project needs, with options to expand contractor pools through premium features.

- Code overhaul and bug fixing – Legacy performance issues were resolved through thorough retesting, stabilization, and structured release cycles.

- Future-ready updates – The platform roadmap now supports new features, scalable integrations, and user-centric improvements.

AI Ideas Unlocked

The Unicorn App platform went beyond static contractor listings, using AI to enhance the entire selection and collaboration process:

- Project brief copilots – AI-generated project descriptions help customers clearly communicate requirements, reducing misunderstandings and mismatched proposals.

- Vendor recommendation logic – Matching algorithms prioritize relevance based on company expertise, reviews, and performance history.

- Growth-focused data insights – Continuous system monitoring identifies bottlenecks and informs strategic improvements for future scaling.
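
The case study does not publish its matching algorithm, so the following is only a generic sketch of how relevance ranking on expertise, reviews, and performance history might work. It uses a weighted score and returns the top five vendors, mirroring the five-company default described above. The `Vendor` fields and the weights are hypothetical, not Unicorn App's actual data model.

```python
from dataclasses import dataclass
from typing import Set


@dataclass
class Vendor:
    name: str
    skills: Set[str]
    avg_rating: float    # review score on a 0-5 scale
    on_time_rate: float  # share of past projects delivered on schedule (0-1)


# Hypothetical weights; a real system would tune these against match outcomes.
WEIGHTS = {"expertise": 0.5, "reviews": 0.3, "history": 0.2}


def match_score(vendor: Vendor, required_skills: Set[str]) -> float:
    # Expertise: share of the project's required skills the vendor covers
    expertise = len(vendor.skills & required_skills) / len(required_skills) if required_skills else 0.0
    reviews = vendor.avg_rating / 5
    return (WEIGHTS["expertise"] * expertise
            + WEIGHTS["reviews"] * reviews
            + WEIGHTS["history"] * vendor.on_time_rate)


def recommend(vendors, required_skills, k=5):
    """Return the k highest-scoring vendors for a project."""
    return sorted(vendors, key=lambda v: match_score(v, required_skills), reverse=True)[:k]


vendors = [
    Vendor("Alpha", {"python", "react", "aws"}, 4.5, 0.9),
    Vendor("Beta", {"python"}, 5.0, 1.0),
    Vendor("Gamma", {"java"}, 4.0, 0.8),
    Vendor("Delta", {"react", "aws"}, 4.0, 0.7),
    Vendor("Epsilon", {"python", "aws"}, 3.0, 0.5),
    Vendor("Zeta", {"go"}, 2.0, 0.4),
]
print([v.name for v in recommend(vendors, {"python", "react", "aws"})])
# ['Alpha', 'Delta', 'Beta', 'Epsilon', 'Gamma']
```

A transparent weighted score is easy to explain to customers and vendors; once enough interaction data accumulates, the weights can be learned from which recommendations actually lead to signed projects.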

Data & Stack

The technical implementation of Unicorn App was designed for stability and scalability:

- Codebase overhaul with retesting and modernization for stable performance.

- Vendor catalog integration powered by AI-based filtering and ranking logic.

- User interaction data analyzed to improve vendor matching and personalization.

- Ongoing support and monitoring maintain long-term reliability and enable continued feature rollouts.

Lessons Learned & Ongoing Development

The Unicorn App case showed how critical code quality and structured execution plans are for platform recovery and growth. Key insights included:

- Stable technical foundations enable meaningful product innovation.

- AI-powered briefs and structured vendor discovery significantly reduce friction in contractor selection.

- Regular collaboration with stakeholders accelerates adoption and ensures alignment with business goals.

The project is still ongoing, with new features being added to engage more users and extend the platform’s market impact.

Conclusion

This comprehensive playbook provides the strategic framework and technical foundation for successful AI startup development. From initial concept validation through production deployment, these proven patterns and best practices accelerate time-to-value while minimizing implementation risks.

The key to AI success lies in aligning technological capabilities with clear business objectives, maintaining focus on measurable outcomes, and building robust systems that scale with user adoption and business growth.

Whether you're exploring specific AI applications or developing comprehensive AI strategies, booking a free consultation connects you with experienced practitioners who can help scope a targeted 90-day pilot that delivers measurable business value.

The AI revolution is creating unprecedented opportunities for innovation and growth. With the right approach, strategic focus, and technical execution, your organization can build AI solutions that drive meaningful business transformation and sustainable competitive advantage.

FAQ

What are the best AI startup ideas for CTOs?

High-value areas include personalization/pricing engines, churn prediction, AI customer support, agentic workflows (tool-using agents), code/test generation, incident summarization, and vertical plays in healthcare, fintech, logistics, education, and events. Prioritize ideas that deliver measurable wins in revenue, margin, and cycle-time reduction.

Which AI SaaS ideas with fast payback should founders prioritize?

SMB copilots (CRM/accounting/HRIS), analytics copilots on top of BI/warehouse, and document intelligence for contracts/invoices/KYC deliver clear ROI: e.g., 20–35% sales-team productivity gains, time-to-insight cut from hours to minutes, and 60–80% reductions in manual document handling.

What RAG architecture examples actually move the needle?

Use RAG for knowledge-heavy workflows: policy/Q&A assistants, product support, compliance guidance, and festival/event info retrieval. Advanced patterns (hierarchical/hybrid retrieval, vector DBs like pgvector/Pinecone/Weaviate) improve factuality and freshness while enabling source-cited responses.
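
One common way to implement hybrid retrieval is reciprocal rank fusion (RRF), which merges a keyword ranking and a vector ranking without having to calibrate their raw scores. The sketch below assumes both ranked lists of document IDs already exist (for example from full-text search and a pgvector similarity query). It then numbers the top passages so the model can cite them. The document IDs and corpus are illustrative.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Merge ranked lists of doc IDs; each list adds 1 / (k + rank) per document.
    k=60 is the constant commonly used for RRF."""
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda d: scores[d], reverse=True)


def build_cited_context(doc_ids, corpus, top_n=3):
    """Number retrieved passages so the generated answer can cite them as [1], [2], ..."""
    lines = []
    for i, doc_id in enumerate(doc_ids[:top_n], start=1):
        doc = corpus[doc_id]
        lines.append(f"[{i}] ({doc['source']}) {doc['text']}")
    return "\n".join(lines)


keyword_hits = ["refund-policy", "shipping-faq", "warranty"]
vector_hits = ["warranty", "refund-policy", "returns-howto"]
fused = reciprocal_rank_fusion([keyword_hits, vector_hits])
print(fused)  # ['refund-policy', 'warranty', 'shipping-faq', 'returns-howto']

corpus = {
    "refund-policy": {"source": "policies/refunds.md", "text": "Refunds are issued within 14 days."},
    "warranty": {"source": "policies/warranty.md", "text": "Hardware carries a one-year warranty."},
    "shipping-faq": {"source": "faq/shipping.md", "text": "Orders ship within two business days."},
    "returns-howto": {"source": "guides/returns.md", "text": "Start a return from your order page."},
}
print(build_cited_context(fused, corpus))
```

Documents that rank well in both lists rise to the top, which is why hybrid retrieval tends to help with exact terms (SKUs, policy names) that pure embedding search can miss.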

How does an analytics copilot over warehouse reduce time-to-insight?

It translates natural-language questions into SQL, generates charts, explains results in business terms, and plugs into existing BI (Tableau/Power BI/Looker), shrinking analysis from hours to minutes and democratizing access beyond data teams.
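
Because the SQL comes from a model, it is worth executing it behind guardrails. The sketch below leaves out the natural-language-to-SQL step (assumed to be an LLM call) and shows only the execution side against SQLite. It accepts a single `SELECT`/`WITH` statement and sets SQLite's `query_only` pragma so that any write slipping past the prefix check is still refused. The table and query are illustrative.

```python
import sqlite3


def run_generated_sql(conn: sqlite3.Connection, sql: str):
    """Execute model-generated SQL only if it is a single read-only query.
    Note: query_only stays on for this connection, so use a dedicated one."""
    statement = sql.strip().rstrip(";")
    if ";" in statement:
        raise ValueError("multiple statements are not allowed")
    if not statement.lower().startswith(("select", "with")):
        raise ValueError("only SELECT queries are allowed")
    conn.execute("PRAGMA query_only = ON")  # SQLite now rejects writes on this connection
    cursor = conn.execute(statement)
    columns = [col[0] for col in cursor.description]
    return columns, cursor.fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (region TEXT, amount REAL)")
conn.executemany("INSERT INTO orders VALUES (?, ?)", [("EU", 120.0), ("EU", 80.0), ("US", 50.0)])
conn.commit()

# Imagine this string came back from the model for "What is revenue by region?"
generated = "SELECT region, SUM(amount) AS revenue FROM orders GROUP BY region ORDER BY region;"
print(run_generated_sql(conn, generated))
# (['region', 'revenue'], [('EU', 200.0), ('US', 50.0)])
```

The returned column names and rows are what a copilot would pass to a charting layer or back to the model for a plain-language explanation.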

What are the top document intelligence AI use cases (contracts, invoices, KYC)?

Contract review (clause extraction, deviation alerts), invoice processing (multi-format OCR + posting), and KYC onboarding (ID extraction + watchlist checks) drive 60–90% manual effort reduction, higher accuracy, and faster cycle times while improving compliance.
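
Deviation alerts typically sit downstream of extraction: once a model has pulled clause values out of a contract, simple rules compare them with the legal team's playbook. In the sketch below, the clause names and thresholds are hypothetical examples, and the `extracted` dictionary stands in for whatever the extraction step returns.

```python
# Hypothetical playbook: acceptance rule per clause, as a contract team might define it.
PLAYBOOK = {
    "payment_terms_days": lambda v: v <= 45,
    "liability_cap_multiple": lambda v: v >= 1.0,
    "auto_renewal": lambda v: v is False,
}


def deviation_alerts(extracted):
    """Compare clause values extracted from a contract against the playbook."""
    alerts = []
    for clause, is_acceptable in PLAYBOOK.items():
        if clause not in extracted:
            # Missing clauses are flagged rather than assumed acceptable
            alerts.append(f"{clause}: not found, needs manual review")
        elif not is_acceptable(extracted[clause]):
            alerts.append(f"{clause}: {extracted[clause]!r} deviates from playbook")
    return alerts


print(deviation_alerts({"payment_terms_days": 60, "auto_renewal": False}))
# ['payment_terms_days: 60 deviates from playbook', 'liability_cap_multiple: not found, needs manual review']
```

Routing only flagged contracts to a reviewer is where most of the manual-effort reduction comes from; clean contracts pass straight through.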
